The Future of Edge Rendering and CDN-First Architectures

Modern apps are no longer anchored to a single region. Users expect instant responses wherever they are, and platforms keep pushing compute closer to them. That is why edge rendering (executing rendering logic on globally distributed nodes) has moved from “interesting experiment” to pragmatic default. In a CDN-first architecture, your content delivery network is not just a cache; it’s your primary execution plane for latency-sensitive work, with origin services demoted to a durable backing role. Edge rendering closes the distance between data and users, trims tail latency, and opens new possibilities for personalization without hauling every request back to origin.

Over the last two years, CDNs have grown from static accelerators into full platforms with HTTP/3 by default, KV/edge stores, durable objects, queues, WebAssembly, and regionless functions. Providers like Cloudflare, Fastly, Akamai, and Vercel now offer mature primitives for edge rendering, routing, and data locality, unlocking architectures that are faster, safer, and cheaper to scale globally. This post outlines why CDN-first thinking wins, which edge rendering patterns actually work, and how to transition safely.

Sources: CDN vendor share and usage patterns (HTTP Archive Web Almanac 2024), HTTP/3 adoption stats (W3Techs Aug 2025), CDN-driven protocol adoption (HTTP Archive HTTP chapter 2024).

What “CDN-First” Means in 2025

A CDN-first architecture treats the CDN as the frontline for request handling: TLS termination, protocol negotiation (H3/QUIC), smart routing, programmable logic, and edge rendering for the critical path. Origin services become “systems of record” that expose APIs, events, and jobs rather than rendering HTML for every request.

Why now? CDNs remain the main driver of modern protocol adoption; <4% of CDN requests still use HTTP/1.1, vs. up to 29% for non-CDN traffic. The edge is where new capabilities land first.

Reality check: Cloudflare leads base-HTML CDN share (≈55%), followed by Google (≈23%), AWS CloudFront and Fastly (≈6% each). This reach matters when you move rendering to the perimeter.

Why Edge Rendering Is Becoming the Default

Lower tail latency:

Rendering at the POP nearest the user removes a cross-continent hop and avoids congested middle miles. HTTP/3, already used by ~35% of websites and climbing, further improves connection setup and loss recovery on real-world networks.

Protocol advantage:

CDNs are the first to deploy HTTP/2 and HTTP/3 at scale. That translates into real-world wins (multiplexing, 0-RTT resumption, fewer head-of-line stalls) that benefit edge rendering immediately.

Security posture:

At-edge WAF/DDoS shields and bot controls stop bad traffic before it reaches origin. Cloudflare reports blocking 20.5 million DDoS attacks in Q1 2025 alone, evidence of mitigation at massive edge scale.

Developer velocity:

Shipping logic to the edge means faster feature rollouts, A/Bs, and regional experiments without complex multi-region origin orchestration.

AI & privacy trends:

Processing PII or model prompts near users reduces data movement. The 2024–2025 rollout of post-quantum key agreement across major networks shows how perimeter platforms set security defaults globally.

Edge Rendering Patterns That Work

Static Generation + Incremental Revalidation at the Edge

Use static generation for the long tail and revalidate at the edge on freshness signals (cookies, headers, tags). This hybrid keeps TTFB predictable while allowing per-user touches at near-zero added latency. Edge rendering here is the “fast path” that avoids hitting origin unless content is stale.
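A minimal sketch of that fast-path decision, assuming each cached page records the content tags it was built from and origin pushes a new version number for a tag whenever that content changes. `TaggedEntry`, `decide`, and the tag-version map are hypothetical names, not a vendor API, and only the tag and age signals are modeled:

```typescript
// Hypothetical shape of a statically generated page held at the edge.
interface TaggedEntry {
  html: string;
  builtAt: number; // epoch ms when the page was generated
  tags: Record<string, number>; // content tag -> version seen at build time
}

type Decision = "serve" | "serve-and-revalidate" | "render-from-origin";

// Decide how to answer from the edge copy.
// tagVersions holds the latest version per tag (assumed to be pushed by origin on publish).
function decide(
  entry: TaggedEntry | undefined,
  tagVersions: ReadonlyMap<string, number>,
  now: number,
  maxAgeMs: number,
): Decision {
  // Miss: the only case that blocks on origin.
  if (!entry) return "render-from-origin";

  // A tag is stale when origin has published a newer version than the one we built with.
  const built = entry.tags;
  const tagChanged = Object.keys(built).some(
    (tag) => (tagVersions.get(tag) ?? built[tag]) > built[tag],
  );
  const tooOld = now - entry.builtAt > maxAgeMs;

  // Stale pages are still served once while a background rebuild runs.
  return tagChanged || tooOld ? "serve-and-revalidate" : "serve";
}

// Usage: a category page built against version 3 of its tag.
const shoesPage: TaggedEntry = { html: "<h1>Shoes</h1>", builtAt: 0, tags: { "category:shoes": 3 } };
const latest = new Map<string, number>([["category:shoes", 3]]);
console.log(decide(shoesPage, latest, 1000, 60000)); // serve
latest.set("category:shoes", 4); // origin published a change
console.log(decide(shoesPage, latest, 1000, 60000)); // serve-and-revalidate
```

Cookie- or header-based signals (for example, a preview cookie that forces a fresh render) would slot in as extra conditions in the same function.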

Middleware-Driven Personalization

Run authentication, geolocation, A/B buckets, and feature gating in edge middleware. Attach lightweight data (geo, device class, cohort) to requests and invoke edge rendering with that context for above-the-fold HTML.
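As an illustration, the sketch below derives that lightweight context in plain TypeScript. It assumes the platform already supplies a country code and an opaque user ID; `EdgeFacts`, `buildContext`, the `checkout-v2` experiment, and the 10% split are hypothetical. Bucketing uses a 32-bit FNV-1a hash, so a user lands in the same cohort at every POP without a lookup:

```typescript
// Facts the edge already has for a request (assumed: geo from the CDN, ID from a signed cookie).
interface EdgeFacts {
  userId: string;
  country: string;
  userAgent: string;
}

// Lightweight context handed to the renderer for above-the-fold HTML.
interface RenderContext {
  country: string;
  deviceClass: "mobile" | "desktop";
  cohort: "control" | "variant";
}

// 32-bit FNV-1a over UTF-16 code units: fast and deterministic; fine for bucketing, not for security.
function fnv1a(input: string): number {
  let hash = 0x811c9dc5;
  for (let i = 0; i < input.length; i++) {
    hash ^= input.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return hash >>> 0;
}

// The same user and experiment always map to the same cohort, at every POP, with no lookup.
function assignCohort(userId: string, experiment: string, variantPercent: number): "control" | "variant" {
  return fnv1a(`${experiment}:${userId}`) % 100 < variantPercent ? "variant" : "control";
}

function buildContext(facts: EdgeFacts): RenderContext {
  return {
    country: facts.country,
    // Coarse UA sniffing is an assumption; client hints are an alternative where available.
    deviceClass: /Mobi|Android/i.test(facts.userAgent) ? "mobile" : "desktop",
    cohort: assignCohort(facts.userId, "checkout-v2", 10), // hypothetical experiment, 10% variant
  };
}

// Usage
const ctx = buildContext({ userId: "u_123", country: "DE", userAgent: "Mozilla/5.0 (Windows NT 10.0; Win64; x64)" });
console.log(ctx.country, ctx.deviceClass); // DE desktop
```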

Edge Data with Stale-While-Revalidate

Lean on edge KV or durable objects for micro-caches (menus, price bands, i18n bundles), plus SWR headers. Let background tasks push updates from origin to edge storage. You’ll render fast, then reconcile. (Fastly Compute and Cloudflare Durable Objects/Workers commonly power this.)
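A minimal in-memory version of that render-fast-then-reconcile loop, assuming a single isolate and an async loader that reads from origin or edge storage. `SwrCache` and its parameters are hypothetical; on a real platform the `Map` would typically be backed by edge KV or a durable object:

```typescript
// Loads the authoritative value (assumed: an origin API or edge storage read).
type Loader<T> = (key: string) => Promise<T>;

interface Slot<T> {
  value: T;
  storedAt: number; // ms timestamp of the last successful load
}

// Hypothetical micro-cache with stale-while-revalidate semantics, local to one isolate.
class SwrCache<T> {
  private readonly slots = new Map<string, Slot<T>>();
  private readonly inFlight = new Set<string>(); // keys with a background refresh running

  constructor(
    private readonly load: Loader<T>,
    private readonly maxAgeMs: number, // fresh window
    private readonly swrMs: number, // extra window where stale data is still served
    private readonly now: () => number = () => Date.now(),
  ) {}

  async get(key: string): Promise<T> {
    const slot = this.slots.get(key);
    const age = slot ? this.now() - slot.storedAt : Infinity;

    if (slot && age <= this.maxAgeMs) return slot.value; // fresh hit

    if (slot && age <= this.maxAgeMs + this.swrMs) {
      // Stale but servable: answer immediately, refresh at most once in the background.
      if (!this.inFlight.has(key)) {
        this.inFlight.add(key);
        const done = () => {
          this.inFlight.delete(key);
        };
        // On failure we keep serving stale; a later request retries.
        this.refresh(key).then(done, done);
      }
      return slot.value;
    }

    // Missing or too stale: block on the loader.
    return this.refresh(key);
  }

  private async refresh(key: string): Promise<T> {
    const value = await this.load(key);
    this.slots.set(key, { value, storedAt: this.now() });
    return value;
  }
}
```

The same policy can be advertised to shared caches with the standard `Cache-Control: max-age=60, stale-while-revalidate=300` header (RFC 5861); those numbers are only an example.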

WASM for Hot Paths

Move CPU-bound transforms (image policy checks, Markdown→HTML conversion, sanitizers/validators) into WebAssembly modules at the edge. This keeps the edge rendering path fast without bloating JS runtimes and improves cold-start determinism on smaller footprints. (See vendor WASM roadmaps, such as Fastly Compute’s.)

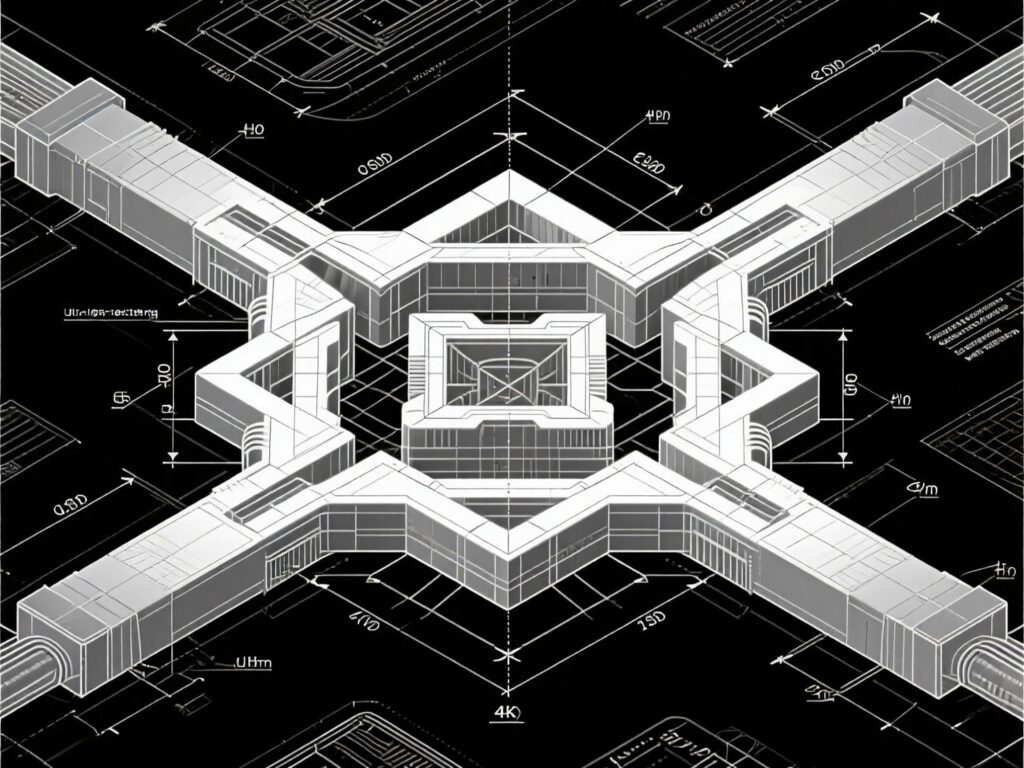

A CDN-First Reference Blueprint

A practical layout looks like this:

Client → Edge POP: TLS, H3/QUIC, bot/WAF, request normalization.

Edge middleware: auth/session, geo, experiments, cache keys.

Edge compute for HTML: edge rendering of critical route groups; stream HTML early.

Edge data: KV/edge DB for hot reads; queues for write-behind.

Origin control plane: APIs, jobs, search, analytics, CMS; emits events to edge.

Observability: synthetic checks from regions; trace + logs aggregated by POP.

Cloudflare’s reference architecture highlights Tiered Cache and anycast routing patterns that reduce origin egress and amplify the edge advantages described here.
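The “request normalization” and “cache keys” steps in this layout can be sketched as a single function. It assumes tracking parameters never change the rendered HTML and that the render varies only by country and experiment cohort; `TRACKING_PARAMS`, `KeyContext`, and `cacheKey` are hypothetical names:

```typescript
// Query parameters assumed never to affect the rendered HTML (hypothetical list).
const TRACKING_PARAMS = new Set(["utm_source", "utm_medium", "utm_campaign", "gclid", "fbclid"]);

// Dimensions the edge renderer is assumed to vary on.
interface KeyContext {
  country: string;
  cohort: string;
}

// Normalize the request URL and build a cache key from only what the render reads.
function cacheKey(rawUrl: string, ctx: KeyContext): string {
  // The URL parser already lowercases the host and separates the #fragment, which we ignore.
  const url = new URL(rawUrl);

  const kept: [string, string][] = [];
  url.searchParams.forEach((value, name) => {
    if (!TRACKING_PARAMS.has(name)) kept.push([name, value]);
  });
  // Sort by name so ?a=1&b=2 and ?b=2&a=1 share one entry (stable sort keeps repeated names in order).
  kept.sort((x, y) => (x[0] < y[0] ? -1 : x[0] > y[0] ? 1 : 0));

  const path = url.pathname.replace(/\/+$/, "") || "/"; // "/shoes/" -> "/shoes"
  const query = new URLSearchParams(kept).toString();

  return `${url.host}${path}${query ? `?${query}` : ""}|geo=${ctx.country}|cohort=${ctx.cohort}`;
}

console.log(cacheKey("https://Shop.Example.com/shoes/?utm_source=ad&size=42&color=red", { country: "JP", cohort: "control" }));
// shop.example.com/shoes?color=red&size=42|geo=JP|cohort=control
```

Keeping the key to the dimensions the render actually reads is what keeps hit ratios high; every extra dimension (a raw user agent, a full cookie string) splits the cache.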

The Tooling Landscape (And Where Each Shines)

Cloudflare Workers/Pages/Durable Objects: Mature perimeter primitives, queues, storage; strong security posture and global anycast. (See 2024/25 security & adoption reports.)

Fastly Compute (WASM): High-performance WASM runtime and edge state patterns; excellent for low-latency transformations and programmable caching.

Akamai EdgeWorkers: JS at the edge integrated with the massive Akamai footprint; ideal when you’re already on Akamai delivery.

Vercel Functions (Edge/Regional): Deep framework integration (Next.js), new fluid compute and Rust-powered functions aimed at consistency and cold-start minimization.

Mini Case Studies

Case 1 — E-commerce Globalization (Hypothetical Synthesis + Benchmarks)

A retailer consolidates three regional SSR stacks into a single CDN-first deployment. By shipping edge rendering for product/category pages and moving price/i18n tables into edge KV, they cut median TTFB from 600–800 ms to under 200 ms for APAC traffic. Third-party benchmarks (openstatus.dev) show edge functions can be ~9× faster on cold start than regional serverless and ~2× faster when warm, consistent with the team’s observed behavior during promos.

Case 2 — Media Personalization on WASM

A publisher runs personalization and content gating inside WASM on Fastly Compute, caching fragments and streaming HTML early. The approach keeps edge rendering responsive during spikes, with origin limited to analytics and editorial tools. (See vendor briefs on edge personalization.)

Pitfalls and Anti-Patterns (and How to Avoid Them)

Stateful sessions at the edge: Don’t. Use signed, short-lived tokens or edge KV keyed by an opaque session ID.

Chatty origin dependencies: Coalesce origin fetches behind edge caches or deploy regional replicas.

Cold starts from bloated bundles: Keep edge rendering bundles lean; prefer WASM for heavy transforms; Vercel’s “fluid compute” can mitigate cold starts for busy paths.

Global writes: Adopt an “edge-read, region-write” policy with idempotent queues; reconcile via events.
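The “edge-read, region-write” item can be illustrated with a region-side consumer that applies queued writes exactly once even when the queue redelivers them. This is a sketch under stated assumptions: `RegionWriter`, `WriteMessage`, and the cart-quantity example are hypothetical, and the in-memory `Set` of applied keys stands in for a durable table with a unique constraint:

```typescript
// A write captured at the edge and queued to the home region (hypothetical shape).
interface WriteMessage {
  idempotencyKey: string; // minted once at the edge and reused on every retry
  cartId: string;
  sku: string;
  delta: number; // quantity change; deltas are why duplicates must be skipped
}

// Event the region emits so edge read copies can reconcile.
interface ChangeEvent {
  key: string;
  quantity: number;
}

// Region-side consumer: applies each message at most once even under at-least-once delivery.
class RegionWriter {
  // Stand-in for a durable table; a real store would record the key and the
  // state change in one transaction.
  private readonly applied = new Set<string>();
  private readonly quantities = new Map<string, number>();

  constructor(private readonly emit: (event: ChangeEvent) => void) {}

  apply(msg: WriteMessage): boolean {
    if (this.applied.has(msg.idempotencyKey)) return false; // redelivery: no double count

    const key = `${msg.cartId}:${msg.sku}`;
    const quantity = (this.quantities.get(key) ?? 0) + msg.delta;
    this.quantities.set(key, quantity);
    this.applied.add(msg.idempotencyKey);

    this.emit({ key, quantity }); // edge copies update from this event, not from the write path
    return true;
  }

  quantity(cartId: string, sku: string): number {
    return this.quantities.get(`${cartId}:${sku}`) ?? 0;
  }
}
```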

Implementation Roadmap: How to Go CDN-First in 90 Days

Map routes & classify: Critical render routes vs. API vs. assets.

Pick two edge landing spots: e.g., auth + HTML render.

Introduce edge middleware: geo, feature flags, cache keys.

Move read-heavy data to edge stores: menus, i18n, pricing bands.

Turn on HTTP/3 and QUIC across POPs. (HTTP/3 usage is rising rapidly.)

Instrument synthetic checks: per-region TTFB/LCP budgets.

Phase in edge rendering: start with product/category/landing pages.

Add SWR + background revalidation: minimize origin hits.

Harden security at the edge: WAF/bot controls, DDoS protection, modern TLS ciphers; monitor continuously.
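Step one (map and classify routes) and the later phase-in step can share a simple route table. The prefixes, classes, and `classify` helper below are hypothetical examples for a storefront; the longest matching prefix wins, and anything not yet migrated falls back to origin rendering:

```typescript
type RouteClass = "edge-render" | "origin-render" | "api" | "asset";

interface RouteRule {
  prefix: string;
  cls: RouteClass;
}

// Hypothetical storefront route table. Product and category pages are the first
// routes phased onto edge rendering; everything else stays on origin until migrated.
const ROUTES: readonly RouteRule[] = [
  { prefix: "/assets/", cls: "asset" },
  { prefix: "/api/", cls: "api" },
  { prefix: "/products/", cls: "edge-render" },
  { prefix: "/category/", cls: "edge-render" },
  { prefix: "/checkout/", cls: "origin-render" }, // writes and payments stay near the system of record
  { prefix: "/", cls: "origin-render" }, // default
];

// Longest matching prefix wins, so more specific rules override broader ones.
function classify(pathname: string, rules: readonly RouteRule[] = ROUTES): RouteClass {
  let best: RouteRule | undefined;
  for (const rule of rules) {
    if (pathname.startsWith(rule.prefix) && (!best || rule.prefix.length > best.prefix.length)) {
      best = rule;
    }
  }
  return best ? best.cls : "origin-render";
}

for (const p of ["/products/123", "/api/cart", "/checkout/pay", "/about"]) {
  console.log(p, "->", classify(p));
}
```

Phasing in edge rendering then amounts to flipping one entry at a time from `origin-render` to `edge-render` and watching the per-region budgets.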

What’s Next (12–24 Months): Five Predictions

WASM ubiquity for hot paths: Expect broader WASM module support and vendor marketplaces; Fastly and peers are already pushing this.

Protocol defaults: HTTP/3 passes a tipping point, and more traffic shifts to it as browsers and CDNs enable features by default.

Security by default: Post-quantum and origin shielding mature at the edge; defaults roll out network-wide (Cloudflare 2024–2025 trend).

Framework-aware edge rendering: Frameworks bake in edge-first data fetching and RSC streaming for above-the-fold HTML.

Edge-native data layers: Durable objects/KV evolve into regionless consistency models, reducing the “where is my data?” question for 95% of use cases.

Wrap It Up

The future favors edge rendering not because it’s trendy, but because it’s operationally simpler at global scale. A CDN-first posture reduces tail latency, shrinks blast radius, and accelerates delivery of secure features everywhere. Keep origin as your durable core, but let the perimeter do the fast, user-visible work. The teams that standardize edge rendering patterns today will ship faster with fewer incidents tomorrow.

CTA: Want a 30-minute architecture review? Book an Edge & CDN-First Audit: we’ll map your critical routes, recommend the right edge primitives, and give you a 90-day migration plan.

FAQs

Q: How does edge rendering differ from traditional SSR?

A: Traditional SSR centralizes rendering in one or a few regions; edge rendering runs the render on POPs close to users, reducing network hops and improving TTFB. It pairs with HTTP/3, edge KV, and programmable caching to deliver lower tail latency and more consistent performance worldwide.

Q: How can I migrate safely to a CDN-first architecture?

A: Start with read-heavy routes and middleware at the edge, move hot data to KV, enable SWR, and keep origin for writes. Add observability per region and iterate page by page, following a phased 90-day plan that measures TTFB and LCP.

Q: How do I handle authentication at the edge?

A: Use short-lived tokens, cookie signatures, or JWTs, validated statelessly in edge middleware. Keep secrets in vendor key stores and avoid server-side sessions.
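For illustration, here is a minimal signed-token check using HMAC-SHA256. The `body.signature` format, the `Claims` shape, and the `sign`/`verify` helpers are hypothetical, and this is not a JWT implementation (use a vetted library for JWTs). It uses Node’s `node:crypto` so it runs locally; edge runtimes typically expose the Web Crypto API (`crypto.subtle`) for the same job:

```typescript
import { createHmac, timingSafeEqual } from "node:crypto";

// Hypothetical claims carried in the token; exp is epoch seconds.
interface Claims {
  sub: string;
  exp: number;
}

// Token format (assumed for this sketch): base64url(JSON claims) + "." + base64url(HMAC-SHA256).
function mac(body: string, secret: string): Buffer {
  return createHmac("sha256", secret).update(body).digest();
}

function sign(claims: Claims, secret: string): string {
  const body = Buffer.from(JSON.stringify(claims)).toString("base64url");
  return `${body}.${mac(body, secret).toString("base64url")}`;
}

// Returns the claims if the signature is valid and the token has not expired, else null.
function verify(token: string, secret: string, nowSec: number): Claims | null {
  const parts = token.split(".");
  if (parts.length !== 2) return null;
  const [body, sig] = parts;

  const expected = mac(body, secret);
  const given = Buffer.from(sig, "base64url");
  // Constant-time comparison; timingSafeEqual requires equal lengths.
  if (given.length !== expected.length || !timingSafeEqual(given, expected)) return null;

  let parsed: unknown;
  try {
    parsed = JSON.parse(Buffer.from(body, "base64url").toString("utf8"));
  } catch {
    return null;
  }
  const c = parsed as Partial<Claims> | null;
  if (!c || typeof c.sub !== "string" || typeof c.exp !== "number") return null;
  return c.exp > nowSec ? { sub: c.sub, exp: c.exp } : null; // short-lived: reject once expired
}

// Usage: in production the secret comes from the vendor's key store, never from code.
const demoToken = sign({ sub: "user-1", exp: Math.floor(Date.now() / 1000) + 300 }, "dev-only-secret");
console.log(verify(demoToken, "dev-only-secret", Math.floor(Date.now() / 1000))?.sub); // user-1
```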

Q: What about dynamic personalization?

A: Do cohorting and lightweight personalization in middleware, fetch heavy data conditionally, cache fragments, and stream HTML early. Edge KV combined with SWR works well for the shared data.

Q: How does HTTP/3 help edge rendering?

A: QUIC reduces handshake overhead and mitigates head-of-line blocking, improving resilience and speed on lossy mobile networks, which makes it a natural fit for edge rendering. Adoption is growing across the web.

Q: How can I reduce cold starts at the edge?

A: Keep bundles small, prefer WASM for CPU-heavy tasks, and select platforms with cold-start mitigation (e.g., fluid compute; see the vendor docs for guidance).

Q: What data should never move to the edge?

A: Highly sensitive PII that locality rules require to stay in region, strongly consistent counters, and heavy transactional writes. Keep these behind an “edge-read, region-write” policy.

Q: How do DDoS protections fit in?

A: Edge-based WAF and rate limiting absorb attacks before they reach origin. Vendors’ Q1 2025 reports show massive attack volumes mitigated entirely at the edge.
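Rate limiting at the edge is often a token bucket per client key. A minimal sketch, assuming the key is something like a client IP and that state lives in one isolate (so it limits per POP, not globally); `TokenBucket` and `RateLimiter` are hypothetical names:

```typescript
// One bucket per client: holds up to `capacity` tokens, refilled continuously.
class TokenBucket {
  private tokens: number;
  private last: number; // ms timestamp of the last refill

  constructor(private readonly capacity: number, private readonly refillPerSec: number, now: number) {
    this.tokens = capacity; // start full so a new client gets its burst allowance
    this.last = now;
  }

  tryTake(now: number): boolean {
    const elapsedSec = Math.max(0, now - this.last) / 1000;
    this.tokens = Math.min(this.capacity, this.tokens + elapsedSec * this.refillPerSec);
    this.last = now;
    if (this.tokens >= 1) {
      this.tokens -= 1;
      return true;
    }
    return false;
  }
}

// Per-key limiter; state is local to this isolate/POP (an assumption of the sketch).
class RateLimiter {
  private readonly buckets = new Map<string, TokenBucket>();

  constructor(private readonly capacity: number, private readonly refillPerSec: number) {}

  allow(clientKey: string, now: number = Date.now()): boolean {
    let bucket = this.buckets.get(clientKey);
    if (!bucket) {
      bucket = new TokenBucket(this.capacity, this.refillPerSec, now);
      this.buckets.set(clientKey, bucket);
    }
    return bucket.tryTake(now);
  }
}
```

A request that `allow` rejects would typically get a `429 Too Many Requests` response without ever reaching origin.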

Q: How can I measure success?

A: Track per-region TTFB and tail latency, LCP, origin egress reduction, and error budgets, using synthetic checks plus real-user monitoring (RUM). Compare before/after for the same routes.
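One way to turn per-region TTFB and tail latency into a pass/fail signal is a nearest-rank p95 per region checked against a budget. The region names, sample shape, `checkBudgets` helper, and 200 ms budget below are hypothetical; other percentile definitions (e.g., interpolated) give slightly different numbers:

```typescript
// Hypothetical synthetic-check results: TTFB samples in ms, keyed by region.
type Samples = Record<string, number[]>;

interface RegionReport {
  region: string;
  p50: number;
  p95: number;
  withinBudget: boolean;
}

// Nearest-rank percentile: the smallest sample with at least p% of samples at or below it.
function percentile(values: readonly number[], p: number): number {
  if (values.length === 0) throw new Error("no samples");
  const sorted = values.slice().sort((a, b) => a - b);
  const rank = Math.ceil((p * sorted.length) / 100); // multiply first to limit float drift
  return sorted[Math.max(0, rank - 1)];
}

// Compare each region's p95 TTFB against one budget; the tail, not the median, decides pass/fail.
function checkBudgets(samples: Samples, p95BudgetMs: number): RegionReport[] {
  return Object.keys(samples)
    .sort()
    .map((region) => {
      const p50 = percentile(samples[region], 50);
      const p95 = percentile(samples[region], 95);
      return { region, p50, p95, withinBudget: p95 <= p95BudgetMs };
    });
}

// Usage with made-up numbers and a hypothetical 200 ms budget.
for (const r of checkBudgets({ "ap-southeast": [120, 180, 950], "eu-west": [90, 100, 110] }, 200)) {
  console.log(r.region, r.p50, r.p95, r.withinBudget ? "ok" : "over budget");
}
```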