Multi Agent vs Single Chatbot for Enterprises (US/UK/EU)

In 2026, single chatbots are still a solid choice if your assistant mainly answers static FAQs, does light routing, and touches only one or two systems. Multi-agent AI systems are the better option once your assistant has to investigate, reason, and take actions across multiple tools, backends, and regulated workflows such as banking, healthcare, or high-ticket B2B sales.

Put simply: a single chatbot for simple, low-risk journeys; multi-agent AI for complex, cross-system, regulated work.

Many enterprises in the United States, the United Kingdom and Germany already run at least one customer-facing chatbot, but a large share of those bots were designed as single “all-purpose brains” bolted onto legacy systems. As generative and agentic AI systems mature, the question has shifted from “Should we add a bot?” to “Do we stay with a single chatbot or move to a multi-agent architecture?”

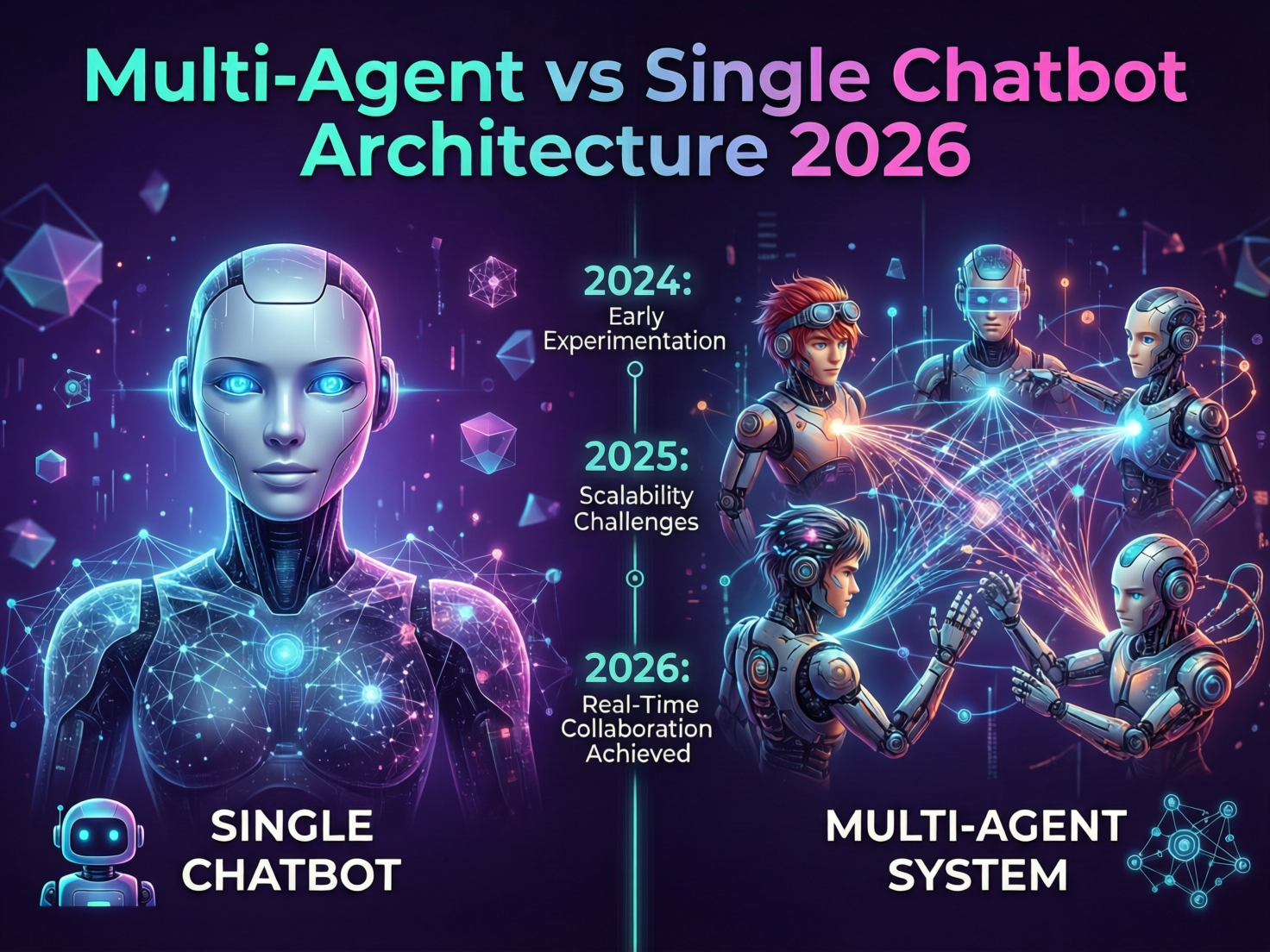

Analyst surveys suggest that by late 2024, around 60% of AI-investing organizations had deployed generative AI, and roughly a quarter were already scaling AI agents, with almost 40% experimenting. Against that backdrop, the multi-agent vs single-chatbot decision has become an architectural choice, not a hype debate.

In short, single chatbots are fine for simple FAQ-style flows, while multi-agent AI wins whenever tasks span multiple tools, systems, or regulated workflows. If your chatbot mostly answers static FAQs, stays in one knowledge base, and does light routing, a single-agent design is usually enough. If it needs to investigate, reason, and act across systems, you’re firmly in multi-agent territory.

One-sentence answer for busy product leaders

If your assistant mainly answers predictable FAQs and does light triage, optimise your single chatbot; if it must coordinate complex tasks across CRMs, ERPs, payments, and compliance checks, invest in a multi-agent AI system.

When a single chatbot is still “good enough”

A single chatbot (a single agent) is usually enough when:

You handle low to moderate ticket volumes with simple flows (think a regional SaaS company in Austin answering billing questions).

The bot mainly routes users or surfaces knowledge base content.

You only have one or two integrations (e.g., CRM + helpdesk).

You operate in lower-risk industries where a wrong answer is annoying, not catastrophic (e.g., basic retail, non-regulated education).

In these cases, investing in prompt engineering, retrieval-augmented generation (RAG), and analytics can deliver strong ROI without the overhead of full AI agent orchestration.

When to start evaluating multi-agent AI instead

You should actively evaluate multi-agent AI when at least one of these is true:

Your journeys hit multiple backends (CRM, billing, ticketing, KYC, payment rails)

There are complex, multi-step workflows (loan applications, B2B onboarding, claims)

Every interaction must pass compliance or policy checks (GDPR/DSGVO, Open Banking, internal risk rules)

You sell high-ticket products where a small uplift in conversion or retention is worth serious money.

Concrete triggers:

A US SaaS scale-up in San Francisco wants its “enterprise AI copilot” to coordinate trials, seats, contracts, and renewals end-to-end.

A UK bank under Financial Conduct Authority rules needs a virtual assistant that can orchestrate Open Banking flows and document every decision.

A German machinery manufacturer near Munich wants a multilingual conversational multi-agent system that can configure complex products, quote, and schedule maintenance in one experience.

If one or two of these examples sound uncomfortably familiar, you’re already in multi-agent territory.

What Is a Multi-Agent AI System vs a Single Chatbot?

A single chatbot is one model (one “brain”) handling everything through a single prompt chain. A multi-agent AI system is a team of specialised AI agents (for example a planner, a researcher, a tool-caller, and a supervisor) coordinated by an orchestrator.

Single chatbot and single-agent systems explained

In 2026, a typical single chatbot for enterprises looks like:

One large language model (LLM)

Wrapped in a system and assistant prompt template

Optionally connected to RAG and a few tools (e.g., CRM lookup, ticket creation)

This architecture is simple and cheap to operate, which is why it still powers a large share of the global chatbot market, estimated in the low- to mid-single-digit billions of dollars in 2024 and growing more than 20% annually.

Limitations show up when the bot must:

Juggle multiple goals (support + sales + onboarding).

Maintain context across long workflows and channels.

Reason about tools (which system to call, in what order) instead of just calling one.

Multi-agent AI systems and agentic orchestration

A multi-agent AI system decomposes the work:

A Planner agent breaks a user’s goal into steps.

Specialist agents (support, billing, KYC, technical, sales) handle specific sub-tasks.

A Tool router chooses which API or system to call.

A Supervisor (or critic) agent checks outputs, enforces policies, and escalates to humans.

Research frameworks such as CAMEL show how “societies of agents” can collaborate on complex tasks that would be too brittle for a single prompt chain.

The orchestrator coordinates these agents, manages memory, and applies guardrails. That coordination layer is where most of an agentic AI system's value comes from.
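To make that division of labour concrete, here is a minimal Python sketch of the planner / specialist / supervisor pattern. Every name in it (`plan`, `SPECIALISTS`, `supervise`, the 100-unit refund limit) is hypothetical, the "agents" are plain functions standing in for LLM calls, and tool routing is left out for brevity; frameworks such as LangGraph or AutoGen provide their own abstractions for the same idea.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class Step:
    agent: str                                   # which specialist handles this sub-task
    task: str                                    # description of the sub-task
    params: dict = field(default_factory=dict)

@dataclass
class Result:
    agent: str
    output: dict
    approved: bool
    reason: str = ""

def plan(goal: str) -> list[Step]:
    """Hypothetical planner: a real system would ask an LLM to decompose the goal."""
    steps = [Step("billing", "look up latest invoice")]
    if "refund" in goal.lower():
        steps.append(Step("billing", "issue refund", {"amount": 120.0}))
    steps.append(Step("support", "draft reply to customer"))
    return steps

# Specialist agents, stubbed as functions that return structured output.
SPECIALISTS: dict[str, Callable[[Step], dict]] = {
    "billing": lambda s: {"task": s.task, **s.params},
    "support": lambda s: {"task": s.task, "text": "We have reviewed your account."},
}

REFUND_LIMIT = 100.0  # assumed policy: larger refunds need a human

def supervise(step: Step, output: dict) -> tuple[bool, str]:
    """Supervisor agent: enforce policy before any action is accepted."""
    if step.task == "issue refund" and output.get("amount", 0) > REFUND_LIMIT:
        return False, "refund above limit, escalate to human"
    return True, ""

def orchestrate(goal: str) -> list[Result]:
    results = []
    for step in plan(goal):
        output = SPECIALISTS[step.agent](step)
        ok, reason = supervise(step, output)
        results.append(Result(step.agent, output, ok, reason))
        if not ok:
            break  # stop the workflow and hand off instead of continuing
    return results

if __name__ == "__main__":
    for r in orchestrate("Customer wants a refund for a double charge"):
        print(r.agent, r.approved, r.reason)
```

The supervisor sits between every specialist output and the next step, so a policy violation halts the workflow rather than being discovered afterwards.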

Key differences: architecture, autonomy, context, and cost

| Dimension | Single Chatbot (Single Agent) | Multi-Agent AI System |
|---|---|---|
| Architecture | One LLM + prompt + maybe RAG/tools | Orchestrator + multiple specialised agents + tools |
| Autonomy | Follows one chain; limited self-correction | Can plan, debate, verify, and re-route tasks |
| Context | Short-to-medium conversations | Long-running workflows with shared memory |
| Robustness | Brittle to prompt changes, unclear failures | More robust via redundancy and supervision |
| Cost | Lower infra/ops cost per request | Higher per request, but can unlock more automation |
| Failure modes | Hallucinated answers, tool misuse | Chain-of-agents errors, coordination bugs, higher spend |

This is the practical difference between an enterprise AI copilot and a chatbot: a multi-agent architecture is what lets the “copilot” actually do work across systems rather than just chat.

Architecture Deep-Dive: Single Agent vs Multi-Agent System

Choose single-agent when you have one dominant task and simple tools; choose multi-agent when you need parallel skills, multiple tools, or long-running workflows.

Reference architectures for single chatbots in 2026

Most US and UK enterprise chatbots today look like this:

Frontend: web/mobile widget or in-product copilot

Core: LLM + system prompt + conversation history

Knowledge: vector search / RAG over docs and FAQs

Tools: 1–3 functions (ticket creation, order lookup, account changes)

This maps neatly to the customer-facing sites your team already runs, whether they were built with Mak It Solutions web development services or similar partners.
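As a rough illustration of that single-agent shape, the sketch below wires one "model", one system prompt, conversation history, naive keyword retrieval standing in for vector search, and a small tool table. `fake_llm`, the FAQ entries, and `lookup_order` are hypothetical placeholders; in production the model call would go to your LLM provider and retrieval to a vector store.

```python
from dataclasses import dataclass, field

SYSTEM_PROMPT = "You are a support assistant. Answer from the provided context."

FAQ_DOCS = {
    "billing": "Invoices are issued on the 1st of each month.",
    "password": "Reset your password from the login page.",
}

def retrieve(query: str) -> list[str]:
    """Naive keyword retrieval standing in for vector search / RAG."""
    q = query.lower()
    return [text for key, text in FAQ_DOCS.items() if key in q]

def lookup_order(order_id: str) -> str:
    return f"Order {order_id} is in transit."  # hypothetical order API

TOOLS = {"lookup_order": lookup_order}

def fake_llm(system: str, history: list[dict], context: list[str]) -> dict:
    """Placeholder for a real LLM call; returns either a tool call or an answer."""
    last = history[-1]["content"].lower()
    if "order" in last:
        order_id = last.split()[-1].strip("?#")
        return {"tool": "lookup_order", "args": {"order_id": order_id}}
    return {"answer": context[0] if context else "Let me connect you to an agent."}

@dataclass
class SingleChatbot:
    history: list[dict] = field(default_factory=list)

    def reply(self, user_text: str) -> str:
        self.history.append({"role": "user", "content": user_text})
        decision = fake_llm(SYSTEM_PROMPT, self.history, retrieve(user_text))
        if "tool" in decision:  # at most one tool call per turn, no multi-step planning
            answer = TOOLS[decision["tool"]](**decision["args"])
        else:
            answer = decision["answer"]
        self.history.append({"role": "assistant", "content": answer})
        return answer
```

Everything runs through one decision function per turn, which is exactly why this design stays cheap and easy to reason about until a journey needs several coordinated steps.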

Single chatbots work brilliantly for:

Marketing and simple support

Status checks (“Where is my order?”)

Single-step changes

But as soon as you need multi-step reasoning (e.g., “investigate this billing anomaly and prepare a summary for finance”), the prompt grows into a tangle of rules and special cases that is hard to debug and even harder to govern.

Reference architectures for multi-agent systems

A 2026 multi-agent LLM architecture for enterprises typically includes:

Orchestration layer (e.g., LangChain, LangGraph, AutoGen, CrewAI)

Planner agent that interprets the user goal and creates a task graph

Domain agents for support, sales, risk, operations

Tooling layer (HTTP APIs, DB queries, internal services)

Memory and state (short-term conversation memory + long-term profiles)

Observability (traces, metrics, replay, cost dashboards)
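The planner's "task graph" can be as simple as a dependency map. This sketch uses Python's standard-library `graphlib.TopologicalSorter` (Python 3.9+) to work out which steps of a hypothetical B2B onboarding plan can run in parallel; the task names and dependencies are invented for illustration.

```python
from graphlib import TopologicalSorter

# Hypothetical plan produced by a planner agent: task -> set of prerequisite tasks.
TASK_GRAPH = {
    "verify_company": set(),
    "check_credit": {"verify_company"},
    "create_crm_account": {"verify_company"},
    "draft_contract": {"check_credit", "create_crm_account"},
    "schedule_kickoff": {"draft_contract"},
}

def execution_waves(graph: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into waves; tasks in the same wave have no mutual dependencies."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the planner produced a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for deterministic output
        waves.append(ready)
        sorter.done(*ready)  # a real orchestrator would mark each task done as its agent finishes
    return waves

if __name__ == "__main__":
    for i, wave in enumerate(execution_waves(TASK_GRAPH), start=1):
        print(i, wave)
```

Validating the plan as a graph before executing it also gives the orchestrator a cheap sanity check: a cyclic plan is rejected up front instead of looping at runtime.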

Major clouds now offer native multi-agent features; for example, Microsoft Azure’s agent services build on its Agent Framework. This lets teams blend open-source frameworks with managed hosting, logging, and policy controls.

Decision criteria

Signals that your single agent is failing:

Brittle prompts that break with small spec changes.

Hallucinated actions: tickets created in the wrong system, refunds issued incorrectly.

No clear escalation path when the bot is uncertain.

No human-in-the-loop for high-risk sectors like healthcare or banking.

If your bot in New York can’t reliably hand off complex mortgage cases to humans with full context, you’re due for a multi-agent redesign.
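One way to give the bot a clear escalation path is to make handoff an explicit, structured step. In this sketch the confidence score, the 0.7 threshold, and the `HIGH_RISK_INTENTS` set are all assumptions; the point is that the human receives a full context packet rather than a bare transcript link.

```python
from dataclasses import dataclass, field
from typing import Optional

HIGH_RISK_INTENTS = {"mortgage_application", "large_refund"}  # assumed policy
CONFIDENCE_THRESHOLD = 0.7  # assumed; tune per journey

@dataclass
class HandoffPacket:
    customer_id: str
    intent: str
    reason: str
    transcript: list[str]
    collected_fields: dict = field(default_factory=dict)

def route(customer_id: str, intent: str, confidence: float,
          transcript: list[str], collected: dict) -> Optional[HandoffPacket]:
    """Return a handoff packet if a human should take over, else None."""
    if intent in HIGH_RISK_INTENTS:
        reason = "high-risk intent requires human review"
    elif confidence < CONFIDENCE_THRESHOLD:
        reason = f"low confidence ({confidence:.2f})"
    else:
        return None  # the bot keeps the conversation
    # Copy the context so the human sees exactly what the bot saw at handoff time.
    return HandoffPacket(customer_id, intent, reason, list(transcript), dict(collected))
```

Checking risk before confidence is a deliberate choice here: a mortgage case goes to a human even when the model is confident.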

Use Cases & ROI: Multi Agent AI vs Single Chatbot in the Real World

Multi-agent AI usually shows ROI when it either automates a whole journey end-to-end or significantly increases conversion or deflection compared with a single bot.

Customer support & success

For US SaaS and e-commerce brands in hubs like San Francisco, multi-agent systems can:

Triage tickets, pull account and billing data, propose resolutions, and only escalate exceptions.

Run proactive retention workflows, coordinating offers with CRM and billing tools.

Deflect a larger share of tickets while improving CSAT.

Surveys consistently list customer-facing gen-AI use cases among the top three value drivers for enterprises adopting the technology.

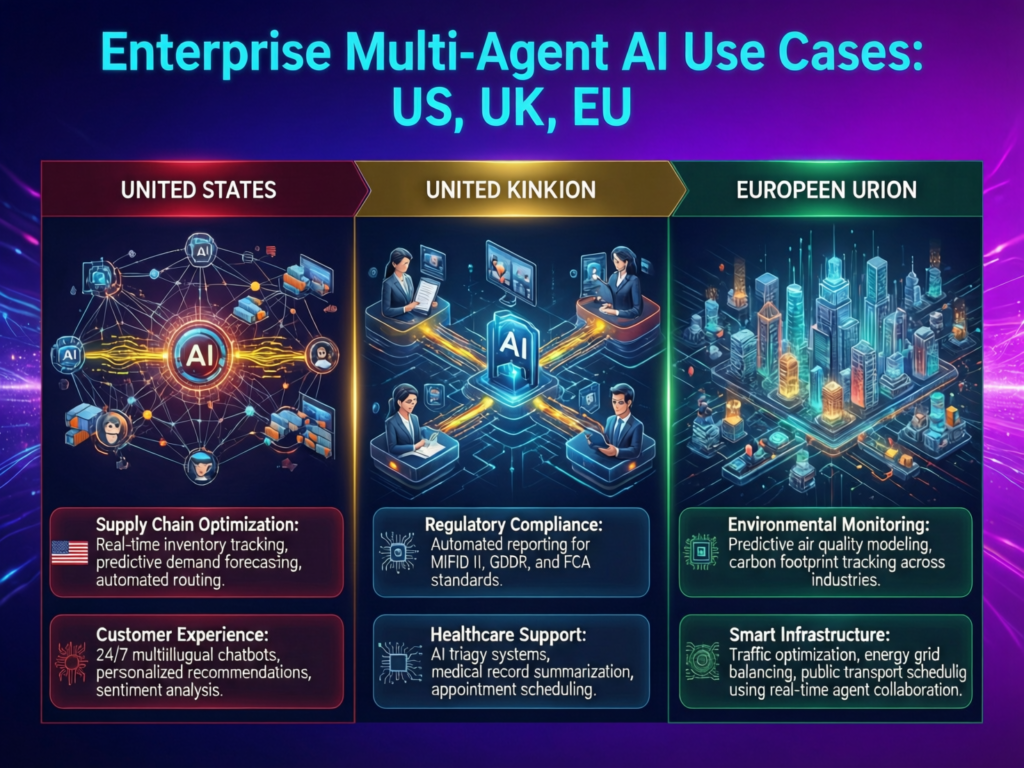

Regulated industries

In UK and EU banking, multi-agent chatbots can orchestrate Open Banking / PSD2 journeys:

One agent handles KYC; another handles transaction analytics; another manages secure messaging.

A supervisor agent ensures flows comply with DSGVO/GDPR and UK data rules.
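A supervisor agent's compliance checks are ultimately ordinary code. The sketch below shows a hypothetical data-residency rule: before another agent's tool call runs, the supervisor checks that the tool's hosting region is on an allow-list for the customer's jurisdiction. The region names and the mapping are illustrative assumptions about an internal policy, not a statement of what GDPR/DSGVO or UK rules require.

```python
from dataclasses import dataclass

# Hypothetical internal policy: which hosting regions each jurisdiction's personal data may reach.
ALLOWED_REGIONS = {
    "EU": {"eu-west", "eu-central"},
    "UK": {"uk-south", "eu-west"},
}

@dataclass(frozen=True)
class ToolCall:
    agent: str
    tool: str
    region: str          # where the tool / API processes data
    contains_pii: bool

def supervisor_check(call: ToolCall, customer_jurisdiction: str) -> tuple[bool, str]:
    """Block tool calls that would send personal data outside permitted regions."""
    if not call.contains_pii:
        return True, "no personal data"
    allowed = ALLOWED_REGIONS.get(customer_jurisdiction)
    if allowed is None:
        return False, f"no residency policy for {customer_jurisdiction}"  # fail closed
    if call.region not in allowed:
        return False, f"{call.tool} in {call.region} not allowed for {customer_jurisdiction}"
    return True, "ok"
```

The check fails closed: a jurisdiction without an explicit policy is blocked rather than waved through, and each returned reason can be written to the audit log.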

For healthcare (think NHS-style services or HIPAA-regulated US providers), multi-agent AI can:

Triage symptoms, schedule appointments, check benefits, and provide prep instructions.

Route anything risky to clinicians while logging decisions for auditors under HIPAA-like standards.

Automotive, manufacturing, and dealerships in US/Europe

For dealerships and manufacturers in Berlin or Frankfurt:

A sales agent configures vehicles or machinery variants.

A finance agent simulates lease and financing options.

A service agent schedules maintenance and orders parts.

Together, this conversational multi-agent system can lift lead qualification rates and reduce time-to-quote compared with a single chatbot that just “answers questions”.
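Part of the finance agent's job is deterministic arithmetic that is better done in code than by the LLM. The standard annuity formula gives the monthly payment for a loan of principal P at monthly rate r over n months as M = P·r / (1 − (1 + r)^−n). The sketch exposes it as a tool the agent could call; `compare_options` and its output shape are assumptions, and a lease with a residual (balloon) value would need an adjusted formula.

```python
def monthly_payment(principal: float, annual_rate: float, months: int) -> float:
    """Standard annuity formula: M = P*r / (1 - (1 + r)**-n), with r the monthly rate."""
    if months <= 0:
        raise ValueError("months must be positive")
    r = annual_rate / 12
    if r == 0:
        return principal / months  # zero-interest financing
    return principal * r / (1 - (1 + r) ** -months)

def compare_options(principal: float, options: dict[str, tuple[float, int]]) -> dict[str, dict]:
    """Hypothetical tool for the finance agent: payment and total cost per option."""
    out = {}
    for name, (annual_rate, months) in options.items():
        m = monthly_payment(principal, annual_rate, months)
        out[name] = {"monthly": round(m, 2), "total": round(m * months, 2)}
    return out
```

The agent then explains the numbers to the customer, but the numbers themselves come from a function that can be unit-tested and audited.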

Platforms, Frameworks & Stacks

In 2026, most teams blend an LLM provider, a multi-agent framework, and observability/governance tools rather than buying one black-box platform.

Open-source frameworks: LangChain, LangGraph, AutoGen, CrewAI

For build-leaning teams (especially in US SaaS or EU fintech):

Pros: flexibility, community recipes, no lock-in, easier to run on your cloud.

Cons: you own reliability, upgrades, and sometimes security/compliance layers.

Open-source multi-agent frameworks have grown rapidly, with research communities like CAMEL showing new patterns for collaboration and world simulation.

Cloud-native stacks

Cloud-aligned buyers often lean on:

Agent services on Google’s Gemini API

Multi-agent workflows on Azure AI

Agent toolkits on AWS

Benefits include:

Regional hosting for the US, UK, EU (e.g., Germany, France, Netherlands, Switzerland).

Easier integration with existing data lakes, monitoring, and security tooling.

Platform comparison factors: pricing, latency, data residency, ecosystem

When comparing platforms for a US enterprise, a London-based bank regulated by the Financial Conduct Authority, or a German bank under BaFin, look at:

Pricing model (per token, per request, per agent).

Latency for key regions and channels (web, mobile, voice).

Data residency and encryption for GDPR/DSGVO, UK data rules, HIPAA, PCI DSS.

Ecosystem maturity: SDKs, monitoring, support, partner network.

For many teams, the “best multi agent vs single chatbot solution for US enterprises” ends up being a cloud-native stack plus open-source orchestration on top.
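Per-token pricing interacts with architecture, because a multi-agent flow makes several model calls per user turn. The back-of-the-envelope sketch below makes that visible. All prices and token counts are placeholder assumptions, not any vendor's actual rates.

```python
from dataclasses import dataclass

@dataclass
class ModelCall:
    input_tokens: int
    output_tokens: int

def call_cost(call: ModelCall, usd_per_m_in: float, usd_per_m_out: float) -> float:
    """Cost of one call under per-token pricing (prices quoted per million tokens)."""
    return (call.input_tokens * usd_per_m_in + call.output_tokens * usd_per_m_out) / 1_000_000

def conversation_cost(calls: list[ModelCall], usd_per_m_in: float, usd_per_m_out: float) -> float:
    return sum(call_cost(c, usd_per_m_in, usd_per_m_out) for c in calls)

# Placeholder prices and token counts, for illustration only.
PRICE_IN, PRICE_OUT = 2.0, 8.0
single = [ModelCall(3_000, 400)]                          # one call per turn
multi = [ModelCall(2_000, 300),                           # planner
         ModelCall(3_000, 400), ModelCall(3_000, 400),    # two specialists
         ModelCall(1_500, 100)]                           # supervisor

if __name__ == "__main__":
    s = conversation_cost(single, PRICE_IN, PRICE_OUT)
    m = conversation_cost(multi, PRICE_IN, PRICE_OUT)
    print(f"single ${s:.4f}  multi ${m:.4f}  ratio {m / s:.1f}x")
```

With these made-up numbers the multi-agent turn costs roughly three times as much; whether that is acceptable depends on how much more of the journey it completes without a human.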

Risk, Security & Compliance

Multi-agent systems expand the attack surface (more agents, more tools) but also give you better controls (roles, policies, audit logs) when designed well.

New risks with multi-agent orchestration

Key risks to manage:

Tool misuse: agents calling the wrong systems or with wrong parameters.

Chain-of-agents errors: subtle bugs that only appear in long workflows.

Escalating costs when agents endlessly debate or re-run tools.

Prompt injection via tools (e.g., a compromised knowledge base or API).

Mitigation patterns:

Strict role separation (planner vs executor vs critic).

Max-step and cost budgets per conversation.

Policy-aware tools that validate actions server-side.
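The last two mitigations can be enforced in a few lines of orchestration code. This sketch assumes the orchestrator reports every agent step and its cost; `ConversationBudget`, the default limits, and the refund rule are hypothetical.

```python
from dataclasses import dataclass

class BudgetExceeded(RuntimeError):
    pass

@dataclass
class ConversationBudget:
    max_steps: int = 12           # assumed cap on agent steps per conversation
    max_cost_usd: float = 0.50    # assumed cap on model/tool spend per conversation
    steps: int = 0
    cost_usd: float = 0.0

    def charge(self, step_cost_usd: float) -> None:
        """Call once per agent step; stops runaway debates or tool loops."""
        self.steps += 1
        self.cost_usd += step_cost_usd
        if self.steps > self.max_steps:
            raise BudgetExceeded(f"step limit {self.max_steps} exceeded")
        if self.cost_usd > self.max_cost_usd:
            raise BudgetExceeded(f"cost limit ${self.max_cost_usd:.2f} exceeded")

def issue_refund(order_total: float, amount: float, already_refunded: float) -> float:
    """Policy-aware tool: validates server-side, regardless of what the agent asked for."""
    if amount <= 0:
        raise ValueError("refund must be positive")
    if already_refunded + amount > order_total:
        raise ValueError("refund exceeds order total")
    return already_refunded + amount  # new refunded total
```

Raising an exception (rather than returning a flag) means an agent cannot simply ignore the budget; the orchestrator has to catch it and decide whether to summarise, escalate, or stop.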

Security & compliance baselines for US, UK, and EU

Baseline expectations:

GDPR/DSGVO for EU users.

UK data protection rules and UK-GDPR guidance from the ICO.

HIPAA for US healthcare data.

PCI DSS for card data.

SOC 2 and similar controls for third-party vendors.

Regulators increasingly expect evidence that your agentic AI systems:

Keep sensitive data inside the right regions.

Provide access controls, logging, and monitoring.

Have clear incident response and model risk management.

Designing auditability and observability into your agent stack

To satisfy auditors and internal risk committees:

Capture traces of every agent step (inputs, tools, outputs).

Provide replay so incidents can be reconstructed.

Run continuous red-teaming and policy testing.

Integrate with SIEM and data-loss-prevention tools.

Mak It Solutions’ business intelligence services and SEO & analytics expertise can help you turn this telemetry into dashboards for CFOs, CISOs, and regulators.
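A minimal way to capture agent traces in tamper-evident form is to hash-chain each record to the previous one, so that any later edit breaks verification. This sketch uses only Python's standard library and keeps records in memory; that is a simplifying assumption, since a production system would typically write to append-only storage and forward records to the SIEM.

```python
import copy
import hashlib
import json
from dataclasses import dataclass, field
from typing import Optional

def _digest(body: dict) -> str:
    # Canonical JSON so the same record always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

@dataclass
class TraceLog:
    records: list[dict] = field(default_factory=list)

    def append(self, agent: str, tool: str, inputs: dict, outputs: dict) -> dict:
        """Record one agent step, linked to the previous record's hash."""
        prev_hash = self.records[-1]["hash"] if self.records else "0" * 64
        body = {"seq": len(self.records), "agent": agent, "tool": tool,
                "inputs": copy.deepcopy(inputs), "outputs": copy.deepcopy(outputs),
                "prev_hash": prev_hash}
        record = {**body, "hash": _digest(body)}
        self.records.append(record)
        return record

    def verify(self) -> bool:
        """Recompute every hash; returns False if any record was altered or reordered."""
        prev_hash = "0" * 64
        for i, rec in enumerate(self.records):
            body = {k: v for k, v in rec.items() if k != "hash"}
            if body["seq"] != i or body["prev_hash"] != prev_hash or _digest(body) != rec["hash"]:
                return False
            prev_hash = rec["hash"]
        return True

    def replay(self, agent: Optional[str] = None) -> list[dict]:
        """Return steps in order, optionally for one agent, to reconstruct an incident."""
        return [r for r in self.records if agent is None or r["agent"] == agent]
```

Hash-chaining does not stop someone from deleting the whole log, so it complements, rather than replaces, access controls and retention policies.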

How to Decide: Multi Agent AI Roadmap vs Single Chatbot Upgrade

Start with your top three journeys, score their complexity and risk, then choose one of three roadmaps: “optimise single chatbot”, “pilot multi-agent in one journey”, or “multi-agent-first”.

Quick reminder: nothing here is legal, financial, or regulatory advice. Always involve your own counsel and compliance teams before production deployments.

Assess your current chatbot’s limitations

Run a quick gap analysis across:

Containment rate and topics that still escalate.

Latency and drop-offs for key journeys.

Accuracy and compliance in high-risk flows.

Integration depth (read-only vs read/write; how many systems).

Known regulatory pain (audit findings, breaches, complaints).

If most pain is around FAQ quality and search, a better single chatbot plus RAG may be enough.

Map journeys where multi-agent AI can win

Look for journeys with:

3+ systems involved (e.g., CRM, billing, ticketing, risk)

Multiple roles or approvals.

Clear monetary value per completed journey.

Examples:

Payment disputes on US card transactions.

Loan applications for UK banks.

Claims handling for German insurers.

Cross-border support across the EU, where language and regulation vary.

This is where “multi agent banking chatbot solutions UK” and “DSGVO konforme Multi Agent AI Plattform für Banken” (“GDPR-compliant multi-agent AI platform for banks”) stop being buzzwords and become real architectural decisions.
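The journey-scoring step can be kept deliberately simple. The fields mirror the criteria listed above, but the weights, thresholds, and the mapping to the three roadmap options are illustrative assumptions to adapt with your own risk and finance teams; what matters is scoring every candidate journey the same way.

```python
from dataclasses import dataclass

@dataclass
class Journey:
    name: str
    systems: int            # distinct backends touched (CRM, billing, KYC...)
    approvals: int          # human roles or sign-offs involved
    regulated: bool         # subject to GDPR/DSGVO, FCA, HIPAA, PCI DSS, etc.
    value_per_case: float   # estimated value of one completed journey

def score(j: Journey) -> int:
    """Crude complexity/risk/value score; weights are assumptions."""
    s = 0
    s += 2 if j.systems >= 3 else 0
    s += 1 if j.approvals >= 2 else 0
    s += 2 if j.regulated else 0
    s += 1 if j.value_per_case >= 500 else 0
    return s

def recommend(journeys: list[Journey]) -> str:
    """Map the scored journeys to one of the three roadmap options."""
    if not journeys:
        raise ValueError("score at least one journey")
    scores = sorted((score(j) for j in journeys), reverse=True)
    if scores[0] <= 2:
        return "optimise single chatbot"
    if sum(1 for s in scores if s >= 4) >= 2:
        return "multi-agent-first"
    return "pilot multi-agent in one journey"
```

Writing the rubric down as code forces the team to agree on explicit thresholds, which is also useful evidence when the decision is reviewed later.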

Build the 6–12 month roadmap and success metrics

Design a pragmatic roadmap:

Pilot one journey with multi-agent AI (e.g., disputes, onboarding).

Define KPIs: conversion, CSAT, average handle time, revenue per session.

Set governance milestones: risk review, compliance sign-off, post-incident playbooks.

From there, expand to additional journeys once the pilot proves its ROI. Mak It Solutions can support everything from architecture workshops to full implementation, including modern mobile app development when chat spans web and apps.

Implementation Patterns & Change Management

You don’t have to “big bang” your way from one chatbot to a complex multi-agent mesh.

Operating models

Common patterns in US and European enterprises:

AI Center of Excellence (CoE) owning standards and shared tooling.

A platform team providing a multi-agent substrate used by product squads.

Product-led ownership in smaller firms, with one squad running the whole stack.

In London, a bank might keep agent orchestration under a central platform team to satisfy FCA-style oversight, while a SaaS firm in France may let individual product teams own their assistants.

Rollout patterns

Migration playbook ideas:

Add sidecar agents that quietly observe and propose actions while the old bot stays in charge.

Run A/B tests between single-agent and multi-agent flows.

Gradually deprecate old intents as multi-agent journeys reach target KPIs.

Earlier work you’ve done, such as improving rendering and performance using ideas from Server-Side Rendering vs Static Generation, helps here, because the same discipline applies to AI traffic and SLAs.
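For the sidecar and A/B steps, a common technique is deterministic bucketing: hash a stable user ID so each customer consistently lands in the same flow across sessions. The 10% default share, the salt, and the flow names are assumptions, and the bot arguments are any callables that take a message and return a reply.

```python
import hashlib
from typing import Callable

def bucket(user_id: str, salt: str = "mas-pilot-1") -> float:
    """Map a user ID to a stable number in [0, 1) using SHA-256."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode()).digest()
    return int.from_bytes(digest[:8], "big") / 2**64

def choose_flow(user_id: str, multi_agent_share: float = 0.10) -> str:
    """Send a fixed share of users to the multi-agent flow; the rest stay on the legacy bot."""
    return "multi_agent" if bucket(user_id) < multi_agent_share else "single_agent"

def handle(user_id: str, message: str, legacy_bot: Callable[[str], str],
           multi_agent: Callable[[str], str], shadow_log: list) -> str:
    if choose_flow(user_id) == "multi_agent":
        return multi_agent(message)
    answer = legacy_bot(message)
    # Sidecar mode: the multi-agent system proposes, but the legacy bot stays in charge.
    shadow_log.append({"user": user_id, "legacy": answer, "proposal": multi_agent(message)})
    return answer
```

Changing the salt reshuffles users for a new experiment, and running the sidecar on every legacy conversation roughly doubles model spend, so many teams sample the shadow traffic as well.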

Measuring and communicating value to execs and regulators

For execs and regulators, focus on:

Outcome dashboards: resolved cases, revenue, churn reduction.

Risk metrics: incidents, policy violations caught by supervisor agents.

Evidence binders for BaFin/FCA-style committees, built from BI and log data.

Concluding Remarks

Stay with a single chatbot if your use cases are simple FAQs, low-risk, and tied to one or two systems.

Move to multi-agent AI when journeys are complex, regulated, or cross-system, and when the upside in revenue, savings, or compliance justifies the extra complexity.

Start with one high-value journey, prove ROI, and expand from there.

Recommended next steps for US, UK and German teams

US SaaS & retail: pick one journey (billing disputes, B2B onboarding) and run a 12-week multi-agent pilot.

UK and wider EU banking/fintech: start with an Open Banking or claims journey, designed with GDPR/DSGVO and UK-GDPR baked in.

German manufacturing & automotive: focus on configuration + quote + service flows, with strong language support for DACH and wider Europe.

Mak It Solutions can help you evaluate, design, and implement the right path, whether that’s a sharper single chatbot for 2026 or a staged move into orchestrated multi-agent AI.

If you’re unsure whether to double down on your existing chatbot or commit to a multi-agent roadmap, you don’t have to guess. Share your top three journeys and current stack with the Mak It Solutions team, and we’ll map a practical 6–12 month plan covering architecture, risk, and ROI. Prefer something hands-on? Book a discovery workshop and let us prototype a multi-agent pilot that fits your US, UK, or EU compliance reality. Or simply contact us to start with a lightweight architecture review.

FAQs

Q : Is multi-agent AI overkill for small customer support teams with low ticket volume?

A : Often yes. If your team handles a few hundred tickets per month and most queries are simple (password resets, basic billing, “where is my order?”), a well-designed single chatbot with good RAG and analytics is usually enough. Multi-agent AI makes more sense when you need to coordinate several systems, automate whole cases, or operate in regulated industries. You can always start with a strong single-agent bot and add multi-agent capabilities later as volumes and complexity grow.

Q : Can you retrofit an existing single chatbot into a multi-agent system, or do you have to rebuild from scratch?

A : You rarely need a full rebuild. A common pattern is to keep your existing frontend and core intent structure, then introduce a multi-agent orchestrator behind the scenes. Some intents still go to the original single agent; others route to new agents (e.g., “claims agent”, “KYC agent”). Over time, more of the heavy lifting moves into the multi-agent layer, and the old bot becomes just one agent among many or is phased out gracefully.
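The routing layer in that pattern can start as little more than a lookup table in front of the old bot. In this sketch the intent names and agent functions are hypothetical stand-ins; intents not yet migrated fall through to the legacy bot, and migrating a journey means adding one entry to the table.

```python
from typing import Callable

Handler = Callable[[str], str]

def legacy_bot(message: str) -> str:
    return f"[legacy] {message}"  # stand-in for the existing single chatbot

def claims_agent(message: str) -> str:
    return f"[claims-agent] {message}"  # hypothetical new specialist

def kyc_agent(message: str) -> str:
    return f"[kyc-agent] {message}"  # hypothetical new specialist

# Intents migrated to the multi-agent layer so far; everything else stays on the old bot.
MIGRATED: dict[str, Handler] = {
    "file_claim": claims_agent,
    "verify_identity": kyc_agent,
}

def route_intent(intent: str, message: str) -> str:
    handler = MIGRATED.get(intent, legacy_bot)
    return handler(message)
```

Because the fallback is the existing bot, rolling back a migrated intent is as simple as removing its entry.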

Q : How do multi-agent AI systems impact latency and user experience compared to a single chatbot?

A : Multi-agent systems can be slower per request because multiple agents may plan, debate, and call tools. However, they often reduce overall time-to-resolution by completing more of the journey in one session. Good orchestration frameworks support parallel tool calls, streaming responses, and pragmatic limits on the number of agent steps. For high-value cases (loans, disputes, B2B sales), users usually accept slight latency increases in exchange for fewer escalations and call-backs.
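Parallel tool calls are the main lever on that latency. The asyncio sketch below simulates three independent lookups with `asyncio.sleep`: run one after another they would take about the sum of their delays (0.6 s), while `asyncio.gather` brings wall-clock time close to the slowest call. The tool names and delays are invented.

```python
import asyncio
import time

async def fake_tool(name: str, delay_s: float) -> dict:
    """Stand-in for an API call (CRM, billing, ticketing) with network latency."""
    await asyncio.sleep(delay_s)
    return {"tool": name, "ok": True}

async def gather_context() -> tuple[list[dict], float]:
    start = time.perf_counter()
    # gather runs the coroutines concurrently and returns results in argument order.
    results = await asyncio.gather(
        fake_tool("crm_profile", 0.3),
        fake_tool("billing_history", 0.2),
        fake_tool("open_tickets", 0.1),
    )
    return list(results), time.perf_counter() - start

if __name__ == "__main__":
    results, elapsed = asyncio.run(gather_context())
    print([r["tool"] for r in results], f"{elapsed:.2f}s")
```

This only helps when the calls are genuinely independent; steps that depend on each other's output still have to run in sequence.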

Q : What extra logging and monitoring do you need before auditors or regulators will accept a multi-agent AI stack?

A : You need end-to-end traces showing each agent’s inputs, tools, outputs, and decisions. Regulators will expect role-based access control, immutable logs, retention policies, and the ability to reconstruct what happened in any sensitive case. Pair this with incident workflows, model risk documentation, and periodic testing (e.g., red-teaming prompts and tools). Many teams build dashboards that link AI traces with traditional audit logs to satisfy GDPR/DSGVO, UK-GDPR, HIPAA, and PCI DSS expectations.

Q : How do multi-agent chatbots handle language and localization across the US, UK, Germany and wider Europe?

A : Multi-agent AI makes it easier to separate language understanding from business logic. One agent focuses on multilingual NLU/NLG, while others handle local rules (US vs UK vs EU, BaFin vs FCA, SEPA vs local schemes). You can also assign agents to region-specific compliance, enabling flows that adapt to a user in Berlin versus one in the Netherlands. For many enterprises, this layered approach is key to scaling assistants across English, German, and other EU languages without rewriting every workflow.