Preventing AI Hallucinations in Arabic Language Models

Preventing AI hallucinations in Arabic language models for GCC enterprises means combining Arabic-first training, retrieval and verification layers so chatbots never “guess” about fees, fines, fatwas or regulations. For banks, governments and telcos in Saudi Arabia, the United Arab Emirates and Qatar, this usually looks like an in-region knowledge base, RAG, validation rules and human oversight wrapped in a clear AI governance framework.

Introduction

Imagine a retail bank in Riyadh launching an Arabic chatbot. A customer asks about a Saudi Central Bank (SAMA) complaints deadline; the bot confidently “invents” a rule, or miscalculates zakat based on a hallucinated fatwa. When your goal is preventing AI hallucinations in Arabic language models, that kind of failure isn’t just a UX glitch; it’s a risk event.

In Saudi Arabia, the United Arab Emirates and Qatar, hallucinations are amplified by three things: heavy regulation (banking, telecoms, health, public services), Arabic-first user journeys, and strong social and religious sensitivities around misusing scripture or government decisions. For GCC enterprises, “good enough” GenAI is not acceptable.

Put simply, preventing AI hallucinations in Arabic language models means designing a verification layer that catches wrong or fabricated answers before they reach citizens or customers, and aligning that layer with emerging AI rules, data laws and sector regulators. This article walks through the technical causes, a practical verification-layer architecture, and how to keep it aligned with bodies like the Saudi Data & AI Authority (SDAIA), the Telecommunications and Digital Government Regulatory Authority (TDRA) and Qatar Central Bank (QCB).

What “Preventing AI Hallucinations” Really Means for Arabic Models

Working definition of hallucinations in Arabic LLMs

In this context, “preventing AI hallucinations” doesn’t mean blocking all creativity. It means stopping confidently wrong factual answers in Arabic interfaces, especially where money, law, religion or government are involved. Examples include fabricated fatwas, imaginary ministerial decrees, non-existent circulars, wrong IBAN formats or false deadlines for fines in cities like Dubai or Doha.

Q: What are the main causes of AI hallucinations in Arabic language models used by GCC enterprises?

A: The main causes are weak or missing Arabic training data in regulated domains, dialect and code-switching complexity, and lack of grounding in trusted in-region sources. When models are forced to “guess” beyond their knowledge, they invent confident but wrong answers.

Why Arabic morphology, dialects and data gaps increase hallucination risk

Arabic is morphologically rich, with complex inflection and clitics in Modern Standard Arabic and very different Gulf dialects. Users freely mix MSA, Khaleeji, English and even romanised Arabic (“zakat fee”, “SAMA rules ksa”), which many global LLMs handle poorly. On top of that, high-quality labelled corpora for banking, telecoms, government and energy in Arabic are limited, so the model often has no solid ground truth to draw on for GCC-specific questions.

GCC business impact: from citizen trust to regulatory escalation

One hallucinated answer about loan deferral, digital ID, or telecom penalties can trigger a complaint on social media in Jeddah, escalate to a formal case, and quickly reach a regulator or Sharia board. This is why hallucinations are increasingly treated as an AI governance and operational risk issue, not a mere “model quality” bug. Boards now expect hallucination controls to sit alongside cybersecurity, data privacy and business continuity in their risk registers.

GCC Regulators’ View on Hallucinations and Reliable GenAI

How the Saudi Data & AI Authority (SDAIA) links hallucinations to misinformation and PDPL risk

Saudi guidelines for generative AI stress accuracy, non-misleading content and protection of personal data under PDPL. Hallucinations that misrepresent government services, personal obligations or individuals’ data can be viewed as both misinformation and a data-quality failure. For ministries or banks in cities like Jeddah or Riyadh, this means content verification, provenance tracking and user disclosures (“AI-generated, may contain errors”) are no longer nice-to-have; they are part of responsible AI deployment.

UAE AI ethics, TDRA and sector regulators’ focus on transparency & robustness

UAE AI principles emphasise safety, reliability, transparency and human oversight in AI systems powering public services and media. For Arabic chatbots integrated with national digital ID or smart-government portals in Abu Dhabi, hallucination controls support both AI ethics and emerging privacy / data laws. They help demonstrate that responses are grounded in approved policies and that sensitive requests are routed to humans when confidence is low, in line with TDRA’s broader digital governance expectations.

Qatar’s ethical AI guidelines and robustness expectations

Qatar’s national AI framework, led by the Ministry of Communications and Information Technology (MCIT), emphasises reliability, non-harm and human oversight, supported by sector rules in finance and data protection. For a QCB-regulated bank or a citizen-service portal in Doha, that translates into clear expectations: GenAI answers must be auditable, traceable to sources and overrideable by humans. A hallucinating bot that misstates social benefits or energy subsidies cuts directly against those principles.

Designing a Verification Layer for Arabic GenAI in GCC Enterprises

What is a verification layer between the LLM and the end user?

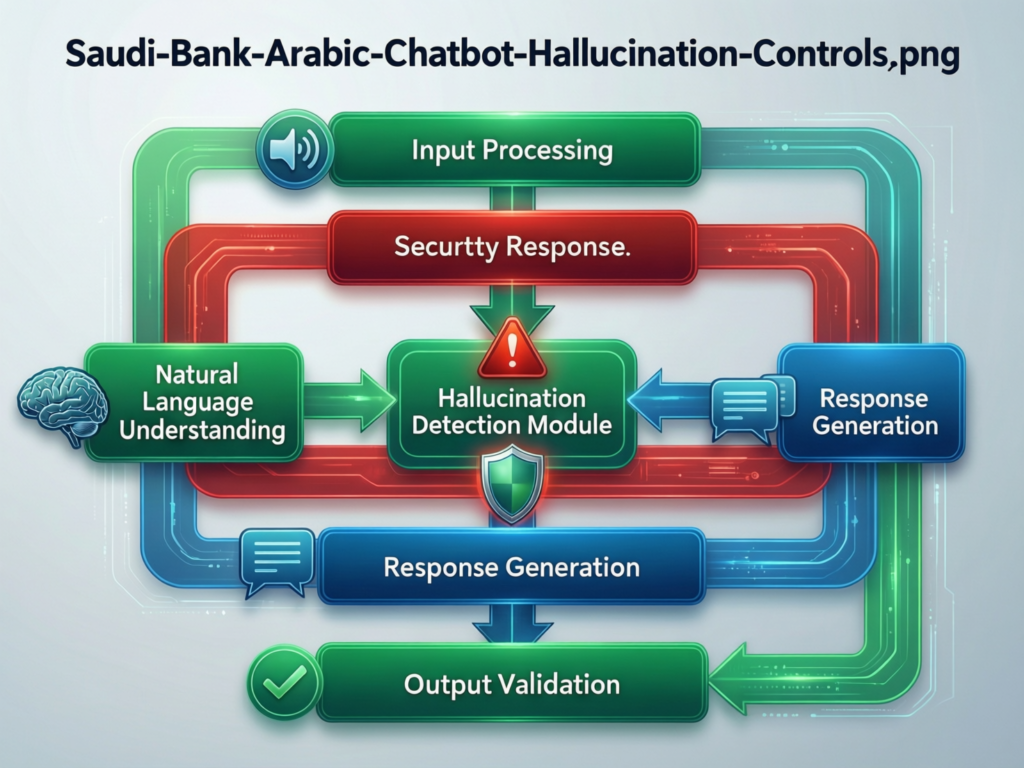

Think of the verification layer as a safety and governance “sandwich” between the model and channels like mobile apps, WhatsApp, IVR or web chat. Instead of sending raw LLM output straight to users, the layer retrieves trusted Arabic and English content, validates the draft answer, applies policy filters, then decides whether to send, edit, redact or escalate to a human. In regulated GCC sectors, this is where GenAI becomes auditable, explainable and approvable.
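The routing decision described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a reference implementation: the `Draft` fields, the `decide` function and the 0.8 threshold are all hypothetical, and the "edit" outcome is left out for brevity.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    SEND = "send"
    REDACT = "redact"
    ESCALATE = "escalate"


@dataclass
class Draft:
    text: str                     # draft answer produced by the LLM
    source_ids: list              # IDs of trusted documents retrieved for it
    confidence: float             # 0.0-1.0 score from a validator (assumed)
    high_risk_topic: bool         # e.g. fatwa, fines, fees (assumed flag)
    contains_personal_data: bool  # set by a PII detector (assumed)


def decide(draft: Draft, min_confidence: float = 0.8) -> Action:
    """Choose what happens to a draft before any channel sees it."""
    # Ungrounded or high-risk drafts always go to a human reviewer.
    if not draft.source_ids or draft.high_risk_topic:
        return Action.ESCALATE
    # Low validator confidence is also routed to a human.
    if draft.confidence < min_confidence:
        return Action.ESCALATE
    # Grounded and confident, but personal data must be masked first.
    if draft.contains_personal_data:
        return Action.REDACT
    return Action.SEND
```

Keeping the decision in one small, deterministic function makes it easier to review with compliance teams and to log alongside each answer.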

Core building blocks for Arabic hallucination control

A robust architecture usually includes:

An in-region, Sharia-aware, PDPL-ready knowledge base hosted on clouds such as Amazon Web Services Bahrain Region, Microsoft Azure UAE Central or Google Cloud Platform’s Doha region.

Retrieval-augmented generation tuned for MSA and Gulf dialects.

Rule-based and ML validators, confidence scoring and policy filters (e.g., “never answer fatwa questions autonomously”).

A human review queue for high-risk topics and continuous feedback loops into model alignment.
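As an illustration of the rule-based policy filter in the list above, here is a minimal Python sketch of a "never answer fatwa questions autonomously" rule. The keyword list and the `policy_violations` function are assumptions made for this example; a production filter would be maintained with compliance and Sharia reviewers and would likely pair rules with an ML classifier.

```python
import re

# Hypothetical keyword rules, one compiled pattern per blocked topic.
# Matches the English word and two common Arabic spellings.
BLOCKED_TOPICS = {
    "fatwa": re.compile(r"(fatwa|فتوى|فتاوى)", re.IGNORECASE),
}


def policy_violations(user_message: str) -> list:
    """Return the blocked topics that the message touches, if any."""
    return [
        topic
        for topic, pattern in BLOCKED_TOPICS.items()
        if pattern.search(user_message)
    ]
```

A non-empty result would send the conversation to the human review queue instead of the model. Plain keyword matching misses diacritised or misspelled forms, so it works best after query normalisation.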

Example blueprints for Saudi banks, UAE government portals and Qatar telcos

Saudi retail bank (SAMA-regulated)

An Arabic GenAI assistant answers card, PDPL and complaints questions, but only where retrieved SAMA circulars support the response. Conflicts or low-confidence answers are routed to agents in Riyadh.

UAE smart-government FAQ

A portal in Dubai uses GenAI, but every answer must cite approved policies and TDRA-aligned service catalogues before it appears in the UI.

Qatar operator contact centre

A telco bot in Doha runs RAG over billing, tariff and roaming data, combined with AI ethics guardrails from QCB and MCIT so it can’t fabricate contract terms.

Together, these show what a fact-checking layer looks like in real GCC banks, governments and telcos.

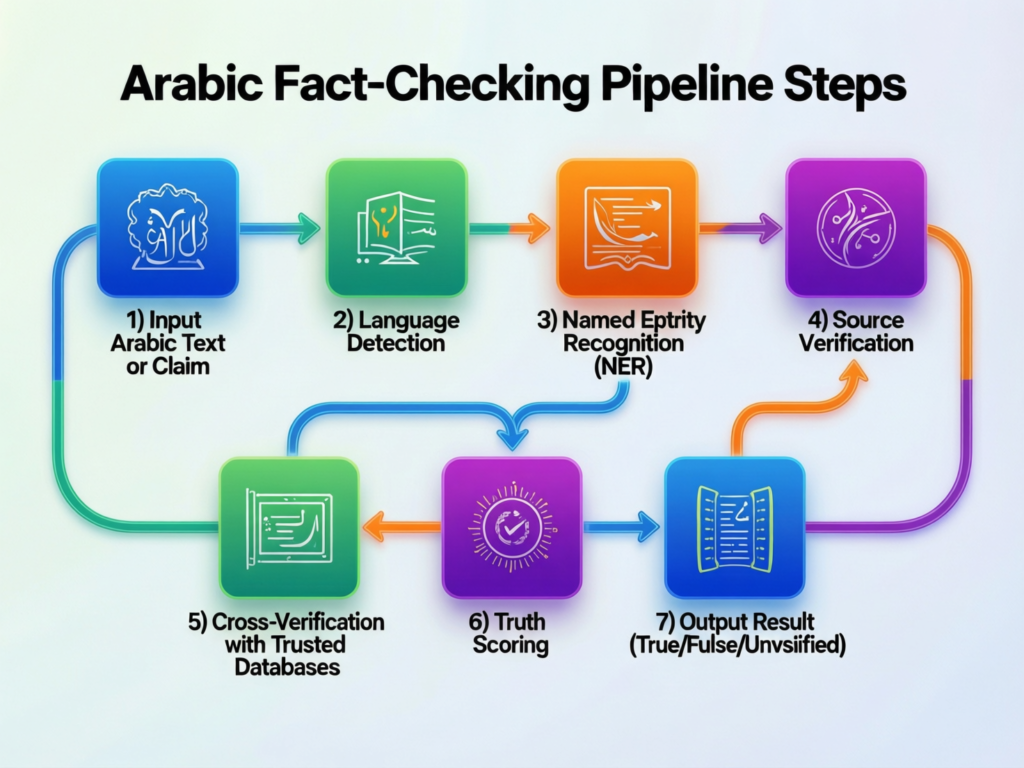

Implementing an Arabic Fact-Checking Pipeline in GCC Use Cases

Map high-risk journeys in banking, government and telecom

Start by mapping journeys where hallucinations are truly unacceptable: credit fees, zakat, late payment penalties, visa rules, social benefits, telecom penalties, or religious questions. Score each flow by potential exposure to bodies like SAMA, TDRA, QCB and internal Sharia boards, and by citizen impact. This shortlist drives where you invest first in verification and human-in-the-loop review.
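One simple way to turn this mapping into a shortlist is a two-factor score. The sketch below is illustrative only: the 1-5 scales, the multiplication and the names `Journey`, `risk_score` and `shortlist` are assumptions, and the actual scoring model should come from your risk and compliance teams.

```python
from dataclasses import dataclass


@dataclass
class Journey:
    name: str
    regulator_exposure: int  # 1 (low) to 5 (high), assigned by compliance
    citizen_impact: int      # 1 (low) to 5 (high), assigned by product


def risk_score(journey: Journey) -> int:
    """Multiply the two factors so a journey high on both ranks first."""
    return journey.regulator_exposure * journey.citizen_impact


def shortlist(journeys, top_n=3):
    """Return the top_n journeys by descending risk score."""
    return sorted(journeys, key=risk_score, reverse=True)[:top_n]
```

For example, a zakat calculation journey scored 5 and 5 would rank above a branch-hours FAQ scored 1 and 2, so it would receive verification and human review first.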

Build or curate an Arabic-first knowledge base and RAG layer

Next, build an Arabic-first knowledge base hosted in-region (for example on AWS Bahrain, Azure UAE Central or GCP Doha) and tag every document by regulator, sector, jurisdiction and effective date. Include bilingual content and handle spelling variants so retrieval works regardless of whether users type “credit card” or “credit card ksa”. This is also where you apply data-governance rules from bodies like the National Data Management Office (NDMO).
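A minimal Python sketch of the document tagging and spelling-variant handling described above follows. The `KnowledgeDoc` fields mirror the tags named in the text; the normalisation choices (stripping diacritics and tatweel, unifying alef forms, mapping alef maqsura to ya) are common conventions but are assumptions here, and some of them lose information, so test them against your own retrieval data.

```python
import re
import unicodedata
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class KnowledgeDoc:
    doc_id: str
    regulator: str        # e.g. "SAMA", "TDRA", "QCB"
    sector: str           # e.g. "banking"
    jurisdiction: str     # e.g. "KSA"
    effective_date: date
    text: str


# Arabic short-vowel marks (U+064B-U+0652) and superscript alef (U+0670).
_DIACRITICS = re.compile("[\u064B-\u0652\u0670]")
# Alef with hamza above, hamza below, and madda.
_ALEF_VARIANTS = re.compile("[\u0623\u0625\u0622]")


def normalize_query(text: str) -> str:
    """Fold common Arabic spelling variants so queries match indexed text."""
    text = unicodedata.normalize("NFKC", text)
    text = _DIACRITICS.sub("", text).replace("\u0640", "")  # drop tatweel
    text = _ALEF_VARIANTS.sub("\u0627", text)               # bare alef
    text = text.replace("\u0649", "\u064A")                 # maqsura -> ya
    return " ".join(text.lower().split())


def in_force(doc: KnowledgeDoc, on: date) -> bool:
    """True if the document was already effective on the given date."""
    return doc.effective_date <= on
```

Applying the same `normalize_query` to both indexed documents and incoming queries keeps the two sides consistent.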

Add validation rules, human review and audit trails

Finally, configure the verification engine: cross-check answers against retrieved sources; require citations for financial or legal claims; and block responses when confidence or coverage is low. Log prompts, retrieval snippets, model drafts, overrides and final answers with timestamps and user IDs. This creates the audit trail you’ll need for internal audit, risk committees and any future supervision visits.
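The checks and the audit record described above might look roughly like this in Python. It is a sketch under assumptions: the function names, reason codes, field names and the 0.8 threshold are hypothetical, and the citation check only confirms that cited IDs were actually retrieved, not that the cited text supports the claim.

```python
import json
from datetime import datetime, timezone


def validate(cited_ids: list, retrieved: dict, confidence: float,
             min_confidence: float = 0.8) -> tuple:
    """Return (passed, reason) for a draft answer's citations and score."""
    if not cited_ids:
        return False, "no_citation"
    # Every cited ID must be one of the documents we actually retrieved.
    if any(doc_id not in retrieved for doc_id in cited_ids):
        return False, "citation_not_in_retrieved_sources"
    if confidence < min_confidence:
        return False, "low_confidence"
    return True, "ok"


def audit_record(user_id: str, prompt: str, retrieved: dict,
                 draft: str, final_answer: str, reason: str) -> str:
    """Serialise one interaction as a JSON line for the audit trail."""
    return json.dumps({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "prompt": prompt,
        "retrieved": retrieved,        # snippet ID -> snippet text
        "draft": draft,
        "final_answer": final_answer,  # may differ after an override
        "reason": reason,
    }, ensure_ascii=False)             # keep Arabic text readable in logs
```

Writing one JSON line per interaction keeps the log easy to query later; retention and masking of user IDs would follow your PDPL and internal data policies.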

Evaluating and Monitoring Hallucinations in Arabic Language Models

Building Arabic-specific hallucination benchmarks and test suites

Before going live, create evaluation sets in domains like retail banking, Islamic finance, telecom tariffs, smart-city services and health, with scenarios in MSA plus Gulf dialects. Test sovereign Arabic LLMs and global models side-by-side and compare hallucination rates for GCC-specific content. Over time, your “Arabic hallucination benchmark” becomes a key internal KPI for GenAI releases.
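To compare models side by side, the benchmark needs a consistent metric. The sketch below assumes each test answer has already been labelled by a human reviewer as hallucinated or not; the tuple layout and the `hallucination_rates` name are illustrative choices, not a standard.

```python
from collections import defaultdict


def hallucination_rates(results):
    """Compute the hallucination rate per (model, language variety).

    `results` is an iterable of (model, variety, hallucinated) tuples,
    where `hallucinated` is a reviewer-assigned boolean.
    """
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for model, variety, hallucinated in results:
        key = (model, variety)
        totals[key] += 1
        flagged[key] += int(hallucinated)
    return {key: flagged[key] / totals[key] for key in totals}
```

Splitting the rate by variety (for example "msa" versus "gulf") helps show whether a model that looks reliable in MSA degrades on dialect input.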

Online monitoring

For pilots in Riyadh, Dubai or Doha, run the chatbot in shadow mode: log and evaluate answers without exposing them to users. Once live, sample sessions for manual review and run Arabic red-teaming exercises that probe for invented regulations, fake hadith, or fabricated circulars. Findings should feed both model tuning and stricter validation rules.

Dashboards and alerts for hallucination incidents

Set up dashboards showing hallucination incidents by journey, channel and regulator (e.g., “SAMA-facing journeys”, “TDRA-governed journeys”). Configure alerts when certain thresholds are crossed, and plug them into your existing incident-response process. Material issues should be summarised for internal risk committees, alongside other operational and conduct risks.
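A threshold alert of the kind described above can be sketched as a small function. The journey names, per-journey thresholds and the 1% default below are hypothetical values chosen for illustration; real thresholds would be agreed with risk and compliance.

```python
def journeys_over_threshold(incidents: dict, sessions: dict,
                            thresholds: dict, default: float = 0.01):
    """Return (journey, rate) pairs whose incident rate exceeds its limit.

    incidents:  journey -> confirmed hallucination incidents in the window
    sessions:   journey -> total sessions in the same window
    thresholds: journey -> maximum tolerated rate (falls back to `default`)
    """
    alerts = []
    for journey, session_count in sessions.items():
        if session_count == 0:
            continue  # no traffic, nothing to measure
        rate = incidents.get(journey, 0) / session_count
        if rate > thresholds.get(journey, default):
            alerts.append((journey, rate))
    # Worst offenders first, so the incident process sees them at the top.
    return sorted(alerts, key=lambda item: item[1], reverse=True)
```

The returned list could then be passed to whatever paging or ticketing tool your incident-response process already uses.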

Governance Playbook for GCC AI Teams

Defining roles across product, data science, compliance and security

Preventing hallucinations is a team sport. Product owns user journeys and acceptance criteria; data science owns model selection and performance; platform/infra owns deployment and observability; information security owns access and logging; and legal / compliance sign off on rules and disclosures. Make hallucination controls explicit in AI risk registers and model-approval workflows, not hidden in technical documents.

Aligning with NDMO, SAMA, TDRA, QCB, ADGM and DIFC expectations

Map each use case to its regulators: NDMO and Saudi Central Bank (SAMA) for Saudi banks and fintech; TDRA plus Abu Dhabi Global Market (ADGM) / Dubai International Financial Centre (DIFC) for UAE financial and digital services; QCB and MCIT for Qatar banking and public-sector deployments. Your verification layer, logging and reporting should make it easy to evidence accuracy, robustness and human oversight across all of them.

Build vs. buy: platform choices for verification layers

Some teams will build custom RAG and verification stacks; others will adopt off-the-shelf platforms. In RFPs, ask vendors about Arabic language coverage (MSA + Gulf), in-country hosting options, data residency, audit-logging detail, regulator-ready reporting and support for human-in-the-loop. Look for partners who understand GCC patterns, just as you would when choosing web development services or specialised Webflow development for your front-end stack.

Concluding Remarks

For GCC organisations, preventing hallucinations in Arabic language models means: understand the linguistic and data causes; design a verification layer between the model and user; implement a fact-checking pipeline step by step; evaluate and monitor hallucinations continuously; and embed all of this in a regulator-ready governance model.

Maturity stages for hallucination control in GCC organisations

Most teams move from ad-hoc pilots in a single department, to reusable verification patterns in banking or government, and ultimately to an enterprise-wide verification platform covering Saudi, UAE and Qatar operations. At higher maturity, hallucination metrics, audits and regulator reporting are as routine as uptime, latency or cybersecurity incidents.

90-day plan for a verification-layer proof of concept

A pragmatic 90-day plan might focus on one high-risk, high-volume Arabic chatbot, for example a complaints bot in Riyadh, a Dubai smart-city service assistant, or a Doha telco contact-centre bot. Define clear success metrics (hallucination rate, resolution time, regulator-sensitive journeys), implement the three-step fact-checking pipeline above, and document everything so it can scale to other journeys and markets.

If you’re planning an Arabic-first chatbot for a bank, telco or government entity in the GCC, you don’t have to choose between innovation and safety. The team at Mak It Solutions can help you design and implement verification layers, RAG pipelines and governance that work with your existing stack. Explore our guides on web development trends in the Middle East for KSA & UAE or on indexing controls, then reach out for a tailored GenAI verification roadmap for your Saudi, UAE or Qatar operations.

FAQs

Q: Is preventing AI hallucinations explicitly required under SDAIA’s Generative AI guidelines for public- and government-sector projects in KSA?

A: SDAIA’s public-sector GenAI guidance emphasises accuracy, non-misleading content, human oversight and respect for PDPL and other Saudi laws. While it may not use the word “hallucination” on every page, it clearly frames risks from incorrect or fabricated outputs, especially in citizen services and communications. For ministries, authorities and municipalities, that means they are expected to deploy content verification, disclosure and governance measures, and to be able to show how they prevent or catch harmful AI errors in line with Saudi Vision 2030’s focus on trusted digital government.

Q: How should a UAE bank document and report AI hallucination incidents to satisfy internal audit and local regulator expectations?

A: A UAE bank should treat hallucination incidents like other operational risk events. Each case needs a record of the user journey, prompt, retrieved documents, model output, corrections, impact assessment and final resolution. Aggregate reports should highlight root causes, fixes (e.g., new rules, better training data) and lessons learned. Aligning this with TDRA’s digital governance principles and financial-centre expectations in ADGM or DIFC helps internal audit and regulators see that hallucinations are monitored and controlled within a formal risk framework, not left to chance.

Q: Can Qatar ministries host verification-layer knowledge bases outside national borders, or do AI hallucination controls need in-country data residency?

A: Qatar’s AI and data-protection framework generally favours strong control over sensitive government and citizen data, with cross-border transfers subject to conditions. For many ministries, the safest posture is to host verification-layer knowledge bases and logs within Qatar or trusted regional zones, and apply strict transfer controls when data leaves. Where cross-border hosting is used, contracts, technical safeguards and DPIAs should show how citizen data and policy content stay protected while still supporting robust hallucination controls and AI reliability in line with Qatar National Vision 2030.

Q: What Arabic datasets and benchmarks are recommended when evaluating hallucination rates in sovereign Arabic LLMs used in GCC projects?

A: No single public dataset covers all GCC scenarios, so most teams blend several sources. Start with high-quality MSA corpora, add Gulf-dialect data from customer-service transcripts (properly anonymised) and layer in domain-specific documents from banking, telecoms, logistics and government. Build your own evaluation sets around real user questions, using both MSA and dialect. For sovereign Arabic LLMs, compare their performance on these tests to global models and track hallucination metrics over time, especially for SAMA-, TDRA- and QCB-sensitive journeys that matter most to regulators.

Q: How can GCC organisations prove to regulators that their Arabic chatbots use a robust verification layer and human oversight, not just a base LLM?

A: Evidence usually combines design documents, policies and live artefacts. You should be able to show architecture diagrams of the verification layer, RAG design, validation rules, escalation thresholds, reviewer workflows and monitoring dashboards. Policy-wise, map hallucination controls into AI risk, data-governance and conduct frameworks. Finally, keep sample logs, audit trails and red-teaming reports that demonstrate how hallucinations are detected, corrected and fed back into improvement. Together, these materials help convince regulators that your chatbot is a governed system, not just a raw model experiment.