AI Governance Operating Model for US, UK and EU CIOs

An AI governance operating model is the set of roles, structures, processes and tools that control how AI use cases are proposed, approved, built, deployed and monitored across the enterprise. In 2026, CIOs in the United States, United Kingdom and European Union need a risk-based, regulator-aligned operating model that covers both machine learning and generative AI so they can scale innovation without losing control of compliance, security or ethics.

Introduction.

If your enterprise is serious about AI in 2026, you need more than a policy PDF: you need an AI governance operating model that actually runs day to day. AI is now mainstream: one recent global index reported that roughly 78% of organisations were using AI in 2024, up from 55% the year before.

But governance has not kept up. A 2025 study found that while about 71% of enterprises are using or piloting AI, only around 30% feel ready to scale it safely across the business. At the same time, the European Union’s AI Act is phasing in a strict, risk-based regime, while NIST’s AI Risk Management Framework (AI RMF 1.0) has become a key reference point for “responsible AI” in the United States.

In other words: boards want AI everywhere, regulators are sharpening their pencils, and CIOs are stuck in the middle.

Note.

This article is for general information only and does not constitute legal advice. Always involve your legal and compliance teams when interpreting regulations.

Board pressure, GenAI adoption and regulatory heat in US, UK and Europe

Boards in New York, London and Frankfurt are now asking the same questions:

Where are our AI systems?

Who is accountable if something goes wrong?

Are we compliant with the EU AI Act, GDPR/DSGVO, UK GDPR and sector rules, and aligned with the NIST AI RMF?

IBM research in 2025 suggested nearly 74% of organisations have only “moderate or limited” coverage for technology, third-party and model risks within their AI governance. That governance gap is exactly where CIOs, CDOs and CISOs are now being pulled in by boards, audit committees and regulators.

Who this guide is for (CIOs, CDOs, CISOs, heads of risk and compliance)

This playbook is written for CIOs and their closest partners: CDOs, CISOs, heads of risk and compliance, and business leaders who increasingly own AI-heavy products. It assumes you’re already running AI in at least one critical business function, for example:

A US bank using AI for credit decisioning in New York

A UK NHS supplier building diagnostic support tools for clinicians in London

A BaFin-regulated bank in Frankfurt using AI for trading surveillance

A pan-European group with operations in Germany, France, the Netherlands and the Nordics

Your challenge: design an AI governance operating model that keeps regulators, customers and your board comfortable without killing innovation.

Snapshot answer: what “good” AI governance looks like in 2026

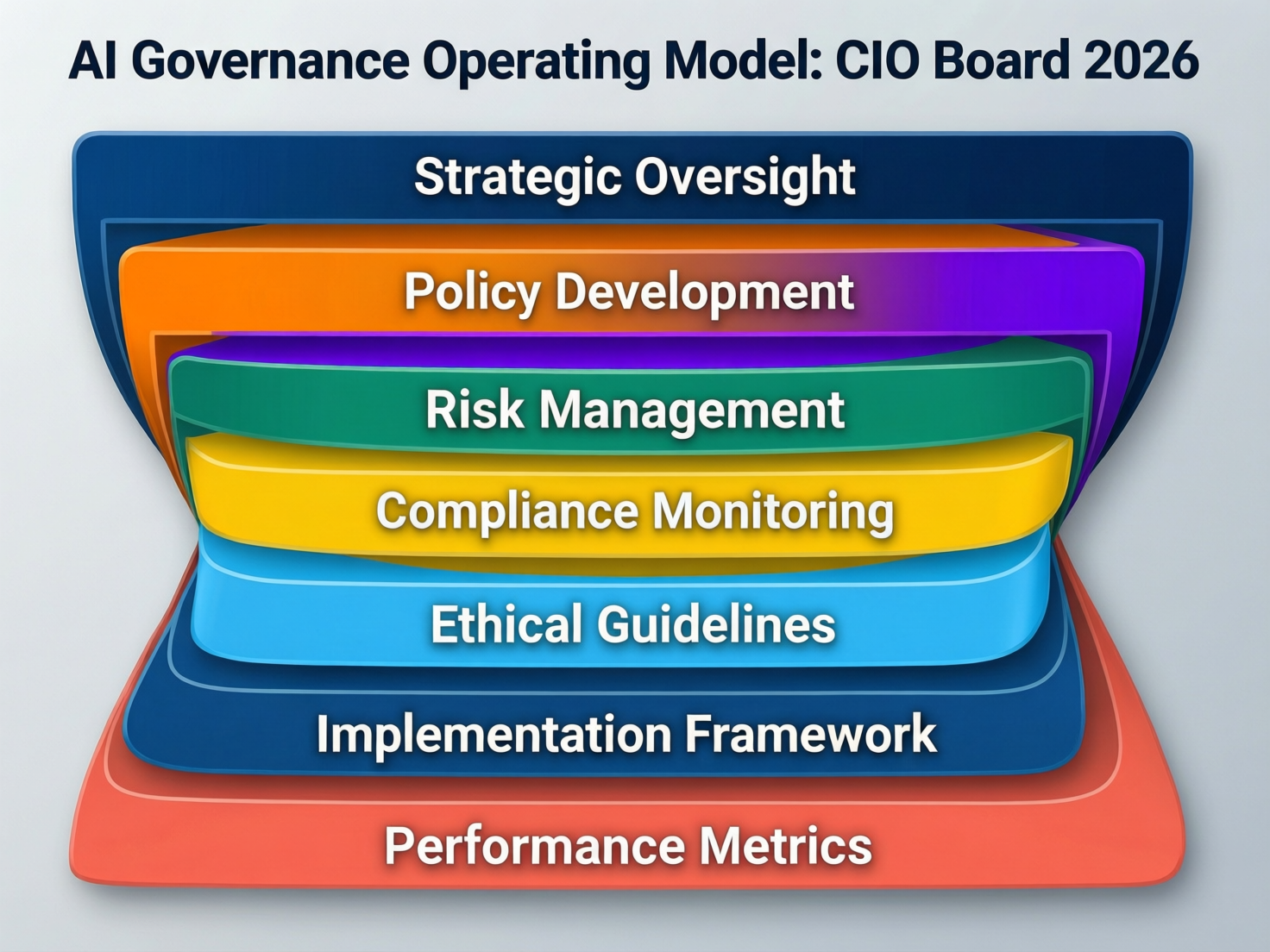

At a glance, a “good” AI governance operating model in 2026 has five pillars:

Clear ownership

A named executive owner (often the CIO) with shared accountability across CDO, CISO, legal and the business.

Risk-based controls

AI risk management and assurance aligned to EU AI Act risk levels, GDPR/DSGVO, UK GDPR and NIST AI RMF, with heavier controls where impact is higher.

GenAI guardrails

A distinct layer for generative AI tools and assistants (e.g. ChatGPT-style copilots) covering hallucinations, IP leakage and toxic outputs.

Integrated tooling

AI lifecycle governance embedded in existing GRC, ITSM and cloud-native controls (e.g. ServiceNow, ModelOp, and cloud platforms such as AWS, Microsoft Azure and Google Cloud).

Measurable outcomes

Governance tied to metrics (incidents, model drift, bias findings, approvals and time to market) rather than only documents.

Mak It Solutions’ recent work on AI observability, prompt-injection defence and enterprise AI agents shows how these pillars can be made concrete across US, UK and EU environments. (Mak it Solutions)

What Is an AI Governance Operating Model?

Working definition for CIOs in 2026

For CIOs in 2026, an AI governance operating model is the set of roles, structures, processes and tools that control how AI use cases are proposed, approved, built, deployed, monitored and ultimately retired across the enterprise. It turns responsible AI policies and ethics principles into repeatable workflows attached to real systems (Jira, ServiceNow, MLOps platforms, data catalogues and GenAI sandboxes), not just slideware.

It spans traditional machine learning, rule-based decision engines and the new wave of GenAI assistants, copilots and agents.

How it differs from an AI governance framework or policy deck

An AI governance framework or policy deck describes what you believe: principles such as fairness, transparency, security and human oversight, and requirements such as “high-risk AI must have documented human-in-the-loop review.” Frameworks are important, but they’re only step one.

An AI governance operating model describes who does what, when, using which systems in day-to-day operations. For example:

Who triages new AI ideas into an AI/model inventory?

Which committee approves an EU AI Act “high-risk” system before go-live?

How are production models monitored for drift and bias, and who gets paged when something breaks?

The framework fits on a page; the operating model shows up in your calendars, tickets, dashboards and RACI.

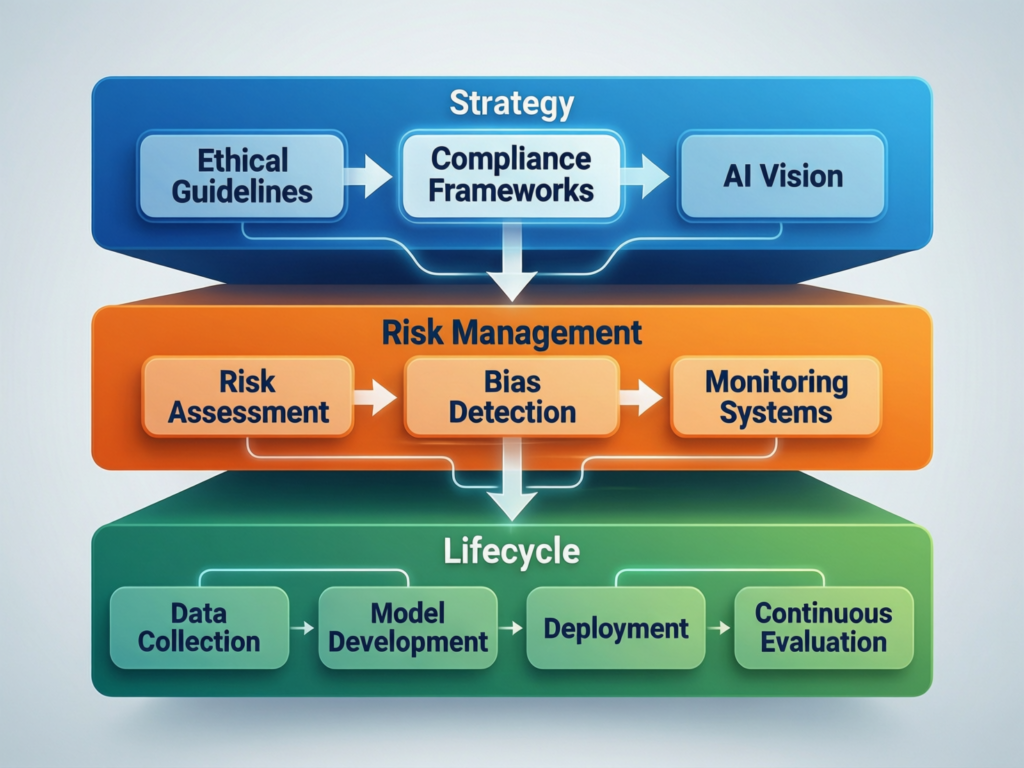

The three-tier model.

A practical way to structure your AI governance operating model is a three-tier model.

Strategy (Board / C-suite / CIO)

Sets AI ambition, risk appetite and funding, approves the AI policy framework, and defines which uses are off-limits.

Risk, compliance & ethics oversight

Translates laws and standards (EU AI Act, GDPR/DSGVO, UK GDPR, HIPAA, PCI DSS, SOC 2, NIST AI RMF) into control requirements and tests.

Operational lifecycle governance

The engine room, from intake → design → build → validate → deploy → monitor → audit → retire. This is where AI lifecycle governance lives: risk scoring, data protection impact assessments, model validation, explainability checks, monitoring and periodic audits.

US vs UK vs EU/German nuances in AI governance models

While the basic structure is similar across geographies, the emphasis differs.

European Union / Germany.

The EU AI Act introduces four risk categories (unacceptable, high, limited, minimal), with strict obligations for high-risk systems and strong links to GDPR and national laws such as Germany’s Bundesdatenschutzgesetz (BDSG). BaFin-regulated banks in Frankfurt will typically fold AI into existing model risk management and operational risk committees.

United Kingdom.

UK GDPR and the ICO’s guidance remain central, while sector regulators like the Financial Conduct Authority and NHS-linked bodies set expectations in financial services and health. Open Banking rules and growing FCA powers make explainability and customer-outcome monitoring non-negotiable in London’s financial hub.

United States.

The NIST AI RMF is a voluntary but influential standard for AI risk management, complementing binding sector rules such as HIPAA in health and various state privacy laws. US CIOs tend to lean heavily on existing cyber, privacy and model risk functions.

A good AI governance operating model flexes to these differences while still feeling like one global way of working.

Who Owns AI Governance? CIO Roles, Committees and RACI

CIO vs CDO vs CISO.

In practice, four ownership patterns show up most often.

CIO-led

Common in enterprises where AI is embedded in core systems and infrastructure (for example, manufacturers or retailers). The CIO owns the operating model; CDO, CISO and legal are key partners.

CDO-led

Effective in data-heavy organisations (banks, insurers) where the CDO already runs data governance and model risk.

CISO-anchored

Rarely the primary owner, but increasingly seen where AI risk is framed as an extension of cyber, third-party and information security.

Hub-and-spoke

A central AI governance hub (often under CIO/CDO) sets standards and runs core processes, while business units in the US, UK, Germany, France, the Netherlands or the Nordics take local ownership of specific models.

What matters most is not which title is at the top, but that business owners share accountability with technology and risk, and that model risk management for machine learning and GenAI is explicitly in scope.

AI steering committee, ethics council and cross-functional governance board

At minimum, a CIO in 2026 should stand up three cross-functional groups:

Enterprise AI Council

Sets AI strategy, approves policy and the AI risk taxonomy, and arbitrates tough calls (for example, whether a borderline use case is “high-risk” under the EU AI Act).

Model Risk & Assurance Committee

Oversees model validation, performance, drift, explainability and model risk management, often by expanding existing MRM committees in banks.

GenAI Working Group

Focused on shadow AI, copilots, AI assistants and prompt-driven use cases across US, UK and EU teams.

Many organisations also add a Responsible AI / Ethics Council with external advisors, especially in public sector, healthcare and critical infrastructure.

Sample AI governance RACI matrix for CIOs in the US, UK and Germany

You don’t need a massive spreadsheet to start. A simple AI governance RACI for key activities might look like:

Use-case intake & inventory.

Responsible – product owner or business lead

Accountable – CDO

Consulted – CIO, risk, security

Informed – data protection officer (DPO)

Risk classification & DPIA.

Responsible – risk / compliance

Accountable – DPO (EU/UK) or privacy officer (US)

Consulted – product owner, CISO

Model approval for high-risk AI.

Accountable – Enterprise AI Council

Responsible – model owner

Consulted – legal, CISO, DPO, works council where applicable (common in Germany)

Monitoring & incident response.

Responsible – model owner plus SRE/ML Ops

Accountable – CIO or delegated head of AI platforms

Consulted – CISO, risk, communications
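One way to keep a RACI like this out of spreadsheets is to hold it as data that intake and approval workflows can query. The Python sketch below encodes the four activities above. The `RaciEntry` class, the dictionary keys and the two helper functions are illustrative names chosen for this example, not part of any tool mentioned in this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RaciEntry:
    """One row of the RACI: who holds each role for an activity."""
    responsible: tuple[str, ...]
    accountable: str
    consulted: tuple[str, ...] = ()
    informed: tuple[str, ...] = ()


# Roles come from the sample RACI above; the activity keys are hypothetical.
RACI = {
    "use_case_intake": RaciEntry(
        responsible=("product owner or business lead",),
        accountable="CDO",
        consulted=("CIO", "risk", "security"),
        informed=("DPO",),
    ),
    "risk_classification_dpia": RaciEntry(
        responsible=("risk / compliance",),
        accountable="DPO (EU/UK) or privacy officer (US)",
        consulted=("product owner", "CISO"),
    ),
    "high_risk_model_approval": RaciEntry(
        responsible=("model owner",),
        accountable="Enterprise AI Council",
        consulted=("legal", "CISO", "DPO", "works council"),
    ),
    "monitoring_incident_response": RaciEntry(
        responsible=("model owner", "SRE/ML Ops"),
        accountable="CIO or delegated head of AI platforms",
        consulted=("CISO", "risk", "communications"),
    ),
}


def accountable_for(activity: str) -> str:
    """Return the single accountable party for an activity."""
    return RACI[activity].accountable


def activities_consulting(role: str) -> list[str]:
    """List the activities where a given role must be consulted."""
    return [name for name, entry in RACI.items() if role in entry.consulted]
```

Keeping exactly one `accountable` value per activity is a deliberate constraint: it forces the "who signs off" question to be answered before a workflow is built on top of it.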

Embedding business ownership: product, operations and HR in the loop

To avoid “IT-only governance,” push accountability into business lines.

Product & operations teams own the AI systems that change customer outcomes or operational processes.

HR owns AI used in hiring, performance management or learning, working closely with legal and employee representatives in countries like Germany and France.

Finance and risk own capital, provisioning and risk appetite for AI investments.

The CIO orchestrates the AI governance operating model, but does not own every decision. Shared ownership is crucial for both compliance and adoption.

Designing an Enterprise AI Governance Operating Model & Framework

Centralised, federated and hybrid AI governance models

There is no one “correct” structure; instead, three patterns dominate.

Centralised

A single global AI governance team handles intake, review and approvals. This works well for Fortune 500 banks and highly regulated sectors where consistency matters more than speed.

Federated

Business units in the US, UK, Germany or the Nordics run their own AI governance within group standards. This fits diversified groups and German Mittelstand manufacturers with strong local autonomy.

Hybrid

Core policies, templates and tooling are centralised, but day-to-day approvals for lower-risk AI sit with local units (for example, a UK public-sector department or a French retail business).

Most enterprises end up hybrid: centralised for high-risk and GenAI platform decisions, federated for lower-risk departmental AI.

From framework to operating model.

To turn your responsible AI policies and ethics principles into an operating model:

Map each principle (for example, fairness) to concrete checks: bias testing, representative data sampling, diverse human review panels.

Assign those checks to specific workflow steps: design reviews, model validation gates, pre-deployment approvals.

Attach them to actual systems: MLOps pipelines, GRC tools, ticketing workflows.

For example, “transparency” becomes a rule that every high-risk system must have documented model cards, decision rationale logging and business-friendly explanations before deployment.

AI model lifecycle governance.

Robust AI lifecycle governance covers:

Intake & inventory

New ideas are logged, scoped and tagged with potential impact and regulatory flags (for example, high-risk under the EU AI Act).

Risk scoring & DPIAs

Impact and likelihood are assessed, including GDPR/DSGVO or UK GDPR data protection impact assessments where required.

Design & build

Data minimisation, security, explainability and robustness patterns are embedded from the start.

Validation & approval

Independent testing, fairness checks and sign-off by the Model Risk Committee or equivalent.

Deployment & monitoring

SLAs/SLOs, drift detection, performance dashboards and human-in-the-loop processes. Mak It Solutions’ work on AI observability is a good reference here. (Mak it Solutions)

Audit & retirement

Regular reviews, documentation updates and decommissioning plans.
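The lifecycle stages above can be enforced as a small state machine, so that a model cannot reach deployment without passing validation. The sketch below is a minimal illustration: the `Stage` names mirror the six stages listed here (with retirement split out as a terminal state), while the backward transitions (validation returning to design, audit returning to monitoring) are assumptions about how a team might handle rework, not something this article prescribes.

```python
from enum import Enum


class Stage(Enum):
    INTAKE = "intake_and_inventory"
    RISK_SCORING = "risk_scoring_and_dpia"
    DESIGN_BUILD = "design_and_build"
    VALIDATION = "validation_and_approval"
    DEPLOYMENT = "deployment_and_monitoring"
    AUDIT = "audit"
    RETIRED = "retired"


# Allowed moves between stages. Backward edges are assumptions for illustration:
# failed validation returns to design, and a passed audit returns to monitoring.
ALLOWED_TRANSITIONS = {
    Stage.INTAKE: {Stage.RISK_SCORING},
    Stage.RISK_SCORING: {Stage.DESIGN_BUILD},
    Stage.DESIGN_BUILD: {Stage.VALIDATION},
    Stage.VALIDATION: {Stage.DEPLOYMENT, Stage.DESIGN_BUILD},
    Stage.DEPLOYMENT: {Stage.AUDIT},
    Stage.AUDIT: {Stage.DEPLOYMENT, Stage.RETIRED},
    Stage.RETIRED: set(),
}


def advance(current: Stage, target: Stage) -> Stage:
    """Move to the target stage, or raise if the gate would be skipped."""
    if target not in ALLOWED_TRANSITIONS[current]:
        raise ValueError(f"{current.name} -> {target.name} is not an allowed transition")
    return target
```

In practice the same table could drive ticket workflow rules in an ITSM or GRC tool; the point is that skipped gates fail loudly rather than silently.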

AI governance council structure and charter

Your AI governance council charter should cover:

Membership

CIO/CDO, CISO, head of risk, DPO, key business leaders from US, UK and EU units.

Meeting cadence

Monthly for operational decisions, quarterly for strategy and risk appetite.

Approval thresholds

For example, all EU AI Act high-risk systems, NHS-adjacent health tools, or models touching PCI DSS-scoped card data must pass through the council.

Escalation paths

Clear routes to the board risk committee when AI risks exceed tolerance or cross-border data transfers trigger Schrems II concerns.
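Approval thresholds are easier to apply consistently when they are written as a routing rule rather than left to judgement in a meeting. The following Python sketch encodes the three example triggers from the charter above. The `UseCase` fields and the route names `enterprise_ai_council` and `local_approval` are hypothetical labels for illustration.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    eu_ai_act_high_risk: bool = False
    nhs_adjacent_health_tool: bool = False
    touches_pci_card_data: bool = False


def council_triggers(use_case: UseCase) -> list[str]:
    """Return the reasons (if any) a use case must go to the council."""
    reasons = []
    if use_case.eu_ai_act_high_risk:
        reasons.append("EU AI Act high-risk system")
    if use_case.nhs_adjacent_health_tool:
        reasons.append("NHS-adjacent health tool")
    if use_case.touches_pci_card_data:
        reasons.append("touches PCI DSS-scoped card data")
    return reasons


def approval_route(use_case: UseCase) -> str:
    """Route to the council when any threshold is met, otherwise approve locally."""
    return "enterprise_ai_council" if council_triggers(use_case) else "local_approval"
```

Returning the list of reasons, not just a yes/no, gives the council an audit trail of why each case landed in its queue.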

Compliance, Risk and the EU AI Act Inside Your Operating Model

Aligning AI governance with EU AI Act, GDPR/DSGVO, UK GDPR and US NIST AI RMF

European CIOs in particular cannot treat the EU AI Act, GDPR/DSGVO and UK GDPR as after-the-fact checklists. The AI Act’s risk-based regime, which bans “unacceptable risk” uses outright and imposes heavy obligations on high-risk systems, must be baked into use-case intake, design, testing, deployment and monitoring.

Similarly, the NIST AI RMF gives US organisations a structured way to identify, assess and mitigate AI risks across the lifecycle; if it sits only in a PDF, you’re not compliant in spirit.

Regulators and boards will increasingly ask how your AI governance operating model enforces these rules, not just whether you’ve heard of them.

Sector overlays.

Different sectors add extra layers on top of the AI Act and privacy rules:

Financial services

Banks and insurers map AI governance into existing model risk management, stress testing and conduct frameworks (BaFin in Germany, FCA in the UK).

Health

HIPAA (and its evolving Security Rule) in the US and NHS rules in the UK expect strict controls around PHI, explainability and human oversight. (HHS.gov)

Payments & SaaS

PCI DSS and SOC 2 push for strong logging, change management, access control and incident handling, all vital for AI systems making or supporting payment decisions.

Public sector & critical infrastructure

National security and resilience regulations can drive extra approval layers and stricter hosting constraints.

Your AI risk management and assurance approach should explicitly map each control in these regimes to the AI lifecycle.

Documentation, model inventories and risk classification

A mature AI governance operating model will reliably generate:

A model register / inventory: every model, rule engine and GenAI use case with owner, purpose, region, data, risk level and regulator references.

Risk classification: mapping internal risk tiers to EU AI Act categories and sector rules.

Technical documentation: model cards, data lineage, validation reports, benchmark results and limitation statements.

Human oversight plans: where humans sit in the loop, what they can override, and how they are trained.

Incident logs: including hallucination incidents, data leaks and bias findings, and the remediation steps taken.

These artefacts are what auditors, BaFin, the FCA or data protection authorities will expect to see.
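A model register entry and its risk mapping can be sketched as a small data structure. The example below uses the register fields listed above (owner, purpose, region, data, risk level, regulator references) and the four EU AI Act categories. The internal tier names in `INTERNAL_TIER_TO_EU` are invented for illustration; your own taxonomy and mapping would need to be agreed with legal and compliance.

```python
from dataclasses import dataclass, field
from enum import Enum


class EuAiActCategory(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical internal tiers mapped to EU AI Act categories.
INTERNAL_TIER_TO_EU = {
    "tier_0_prohibited": EuAiActCategory.UNACCEPTABLE,
    "tier_1_critical": EuAiActCategory.HIGH,
    "tier_2_moderate": EuAiActCategory.LIMITED,
    "tier_3_low": EuAiActCategory.MINIMAL,
}


@dataclass
class InventoryRecord:
    model_id: str
    owner: str
    purpose: str
    region: str
    internal_tier: str
    data_categories: list[str] = field(default_factory=list)
    regulator_refs: list[str] = field(default_factory=list)

    @property
    def eu_ai_act_category(self) -> EuAiActCategory:
        """Translate the internal tier into the EU AI Act category."""
        return INTERNAL_TIER_TO_EU[self.internal_tier]


def incomplete_fields(record: InventoryRecord) -> list[str]:
    """Return names of fields left empty, so gaps surface before an audit."""
    return [name for name, value in vars(record).items() if not value]
```

A check like `incomplete_fields` can run on every register update, which turns "every model has an owner" from a policy statement into something a dashboard can count.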

Cross-border data, cloud hosting and Schrems II constraints

Your AI governance operating model must reflect where data lives and where models run:

Use region-specific deployment patterns on AWS, Microsoft Azure and Google Cloud to keep EU personal data in EU regions, and UK data under UK-GDPR-compliant setups.

For EU–US transfers, embed your legal team’s approach to the EU–US Data Privacy Framework and standard contractual clauses into design and procurement workflows.

Under Schrems II, treat US-hosted or US-administered services with extra care; your operating model should define when EU-only, Germany-only or on-prem hosting is mandatory.

This is especially important for French and Dutch public-sector bodies and German industrials who often require strict data residency.
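Hosting rules of this kind lend themselves to a simple policy-as-code check at deployment time. The sketch below is illustrative only: the data classes, the region labels (`eu`, `de`, `uk`, `us`) and the contents of `RESIDENCY_POLICY` are placeholders, and the real rules must come from your legal team's reading of GDPR, UK GDPR and Schrems II.

```python
# Placeholder policy: which hosting regions each data class may use.
RESIDENCY_POLICY: dict[str, frozenset[str]] = {
    "eu_personal_data": frozenset({"eu", "de"}),
    "germany_only": frozenset({"de"}),
    "uk_personal_data": frozenset({"uk"}),
    "non_personal": frozenset({"eu", "de", "uk", "us"}),
}


def hosting_allowed(data_class: str, region: str) -> bool:
    """Fail closed: an unknown data class is allowed nowhere."""
    return region in RESIDENCY_POLICY.get(data_class, frozenset())


def violations(deployments: list[tuple[str, str, str]]) -> list[str]:
    """Given (name, data_class, region) tuples, return the names that break policy."""
    return [
        name
        for name, data_class, region in deployments
        if not hosting_allowed(data_class, region)
    ]
```

The fail-closed default matters more than the specific mapping: a new data class that nobody has classified yet should block deployment rather than slip through.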

Operating Model for Generative AI Tools and Assistants

Why GenAI needs its own governance layer

Generative AI introduces new risks: hallucinations, IP leakage, data exfiltration through prompts, prompt injection attacks, synthetic misinformation and toxic outputs. That’s why CIOs need a distinct GenAI operating model layered on top of existing ML governance.

Recent surveys suggest that by late 2024, around 71% of organisations were regularly using generative AI in at least one business function, often in customer service, marketing or software development. Without dedicated guardrails, shadow AI and uncontrolled copilots will quickly outrun your policies.

Shadow AI, employee usage policies and guardrails

A practical GenAI governance operating model should include:

Approved tools list

Which GenAI platforms (for example, enterprise ChatGPT, Microsoft 365 Copilot, GitHub Copilot) are allowed for which roles.

Usage policies

Clear do/don’t examples for confidential data, personal data, source code and regulated content, contextualised for US, UK and EU law.

Technical guardrails

Data loss prevention, network egress controls, tenant isolation and content filters, supported where possible by policies-as-code.

Security patterns

Controls to mitigate prompt injection attacks and tool-calling abuse, aligned with patterns Mak It Solutions explores in its secure AI agents and LLM security checklists. (Mak it Solutions)
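The approved tools list and usage policy above can be expressed as policies-as-code, so a gateway or browser plugin can check a request before it leaves the network. The sketch below is a minimal illustration. The tool names come from the examples in this section, but the role names, the `blocked_data` sets and the `check_request` function are assumptions, not a description of how any of these products enforce policy.

```python
# Hypothetical policy table: who may use each tool and which data classes are blocked.
APPROVED_TOOLS = {
    "enterprise ChatGPT": {
        "roles": {"all_staff"},
        "blocked_data": {"PHI", "payment data"},
    },
    "Microsoft 365 Copilot": {
        "roles": {"all_staff"},
        "blocked_data": {"PHI"},
    },
    "GitHub Copilot": {
        "roles": {"developer"},
        "blocked_data": {"PII", "PHI", "payment data", "trade secrets"},
    },
}


def check_request(tool: str, role: str, data_classes: list[str]) -> tuple[bool, str]:
    """Return (allowed, reason) for a proposed GenAI request."""
    policy = APPROVED_TOOLS.get(tool)
    if policy is None:
        return False, "tool not on approved list"
    if role not in policy["roles"] and "all_staff" not in policy["roles"]:
        return False, "role not permitted for this tool"
    blocked = sorted(set(data_classes) & policy["blocked_data"])
    if blocked:
        return False, "blocked data: " + ", ".join(blocked)
    return True, "allowed"
```

Returning a reason string alongside the decision makes denials explainable to employees, which tends to reduce the workarounds that create shadow AI in the first place.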

GenAI use-case intake and design review

For GenAI, your intake and design review process should explicitly ask:

What’s the business value and is GenAI actually the right tool?

What data will be sent to or generated by the model (PII, PHI, payment data, trade secrets)?

What prompt and context design is required to minimise hallucinations and leakage?

Where is human-in-the-loop review mandatory (for example, clinical decision support, credit decisions, HR decisions)?

Is there a safer or more constrained option (for example, retrieval-augmented generation over internal knowledge vs free-form internet-connected chat)?

GenAI systems used in high-risk contexts (for example, hiring or creditworthiness assessment) should be treated as high-risk AI under the EU AI Act, with corresponding documentation, testing and oversight.

Example GenAI RACI and workflow for US, UK and EU enterprises

A lightweight GenAI workflow might look like:

Business owner in London, New York or Berlin submits a GenAI idea into the AI intake portal.

CIO/CDO function classifies it, checks architecture and ensures it uses approved platforms.

CISO & data protection / legal review data flows, cross-border transfers and alignment to EU AI Act, GDPR/DSGVO, UK GDPR or US sector rules.

GenAI Working Group reviews prompts, tools and human oversight design.

Enterprise AI Council approves high-risk or cross-border cases.

Responsibilities differ slightly by region, but the pattern stays the same: business owns value and process; technology owns platforms and integration; risk/compliance own controls.

AI Governance Strategy, Roadmap and Maturity Model for 2026

Where are you today? Simple AI governance maturity model

A simple five-level AI governance maturity model for 2026:

Ad-hoc experiments

Shadow AI, scattered pilots, little or no inventory.

Emerging

Basic AI policies, informal review by security or data teams, some monitoring.

Defined

Documented AI governance operating model, RACI, committees and model inventory in place.

Integrated

Governance workflows embedded in delivery, DevOps, MLOps and GRC tools; consistent use across US, UK and EU units.

Optimised

Metrics-driven AI governance with dashboards, automation, playbooks and periodic external reviews (for example, by Deloitte, McKinsey & Company, Accenture or IBM, alongside partners like Mak It Solutions).

Most enterprises Mak It Solutions sees in the US, UK and Europe are somewhere between levels 2 and 3 (Emerging and Defined), with GenAI pushing them to move faster.

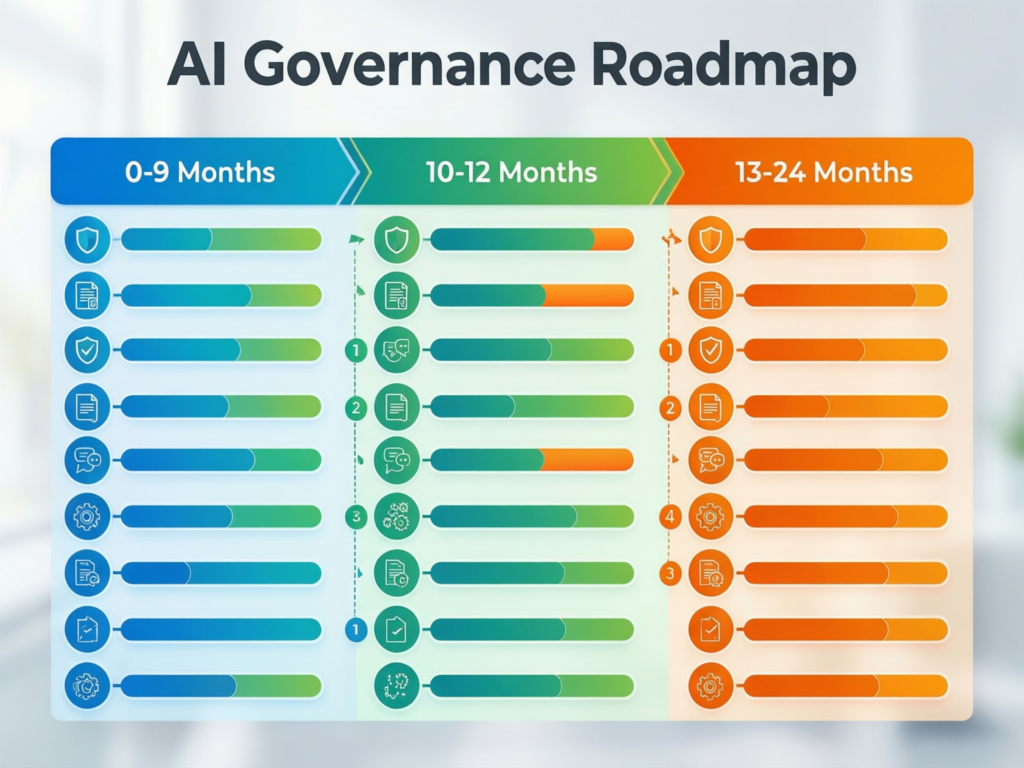

Phased roadmap: 90 days, 12 months, 24 months

A pragmatic roadmap balances innovation speed with risk controls and compliance:

0–90 days (Stabilise & discover)

Publish interim AI and GenAI usage policies; spin up an AI Council; build a first-pass model inventory; classify obvious high-risk use cases; stop the most dangerous shadow AI.

3–12 months (Build & embed)

Design and document your AI governance operating model; implement intake and approval workflows; integrate with ITSM/GRC; stand up model monitoring for priority systems; align with EU AI Act, GDPR/DSGVO, UK GDPR and NIST AI RMF.

12–24 months (Optimise & automate)

Roll out dashboards, automation, policy-as-code and AI observability; extend governance into multi-agent systems and enterprise AI agents; run regular audits and tabletop exercises. (Mak it Solutions)

Tooling landscape: platforms, cloud-native controls and integrations

Your tooling choices should support, not replace, the AI governance operating model. Common layers include:

Lifecycle & governance platforms

Tools such as ModelOp and ServiceNow that orchestrate AI lifecycle governance, approvals and documentation.

Cloud-native controls

Guardrails, content filters, key management and region controls from AWS, Microsoft Azure and Google Cloud.

Monitoring & observability

Drift, cost and quality monitoring for ML and GenAI, the focus of Mak It Solutions’ AI observability work. (Mak it Solutions)

GRC & ITSM integration

Mapping AI risk and incidents into existing risk registers and incident workflows, not inventing parallel processes.

Build vs buy: when CIOs should partner with vendors or consultancies

Deciding whether to build in-house or work with partners depends on:

Regulatory intensity

If you’re a BaFin-regulated bank or NHS-adjacent supplier, you’ll likely combine global consultancies (Deloitte, Accenture, IBM) with specialised partners like Mak It Solutions for implementation, automation and integration.

Scale & complexity

Large, multi-country enterprises often need enterprise-grade platforms and SI partners; mid-market firms may get further by combining targeted tools with advisory sprints.

Existing capabilities

Where you already have strong data governance, DevOps and security, you can keep more in-house and use Mak It Solutions for accelerators (for example, AI governance council charters, EU AI Act playbooks, technical reference architectures).

Practical Next Steps for CIOs in the US, UK and Europe

10-step checklist to stand up an AI governance operating model

Here’s a practical checklist you can run in the next quarter.

Define scope & objectives

Agree what “AI” covers (ML, rules, GenAI, agents) and what success looks like for the board.

Map stakeholders

Identify CIO, CDO, CISO, legal, risk, DPO, key business owners and regional leads (US, UK, Germany, France, Netherlands, Nordics).

Baseline policies

Publish or refresh AI and GenAI usage policies, aligned with existing data, security and privacy policies.

Build a model inventory

Catalogue existing AI models, rules engines, copilots and agents, including vendors and cloud regions.

Define an AI risk taxonomy

Map internal risk levels to EU AI Act categories, GDPR/DSGVO and sector rules (HIPAA, PCI DSS, SOC 2, Open Banking). (PCI Security Standards Council)

Stand up governance committees

Create the Enterprise AI Council, Model Risk & Assurance Committee and GenAI Working Group.

Document RACI

Clarify responsibilities for intake, risk assessment, approvals, monitoring and incident management.

Pilot end-to-end governance on 2–3 use cases

For example, a customer-service chatbot in the US, a risk model in a UK bank, and a predictive maintenance system in Germany.

Select and integrate tooling

Choose governance, monitoring and observability tools, and integrate them into your existing MLOps and ITSM stack.

Communicate & train

Run training for developers, product teams and business leaders; publish playbooks and FAQs; measure adoption.

Example roll-out scenarios in US, UK and German/EU organisations

US Fortune 500 bank (New York)

Extends existing model risk and CCAR governance to cover GenAI; uses NIST AI RMF as a unifying language; central AI Council approves all models impacting credit, trading or customer advice.

UK NHS supplier (London)

Treats all clinical-adjacent AI as the equivalent of EU AI Act high-risk, whether or not the Act formally applies; aligns to UK GDPR and NHS procurement frameworks; adds stringent human-in-the-loop and documentation checks.

German BaFin-regulated bank or DACH manufacturer (Frankfurt / Munich)

Uses EU AI Act and BaFin model-risk guidance as the backbone; prioritises EU-only cloud hosting and detailed documentation in German and English.

Similar patterns apply to enterprises in France and the Netherlands, with strong focus on EU AI Act and GDPR/DSGVO, while Nordic organisations often lead on ethical AI and sustainability reporting.

How to engage with partners.

Most CIOs don’t have the time or bandwidth to invent all of this from scratch. A common pattern is:

Run a 2–3 day AI governance discovery workshop with internal stakeholders and a partner.

Adopt target operating model templates for committees, RACI, lifecycle workflows and AI governance council charters.

Use accelerators for EU AI Act / NIST AI RMF mapping, GenAI risk playbooks and reference architectures.

Mak It Solutions can support with end-to-end design and implementation, from advisory and templates through to dashboards, automations and secure platform builds, working alongside your internal teams and, where relevant, global consultancies.

Key Takeaways

An AI governance operating model turns principles and policies into daily workflows, owners and tools across both ML and GenAI.

The three-tier structure (strategy, risk oversight, lifecycle operations) scales well across US, UK and EU enterprises facing different regulatory pressures.

Clear RACI and cross-functional committees (AI Council, Model Risk Committee, GenAI Working Group) are non-negotiable for accountable AI.

Compliance with the EU AI Act, GDPR/DSGVO, UK GDPR, NIST AI RMF and sector rules must be designed into intake, design, deployment and monitoring, not bolted on at the end.

GenAI needs its own guardrails, covering shadow AI, prompts, data flows and human oversight, especially for high-risk use cases.

A 90-day / 12-month / 24-month roadmap, supported by the right platforms and partners, lets CIOs balance innovation speed with AI risk management and assurance.

If you’re a CIO or tech leader in the US, UK or Europe and you don’t yet have a clear AI governance operating model, this is the moment to fix it before regulators or incidents force your hand. Mak It Solutions can help you design and implement a pragmatic, EU AI Act- and NIST-aligned AI governance operating model that fits your culture, stack and budget.

Book a working session with our team to review your current AI landscape, map the gaps, and shape a 90-day plan to stand up committees, workflows and tooling that actually work in production.

FAQs

Q : How much does it typically cost to implement an AI governance operating model in a large enterprise?

A : Costs vary widely, but large enterprises in the US, UK and EU often invest in the low-to-mid seven-figure range over 2–3 years when you include people, processes and tooling. Initial spend usually covers discovery workshops, target operating model design, building the model inventory and integrating workflows into existing GRC/ITSM tools. Ongoing costs then shift to committee operations, AI observability platforms, compliance updates and internal training. Many CIOs reduce cost by reusing existing data governance, model risk and cyber infrastructure rather than standing up entirely new silos.

Q : What are common mistakes CIOs make when standing up an AI governance council for the first time?

A : The most common mistake is treating the AI governance council as a rubber-stamp committee that meets rarely and reviews only a subset of use cases. Others include over-staffing it with IT while under-representing product, operations and HR, and failing to define clear approval thresholds so everything ends up in the council’s queue or nothing does. Some CIOs also skip the documentation step, leaving decisions trapped in meeting minutes rather than being reflected in workflows, model inventories and dashboards. A good council is small, empowered, cross-functional and tied tightly to the operating model.

Q : Can an existing data governance or model risk management function be reused as an AI governance operating model, or do we need something new?

A : You almost never need to start from zero. Existing data governance and model risk management functions are natural foundations for an AI governance operating model, especially in financial services and large enterprises. What you do need is to extend their scope to cover GenAI, AI agents, new data sources, cross-border hosting and the specific requirements of the EU AI Act, GDPR/DSGVO, UK GDPR and NIST AI RMF. That usually means adding GenAI expertise, new intake and approval workflows, updated RACI and closer integration with security, privacy and engineering.

Q : Which tools and platforms are most useful for automating parts of the AI governance lifecycle (e.g., inventories, monitoring, documentation)?

A : Useful tooling typically falls into four buckets:

(1) Lifecycle governance and inventory platforms that track models and approvals;

(2) MLOps and observability tools that monitor performance, drift, cost and quality for ML and GenAI;

(3) Cloud-native controls from providers like AWS, Azure and Google Cloud to manage regions, keys, network boundaries and guardrails; and

(4) GRC / ITSM integrations (for example, on ServiceNow) that connect AI risks and incidents to existing risk registers and workflows. Most enterprises end up with a blend of dedicated AI governance tools and existing platforms they already trust.

Q : How can CIOs demonstrate ROI from AI governance to boards and executive committees in US, UK and European organisations?

A : Boards respond to both risk reduction and value acceleration. CIOs can show fewer or less severe incidents, improved audit outcomes and better regulatory relationships (for example, smoother BaFin or FCA interactions) as tangible risk benefits. On the value side, a clear AI governance operating model typically shortens time-to-approval for new AI initiatives, reduces duplicated work and gives business leaders more confidence to invest in higher-impact AI. Tracking metrics such as lead time from idea to deployment, percentage of AI models with full documentation, and proportion of GenAI use cases running on approved platforms makes the ROI visible and defensible.
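The three metrics named in this answer are straightforward to compute from a model inventory export. The sketch below assumes a hypothetical `UseCaseRecord` with the minimum fields needed; the field names and the choice of the median for lead time are illustrative, not a prescribed reporting standard.

```python
from dataclasses import dataclass
from datetime import date
from statistics import median
from typing import Optional


@dataclass
class UseCaseRecord:
    idea_logged: date
    deployed: Optional[date]  # None while the use case is still in the pipeline
    fully_documented: bool
    is_genai: bool
    on_approved_platform: bool


def median_lead_time_days(records: list[UseCaseRecord]) -> Optional[float]:
    """Median days from idea to deployment, over deployed use cases only."""
    days = [(r.deployed - r.idea_logged).days for r in records if r.deployed]
    return float(median(days)) if days else None


def _pct(part: int, whole: int) -> Optional[float]:
    """Percentage rounded to one decimal place, or None if there is nothing to count."""
    return round(100 * part / whole, 1) if whole else None


def documentation_coverage(records: list[UseCaseRecord]) -> Optional[float]:
    """Share of all use cases with full documentation."""
    return _pct(sum(r.fully_documented for r in records), len(records))


def genai_on_approved_platforms(records: list[UseCaseRecord]) -> Optional[float]:
    """Share of GenAI use cases running on approved platforms."""
    genai = [r for r in records if r.is_genai]
    return _pct(sum(r.on_approved_platform for r in genai), len(genai))
```

Tracking these quarter over quarter, rather than as one-off figures, is what lets a board see whether governance is speeding delivery up or slowing it down.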