AI Content Authenticity for Arabic GCC Brands

AI content authenticity means proving who created a piece of content, how it has been edited and whether AI was involved, backed by secure, tamper-evident signals rather than guesswork. For Arabic media and GCC brands, it matters because regulators in Saudi Arabia, the UAE and Qatar increasingly expect clear disclosure, strong digital media provenance and deepfake-resistant safeguards around sensitive news, financial and government content.

Introduction

Across Riyadh, Dubai, Abu Dhabi and Doha, Arabic feeds are filling with AI-generated clips, cloned voices and hyper-realistic images, and not all of them are harmless. Ministries, banks and media groups worry that one convincing Arabic deepfake could damage trust in leaders, brands or even national reputation overnight.

AI content authenticity is how GCC organisations prove their Arabic content is genuine, untampered and properly disclosed when AI is involved. Instead of guessing “is this AI?”, teams use digital media provenance, content credentials and cryptographic signatures to show where content came from, who touched it and what changed along the way. This guide connects that approach to Saudi Arabia’s PDPL, UAE cyber rules and Qatar’s banking supervision, and sets out a practical roadmap your teams can start using today.

What Is AI Content Authenticity for Arabic Media in the GCC?

AI content authenticity is the ability to prove the origin, integrity and disclosure status of content, not just to detect whether AI was used. For Arabic media in the GCC, that means giving viewers, regulators and platforms trustworthy signals that a video, article or voice note is genuine, unaltered and clearly labelled when synthetic. This is critical for ministries, banks, telcos and newsrooms that communicate daily in Arabic at national scale.

AI Content Authenticity vs AI Detection

AI detection tools try to answer “was this generated by AI?” using statistical models. They can be useful, but results are probabilistic, easy to bypass and often unreliable on short Arabic clips.

AI content authenticity instead relies on verifiable evidence: cryptographic signatures, secure metadata and content credentials that travel with the file. Think of it as a digital passport attached to the media, rather than a border guard guessing at the door.

Why AI Content Authenticity Matters for Arabic News, Social and Government Portals

Arabic newsrooms in Riyadh and Dubai, government portals in Abu Dhabi and Doha, and customer apps for GCC banks all face the same challenge: if the public can’t trust what they see and hear, engagement and compliance both suffer. Political clips, bank announcements, religious messages and public safety alerts are prime targets for impersonation. Authenticity layers help these institutions show “this really came from us” across websites, apps and social channels.

Key Risks for Gulf Audiences

Deepfake voice scams targeting bank customers, synthetic videos misrepresenting leaders, and fake fatwa clips circulating on encrypted channels are no longer theoretical. They risk financial fraud, social tension and reputational harm for Saudi Arabia, the UAE, Qatar, Kuwait, Bahrain and beyond. For regulators and boards, AI content authenticity is now part of national security and consumer protection, not just marketing hygiene.

How Digital Provenance Works for AI Content

Digital provenance helps Saudi and UAE organisations prove that content is genuine by attaching signed, tamper-evident information at the moment of creation. When it is done properly, anyone who receives the content later, whether a newsroom in Jeddah or a regulator in Abu Dhabi, can verify that an Arabic video or statement has not been secretly changed along the way, supporting AI-generated media transparency.

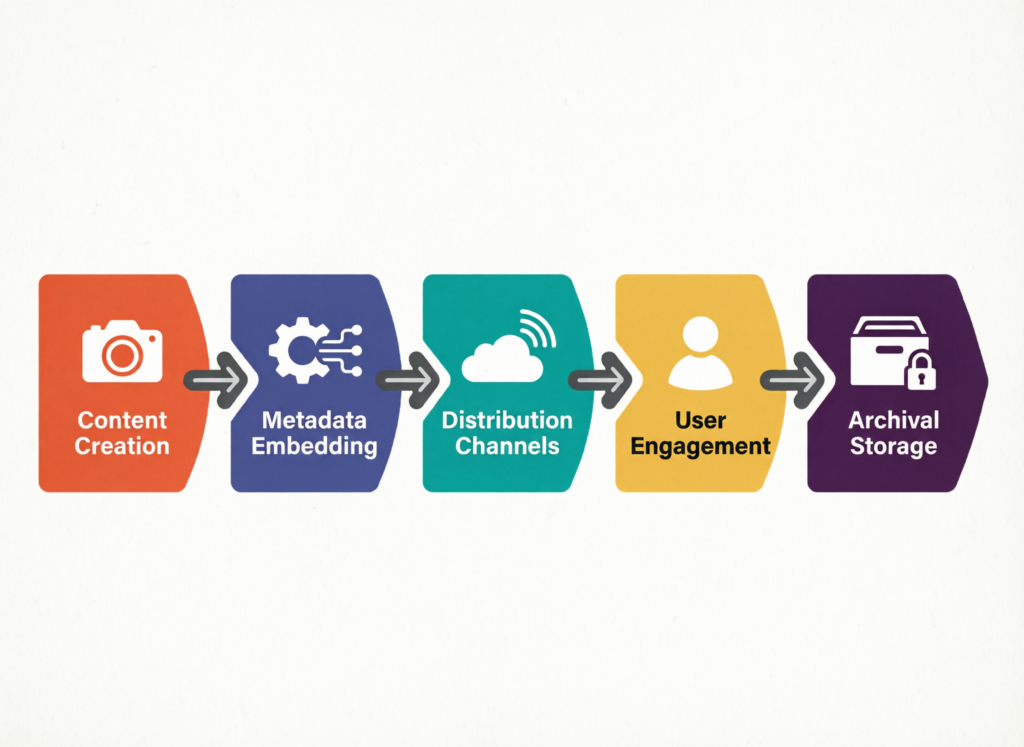

From File to Feed

The basic flow is: creation → signing → publishing → verification. At creation or first ingest, the asset is enriched with secure metadata about who made it, when and on which device or system, and the asset and metadata are signed together using cryptographic keys. That signature and metadata travel with the file into your CMS, content pipelines and social exports. When a user, platform or verification tool later checks the file, any undeclared edit or tampering breaks the signature, so the problem is flagged at the moment of verification.
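The sign-then-verify flow can be sketched in a few lines of Python. This is a minimal sketch under loud assumptions: it uses HMAC-SHA256 from the standard library as a stand-in for a real digital signature, and `SIGNING_KEY`, `sign_asset` and `verify_asset` are hypothetical names. Production provenance systems such as C2PA use asymmetric keys backed by X.509 certificates, so anyone can verify a file without holding the signing secret.

```python
import hashlib
import hmac
import json

# Hypothetical demo key. HMAC stands in for a real signature here; C2PA and
# similar systems use certificate-backed asymmetric keys instead.
SIGNING_KEY = b"demo-key-not-for-production"


def _canonical(claim: dict) -> bytes:
    """Serialise the claim deterministically so signer and verifier agree."""
    return json.dumps(claim, sort_keys=True, ensure_ascii=False).encode("utf-8")


def sign_asset(asset: bytes, metadata: dict) -> dict:
    """Creation/ingest step: bind metadata to the asset hash and sign both."""
    claim = {**metadata, "asset_sha256": hashlib.sha256(asset).hexdigest()}
    signature = hmac.new(SIGNING_KEY, _canonical(claim), hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify_asset(asset: bytes, record: dict) -> bool:
    """Verification step: fail if the media bytes or the metadata changed."""
    claim = record["claim"]
    if hashlib.sha256(asset).hexdigest() != claim.get("asset_sha256"):
        return False  # the file itself was edited after signing
    expected = hmac.new(SIGNING_KEY, _canonical(claim), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, record["signature"])


video = b"raw video bytes"
record = sign_asset(video, {"creator": "غرفة الأخبار", "device": "CMS ingest"})
print(verify_asset(video, record))             # True
print(verify_asset(video + b" edit", record))  # False
```

Because the asset hash sits inside the signed claim, changing either the media or the metadata (for example, the creator name) makes verification fail.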

Content Credentials, CAI and C2PA Explained in Simple Terms

The Content Authenticity Initiative (CAI) and the Coalition for Content Provenance and Authenticity (C2PA) define open standards for this process. “Content credentials” are like a structured, machine-readable log of edits, embedded directly into image, video or audio files together with cryptographic signatures. They record which newsroom, agency or ministry created or edited the content and what was changed, in a way that platforms and verification tools can trust.
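To make “a structured, machine-readable log of edits” concrete, here is a simplified Python sketch of a tamper-evident edit history. It is not the C2PA manifest format: the `EditLog` class and its fields are hypothetical, and the action labels only echo C2PA’s actions vocabulary. Each entry stores the hash of the entry before it, so rewriting or deleting an earlier step breaks the chain; a real system would also sign the latest entry, because the chain alone cannot protect the final one.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import List, Optional


def _digest(entry: dict) -> str:
    """Hash one log entry deterministically."""
    data = json.dumps(entry, sort_keys=True, ensure_ascii=False).encode("utf-8")
    return hashlib.sha256(data).hexdigest()


@dataclass
class EditLog:
    """Hypothetical hash-chained edit history (illustrative, not C2PA)."""
    entries: List[dict] = field(default_factory=list)

    def record(self, actor: str, action: str, detail: str = "") -> None:
        prev: Optional[str] = _digest(self.entries[-1]) if self.entries else None
        self.entries.append({"actor": actor, "action": action, "detail": detail, "prev": prev})

    def is_intact(self) -> bool:
        """Check that every entry points at the hash of its predecessor."""
        for i, entry in enumerate(self.entries):
            expected = _digest(self.entries[i - 1]) if i > 0 else None
            if entry["prev"] != expected:
                return False
        return True


log = EditLog()
log.record("غرفة الأخبار", "c2pa.created", "original interview")
log.record("Dubai desk", "c2pa.edited", "trimmed to 60 seconds")
log.record("Dubai desk", "c2pa.edited", "added Arabic subtitles")
print(log.is_intact())  # True

log.entries[1]["detail"] = "face swapped"  # silent rewrite of history
print(log.is_intact())  # False
```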

Applying Provenance to Arabic Text, Images and Video

For GCC teams, provenance must work for Arabic text and right-to-left layouts as smoothly as it does for English. That means implementations in Arabic news portals, fatwa video platforms, influencer Reels and even WhatsApp-style export flows. UI labels must handle mixed Arabic and English wording and local fonts so users can quickly see whether a clip is “Official”, “AI-assisted” or “Synthetic” without breaking the reading experience.
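A small helper can keep those labels consistent across web, app and social exports. The Python sketch below assumes three statuses matching the labels above; the Arabic wording is a placeholder for your editors to approve, and `Disclosure` and `badge_text` are hypothetical names. Wrapping the Arabic text in the Unicode directional isolates RLI (U+2067) and PDI (U+2069) stops it from reordering neighbouring punctuation when it appears inside left-to-right English UI.

```python
from enum import Enum

# Unicode bidi controls: Right-to-Left Isolate and Pop Directional Isolate.
RLI, PDI = "\u2067", "\u2069"


class Disclosure(Enum):
    """Hypothetical label set; Arabic wording must be approved by editors."""
    OFFICIAL = ("رسمي", "Official")
    AI_ASSISTED = ("بمساعدة الذكاء الاصطناعي", "AI-assisted")
    SYNTHETIC = ("مولّد بالذكاء الاصطناعي", "Synthetic")


def badge_text(status: Disclosure, bilingual: bool = True) -> str:
    """Build an Arabic-first badge, optionally followed by the English label."""
    arabic, english = status.value
    isolated = f"{RLI}{arabic}{PDI}"  # keeps RTL text self-contained in LTR UI
    return f"{isolated} · {english}" if bilingual else isolated


print(badge_text(Disclosure.AI_ASSISTED))
print(badge_text(Disclosure.SYNTHETIC, bilingual=False))
```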

GCC Regulations, PDPL and Deepfake Rules Shaping AI Content Authenticity

Regulators across the GCC are moving toward clearer expectations on synthetic media, transparency and data protection. For AI content authenticity, that means aligning your provenance approach with Saudi PDPL, UAE cyber and media laws, and Qatar’s financial supervision and digital ID initiatives.

PDPL, SDAIA Guidance and Sectoral Rules

In Saudi Arabia, the PDPL, together with guidance from the Saudi Data and Artificial Intelligence Authority (SDAIA) and the National Data Management Office (NDMO), pushes organisations toward stronger data governance and accountability for digital services. Financial institutions supervised by the Saudi Central Bank (SAMA) increasingly treat deepfake-resistant onboarding, KYC and communications as part of operational risk. Authenticity signals, clear disclosure and data residency for AI pipelines all matter when you’re operating regulated portals or Arabic customer apps.

Cyber Laws, Deepfake Restrictions and Media Rules

The UAE’s cybercrime legislation and media rules restrict harmful use of synthetic media, especially around national figures, symbols and social cohesion. The Telecommunications and Digital Government Regulatory Authority (TDRA) and media regulators expect responsible digital communication, including for AI-generated content. For agencies in Dubai and enterprises in Abu Dhabi, that translates into policies on labelling AI images, careful use of national landmarks, and traceable approval workflows inside your CMS and mobile apps.

Qatar and the Wider GCC

Qatar’s financial and digital strategies, driven by the Qatar Central Bank (QCB) and Qatar Digital ID initiatives, tie identity, KYC/AML and secure digital channels together. Deepfake-resistant video banking, authenticated ministry announcements and tamper-evident statements are becoming baseline expectations. Similar trends appear in Kuwait, Bahrain and Oman, where supervisors increasingly look for watermarking, provenance or other content integrity signals in high-risk journeys.

Tools, Standards and Solution Types for AI Content Authenticity in the GCC

For GCC buyers, the AI content authenticity stack usually combines detection, watermarking, provenance and identity layers. The right mix depends on whether you’re a newsroom in Riyadh or Dubai, a government communication unit in Abu Dhabi or Doha, or a regional bank.

The Content Authenticity Stack

Detection tools support synthetic media and deepfake detection after the fact. Watermarking embeds subtle patterns into AI-generated media. Provenance and content credentials provide tamper-evident history from creation. Identity verification ties content to a verified person or entity via systems like UAE Pass or Qatar Digital ID. For high-risk use cases, such as fintech or cross-border logistics, you typically need at least provenance + identity + selective detection.
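The “at least provenance + identity + selective detection” rule of thumb lends itself to a simple automated check per channel. This is a minimal Python sketch; the tier names, the `standard` baseline and `missing_controls` are hypothetical and should come from your own risk assessment.

```python
from typing import Dict, FrozenSet, Iterable

# Hypothetical baselines. Only the "high" tier reflects the rule of thumb
# in the text; the "standard" tier is an illustrative assumption.
MINIMUM_CONTROLS: Dict[str, FrozenSet[str]] = {
    "standard": frozenset({"provenance"}),
    "high": frozenset({"provenance", "identity", "detection"}),
}


def missing_controls(risk_tier: str, deployed: Iterable[str]) -> FrozenSet[str]:
    """Return the controls a channel still needs for its risk tier."""
    return MINIMUM_CONTROLS[risk_tier] - frozenset(deployed)


# A bank's video-announcement channel that only signs and watermarks so far:
print(sorted(missing_controls("high", ["provenance", "watermarking"])))
# ['detection', 'identity']
```

Extra controls such as watermarking never cause a failure; the check only reports what is missing from the baseline.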

Implementing C2PA Content Credentials and Provenance APIs

Technically, you integrate C2PA-compatible tools into your editors, DAM, CMS and publishing pipelines, often through SDKs and APIs. Cloud-based options can be hosted in AWS Bahrain, Azure UAE Central or GCP Doha to meet data residency expectations. Many teams pair this with secure web development and business intelligence dashboards that monitor authenticity signals across channels.
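Data residency is easy to check automatically before a pipeline goes live. The Python sketch below assumes the provider region identifiers for the three regions named above (AWS `me-south-1` for Bahrain, Azure `uaecentral` for UAE Central, GCP `me-central1` for Doha); confirm them against current provider documentation. `StorageTarget` and `residency_violations` are hypothetical names, and the example deliberately includes backups and signing keys, which are easy to overlook.

```python
from dataclasses import dataclass
from typing import Dict, List, Set

# Approved regions, limited to the three named in the text.
APPROVED_REGIONS: Dict[str, Set[str]] = {
    "aws": {"me-south-1"},     # AWS Middle East (Bahrain)
    "azure": {"uaecentral"},   # Azure UAE Central
    "gcp": {"me-central1"},    # Google Cloud Doha
}


@dataclass
class StorageTarget:
    name: str       # e.g. "raw-media", "signing-keys", "audit-logs", "backups"
    provider: str   # "aws", "azure" or "gcp"
    region: str


def residency_violations(targets: List[StorageTarget]) -> List[str]:
    """List every target stored outside the approved GCC regions."""
    return [
        f"{t.name}: {t.provider}/{t.region}"
        for t in targets
        if t.region not in APPROVED_REGIONS.get(t.provider, set())
    ]


targets = [
    StorageTarget("raw-media", "aws", "me-south-1"),
    StorageTarget("signing-keys", "azure", "uaecentral"),
    StorageTarget("backups", "aws", "eu-west-1"),  # easy to miss
]
print(residency_violations(targets))  # ['backups: aws/eu-west-1']
```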

Evaluating Vendors for Media, Government and Banks in the GCC

When evaluating vendors, GCC buyers should ask about Arabic support, right-to-left layouts, regulator alignment (PDPL, TDRA, SAMA, ADGM, DIFC) and how tools handle regional threat models. A Riyadh fintech operating from ADGM or DIFC, a Dubai media agency scaling AI-assisted campaigns and a Doha ministry newsroom will weight these criteria differently, but all need clear SLAs and proven integrations with their existing digital marketing and mobile app development stacks.

Implementing AI Content Authenticity in GCC Organisations

Practically, GCC companies can start improving AI content authenticity in weeks by mapping high-risk content, designing clear policies and piloting tools. The goal is not perfection on day one, but measurable reduction in fraud and confusion for Arabic users.

Map Your High-Risk Arabic Content and Stakeholders

List high-risk journeys: digital onboarding, leadership speeches, religious content, investment advice, emergency alerts, payment approvals and signature-like voice notes. Map which teams own each touchpoint (legal, compliance, IT/security, marketing, PR and call centres) across Riyadh, Dubai, Abu Dhabi, Doha and Jeddah. This gives you a realistic view of where to embed provenance and where human review must stay in the loop.
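Teams often keep this map as a simple risk register. The Python sketch below scores each journey with a conventional impact × likelihood product; the 1 to 5 scales, the review threshold of 15 and the example entries are illustrative assumptions, not regulatory requirements, and `Journey` and `triage` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Journey:
    name: str
    owner: Optional[str]  # accountable team, or None if nobody owns it yet
    impact: int           # 1 (minor) to 5 (severe): assumed scale
    likelihood: int       # 1 (rare) to 5 (frequent): assumed scale

    @property
    def score(self) -> int:
        return self.impact * self.likelihood


def triage(journeys: List[Journey], review_threshold: int = 15) -> Tuple[List[str], List[str]]:
    """Return (journeys with no owner, journeys needing human review), riskiest first."""
    unowned = [j.name for j in journeys if not j.owner]
    ranked = sorted(journeys, key=lambda j: j.score, reverse=True)
    needs_review = [j.name for j in ranked if j.score >= review_threshold]
    return unowned, needs_review


register = [
    Journey("leadership speeches", "PR", impact=5, likelihood=3),
    Journey("payment approvals by voice note", None, impact=5, likelihood=4),
    Journey("product explainers", "marketing", impact=2, likelihood=3),
]
print(triage(register))
# (['payment approvals by voice note'], ['payment approvals by voice note', 'leadership speeches'])
```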

Design Policies for Disclosure, Watermarking and Approval

Next, define when AI use must be disclosed, how labels appear in Arabic and English, and when watermarking or provenance is mandatory. Align those policies with Saudi PDPL, UAE cyber/media rules and Qatar financial guidance, referencing bodies like SAMA, TDRA, QCB and ADGM. Document this in your governance playbook and align it with existing web design and Laravel or Flask development standards.
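Writing the policy down as code makes it testable and keeps web, app and social channels consistent. In this minimal Python sketch, the rules themselves (label any AI involvement, watermark fully AI-generated media, require provenance for official or sensitive content) are hypothetical placeholders for whatever your legal and compliance teams decide; `SENSITIVE_TOPICS`, `Obligations` and `obligations_for` are likewise illustrative names.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical set of topics your policy treats as sensitive.
SENSITIVE_TOPICS = {"religion", "leadership", "finance", "elections", "public-safety"}


@dataclass(frozen=True)
class Obligations:
    label: Optional[str]   # disclosure label shown to users, if any
    watermark: bool        # embed a watermark in the media
    provenance: bool       # attach signed content credentials


def obligations_for(ai_use: str, topic: str, official: bool) -> Obligations:
    """Map content attributes to disclosure duties (placeholder rules)."""
    if ai_use not in {"none", "assisted", "generated"}:
        raise ValueError(f"unknown ai_use: {ai_use!r}")
    label = {"none": None, "assisted": "AI-assisted", "generated": "Synthetic"}[ai_use]
    return Obligations(
        label=label,
        watermark=ai_use == "generated",
        provenance=official or topic in SENSITIVE_TOPICS,
    )


print(obligations_for("generated", "finance", official=False))
# Obligations(label='Synthetic', watermark=True, provenance=True)
```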

Integrate Tools, Run Pilots and Measure Trust

Finally, connect your CMS, media pipelines and apps to provenance tools and identity systems. Run focused pilots: for example, a corporate newsroom in Riyadh, a Dubai e-commerce app or a Doha SME portal hosted in a regional cloud region. Measure fraud attempts, dispute rates, complaint volumes and user trust survey scores before and after the pilot. Then scale across other channels using agile sprints.
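Comparing the baseline with the pilot period is simple arithmetic, change % = (after − before) / before × 100, but writing it down keeps every team measuring the same way. The Python sketch below applies that formula per metric; the metric names and figures are invented for illustration, and each metric carries a flag because a fall is good for complaints but bad for trust scores.

```python
from typing import Dict, Tuple

# Invented example figures: metric -> (baseline, pilot, higher_is_better)
METRICS: Dict[str, Tuple[float, float, bool]] = {
    "complaints_per_month": (120, 84, False),
    "dispute_rate_pct": (2.5, 2.0, False),
    "trust_score_out_of_5": (3.6, 4.0, True),
}


def pilot_report(metrics: Dict[str, Tuple[float, float, bool]]) -> Dict[str, str]:
    """Percentage change from baseline to pilot, tagged by direction."""
    report: Dict[str, str] = {}
    for name, (before, after, higher_is_better) in metrics.items():
        if before == 0:
            report[name] = "n/a (zero baseline)"  # avoid dividing by zero
            continue
        change = (after - before) / before * 100
        improved = change > 0 if higher_is_better else change < 0
        verdict = "improved" if improved else "not improved"
        report[name] = f"{change:+.1f}% ({verdict})"
    return report


for metric, summary in pilot_report(METRICS).items():
    print(metric, summary)
```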

Building User Trust in AI-Generated Arabic Content

Technology alone doesn’t create trust; the way you label and present AI-assisted Arabic content matters just as much. GCC users respond best when disclosures are clear, respectful and consistent across web, app and social experiences.

Clear Labelling and Arabic-First UX for AI-Generated Content

Decide on short, culturally sensitive phrases in Arabic (and English where needed) to label AI-generated or AI-assisted posts. Place labels close to headlines or avatars, with intuitive icons that also work in dark mode and right-to-left layouts. Mobile-first UX, especially for Jeddah retail brands and logistics players, should avoid clutter while making authenticity indicators easy to notice and tap for details.

Human-in-the-Loop Workflows for Sensitive GCC Topics

For religion, national symbols, leadership communications and financial advice, keep humans in the loop. That could mean editors in Riyadh reviewing AI-drafted statements, Sharia or subject-matter experts validating complex fatwa or finance content, and compliance officers signing off before publication. AI can draft and pre-screen, but final responsibility stays with named people whose roles are clear.
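The rule that final responsibility stays with named people can be enforced in the publishing pipeline itself. This minimal Python sketch assumes each sensitive topic maps to a set of required reviewer roles; the roles, the mapping, the editor-only default for other topics and `can_publish` are all hypothetical and would come from your own governance playbook.

```python
from typing import Dict, Set

# Hypothetical mapping from sensitive topic to roles that must sign off.
REQUIRED_SIGNOFFS: Dict[str, Set[str]] = {
    "religion": {"editor", "sharia_reviewer"},
    "finance": {"editor", "compliance"},
    "leadership": {"editor", "compliance", "communications_head"},
}


def can_publish(topic: str, approvals: Dict[str, str]) -> bool:
    """approvals maps role -> named approver; every required role needs a name."""
    required = REQUIRED_SIGNOFFS.get(topic, {"editor"})  # assumed default
    return all(approvals.get(role, "").strip() for role in required)


draft_approvals = {"editor": "Noura"}
print(can_publish("finance", draft_approvals))                           # False
print(can_publish("finance", {**draft_approvals, "compliance": "Khalid"}))  # True
```

A blank or whitespace-only name counts as a missing sign-off, so an AI-drafted statement cannot go out until every required person is recorded.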

Measuring Digital Trust

Define KPIs: user trust surveys, sentiment on key posts, complaint volumes, fraud attempts blocked, dispute resolution times and escalation counts to regulators. Visualise these in business intelligence dashboards that leadership reviews regularly. Over time, link progress to strategic programmes such as Saudi Vision 2030 or national digital transformation dashboards.

The Future of AI Content Authenticity in the GCC

The direction of travel is clear: AI content authenticity is moving from “nice to have” to mandatory in sensitive sectors. GCC organisations that invest early will be better aligned with both local regulators and emerging global standards.

From Optional to Mandatory

Expect mandatory provenance, watermarking or disclosure rules for high-risk areas such as finance, healthcare, elections and government communications. Kuwait, Bahrain and Oman are likely to follow Saudi Arabia, the UAE and Qatar in tightening expectations around synthetic media in Arabic, especially where national reputation is involved.

Collaboration Between Regulators, Media and Tech Providers

Regulators, broadcasters, telcos and startups will need shared taxonomies, labels and technical standards. Joint pilots, for example between a broadcaster in Dubai, a telco in Riyadh and a cloud provider in Doha, can test interoperable watermarking and provenance signals across platforms.

How GCC Organisations Can Stay Ahead of Global Standards

By embracing CAI/C2PA-style initiatives early, hosting solutions in regional clouds and aligning UX with global best practices (such as those promoted by bodies like the […]), GCC organisations can position Saudi Arabia, the UAE and Qatar as leaders in Arabic digital trust. Partnering with experienced services teams to integrate these layers into existing stacks speeds that journey.

Concluding Remarks

AI content authenticity is no longer a theoretical topic for conferences; it is a practical requirement for any GCC organisation publishing Arabic content at scale. Digital provenance, content credentials and thoughtful UX give your audiences confidence that what they see and hear from you is genuine and clearly disclosed.

The good news: standards like C2PA, regional cloud regions and mature development and analytics capabilities already exist today. The next step is to translate them into a concrete roadmap for your ministry, bank, telco or brand.

This article is for general information only and does not constitute legal, regulatory or financial advice. Always consult your legal and compliance teams before making decisions.

If you’re responsible for Arabic content in a ministry, bank, telco or enterprise and want to make AI content authenticity real, not just a slide, this is the moment to move. Mak It Solutions can help you assess your current risk surface, design GCC-aligned authenticity policies, and integrate provenance and labelling into your web, mobile and data stacks.

Explore our core services, or reach out to our team to request a tailored workshop focused on Saudi, UAE and Qatar requirements, including PDPL, cyber rules and sector guidance. Together, we can turn AI content authenticity into a competitive trust advantage for your organisation.

FAQs

Q : Is AI-generated marketing content allowed under Saudi PDPL and sector rules?

A : Yes, AI-generated marketing content can be used under Saudi PDPL if you respect data protection, consent and transparency requirements. Organisations should disclose when AI is used to generate or personalise content, avoid processing more personal data than necessary, and ensure any profiling aligns with PDPL and Saudi Vision 2030 priorities. In regulated sectors supervised by SAMA, additional marketing and fair-treatment rules apply, so banks and fintechs should align AI campaigns with both PDPL and sector circulars, and maintain human oversight over targeting and messaging.

Q : Do UAE laws require labels or watermarks on AI-generated images of national leaders or landmarks?

A : While UAE laws do not spell out one single standard label, cybercrime and media regulations restrict misleading or offensive use of images of national leaders, symbols and landmarks. In practice, marketing agencies and enterprises in Dubai and Abu Dhabi should clearly label AI-generated images and avoid implying official endorsement. Watermarking or content credentials are strong ways to show AI involvement and deter misuse. TDRA and media regulators expect responsible digital conduct, so proactive disclosure can reduce legal and reputational risk for brands operating in the UAE.

Q : How can Qatar banks and fintechs use AI content authenticity to reduce voice and video deepfake fraud?

A : Qatar banks and fintechs can combine strong KYC/AML controls from QCB with AI content authenticity tools to secure digital channels. For example, they can require verified in-app messaging instead of unsecured voice notes, watermark or sign all official video communications, and use provenance plus biometric checks for remote onboarding. Synthetic media and deepfake detection tools can run in the background to flag suspicious calls or uploaded clips. Clear customer education in Arabic, and collaboration with Qatar Digital ID initiatives, can further reduce social-engineering and impersonation risk.

Q : What data residency considerations apply when GCC organisations use cloud-based AI content authenticity tools?

A : When using cloud-based tools, GCC organisations must consider where content and logs are stored and processed. Many choose regional cloud regions such as AWS Bahrain, Azure UAE Central or GCP Doha to align with data-residency expectations from PDPL, TDRA, QCB and other regulators. Sensitive sectors, such as finance, health and government, may require that cryptographic keys and raw media never leave the GCC. Due diligence should cover sub-processors, backup locations and incident-response obligations, and be aligned with internal data-classification frameworks overseen by legal, compliance and information-security teams.

Q : Can GCC government entities publish Arabic AI-generated content on social media if it is clearly disclosed as synthetic?

A : Yes, many GCC government entities can use AI-generated Arabic content for non-sensitive purposes such as educational explainers or accessibility enhancements, provided it is clearly disclosed and does not mislead audiences. Best practice is to label such posts as “AI-assisted” or “synthetic” in Arabic and English, maintain human review for accuracy and tone, and avoid using AI for highly sensitive statements about policy, security or leadership. Aligning with national digital strategies and guidance from bodies such as SDAIA, TDRA or QCB helps show regulators that AI is being used responsibly.