AI Content Moderation: From Toxicity to Trust

AI content moderation uses machine learning to automatically detect, flag, label or remove harmful or non-compliant user content across text, images, audio and video, usually in combination with human reviewers. Done well, it helps platforms in the US, UK and EU reduce misinformation, deepfakes and toxicity while meeting laws like the DSA, UK Online Safety Act and the EU AI Act.

Introduction

In the last few years, every major region from Washington D.C. to London and Brussels has seen deepfake scandals, viral conspiracy theories and coordinated disinformation campaigns around elections, wars and public health. Platforms that once treated “trust and safety” as a cost centre now face multi-million-euro fines, public hearings and app store pressure if they get content moderation wrong.

AI content moderation is the use of machine learning models to automatically detect, flag, label or remove harmful or non-compliant user-generated content across text, images, audio and video. Regulators care because these systems shape elections and public debate; brands care because toxicity and scams destroy customer trust; platforms care because backlogs and 24/7 risk make all-human review impossible at scale.

This guide looks at what AI content moderation is, how it works on social and community platforms, where it fails, how it links to laws like the DSA, UK Online Safety Act and EU AI Act, and how teams in the US, UK, Germany and wider Europe can design a compliant hybrid AI–human moderation workflow.

What Is AI Content Moderation?

AI content moderation uses machine learning models to automatically detect, flag, label or remove harmful or non-compliant user-generated content across text, images, audio and video, usually alongside human reviewers. In practice, this means classifiers and detection models sit in the posting, reporting and recommendation pipelines, helping trust and safety teams scale decisions while documenting how they handle risk.

For anyone searching “how does AI content moderation detect misinformation and toxicity”, the short version is: models convert content into numerical features, score it against policies (e.g., hate speech, misinformation, self-harm), and then either allow, block or queue items for human review.
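
As a rough illustration of that flow, the sketch below turns text into token counts and scores them against per-policy term weights. The weights, the policy names and the `featurize` and `score_text` helpers are hypothetical and exist only for this example; production systems rely on learned models rather than hand-written term lists.

```python
import re
from collections import Counter

# Hypothetical per-policy term weights; a real system would learn these from labelled data.
POLICY_WEIGHTS = {
    "SPAM": {"free": 0.4, "winner": 0.5, "click": 0.3},
    "SELF_HARM": {"hurt": 0.5, "myself": 0.4},
}


def featurize(text: str) -> Counter:
    """Convert text into numerical features (lower-cased token counts)."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def score_text(text: str) -> dict[str, float]:
    """Score the features against each policy, capping every score at 1.0."""
    features = featurize(text)
    scores = {}
    for policy, weights in POLICY_WEIGHTS.items():
        raw = sum(weights.get(token, 0.0) * count for token, count in features.items())
        scores[policy] = min(raw, 1.0)
    return scores
```

The per-policy scores would then feed the allow, block or queue decision described above.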

From Manual Review to Automated Content Moderation

Before AI, most platforms relied on large human teams, often at business process outsourcing (BPO) providers, manually reviewing posts, images and reports. As social media, gaming and creator platforms grew into billions of daily posts, this model broke: backlogs grew, 24/7 coverage across time zones became table stakes, and multilingual content outpaced the language skills of any single team.

AI content moderation emerged to:

Pre-filter obviously benign or obviously harmful items

Reduce the queue size for human moderators

Provide consistent application of often complex policies

Even smaller communities in Austin or Manchester now serve global user bases in dozens of languages, where multilingual toxicity detection and automated triage are essential just to keep up.

How AI Content Moderation Works on Social Media Platforms

On modern social platforms, AI content moderation is typically embedded across multiple layers:

Text classifiers: detect hate speech, harassment, spam, threats, self-harm, NSFW and more in posts, comments, DMs and usernames.

Computer vision models: recognise nudity, graphic violence, extremist symbols or deceptive edits in images and video.

Audio and voice models: transcribe and analyse voice chats, Spaces, live streams and podcasts.

Multimodal LLMs: jointly reason over text, image and sometimes metadata to catch more subtle harms and context.

Real-time streaming filters: moderate live chat for gaming and creator platforms where latency must be under a few hundred milliseconds.

These systems use a mix of signals:

The content itself (text, pixels, audio waveforms)

User reports and block/mute activity

Recommendation and distribution signals (e.g., content that is pushed to many minors or rapidly goes viral)

Risk scores per user, topic or domain
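
One way to picture how these signals come together is a weighted combination into a single risk score. The sketch below is illustrative only: the `Signals` fields, the normalisation caps (10 reports, 1,000 views per minute) and the weights are all assumptions, not values taken from any platform.

```python
from dataclasses import dataclass


@dataclass
class Signals:
    model_score: float     # classifier output for the content itself, 0..1
    report_count: int      # user reports received for this item
    reach_velocity: float  # views per minute, used as a proxy for virality
    author_risk: float     # prior risk score for the author, 0..1


def combined_risk(s: Signals) -> float:
    """Blend the signals into one 0..1 risk score using hypothetical weights."""
    report_signal = min(s.report_count / 10, 1.0)        # saturate at 10 reports
    velocity_signal = min(s.reach_velocity / 1000, 1.0)  # saturate at 1,000 views/min
    risk = (
        0.6 * s.model_score
        + 0.2 * report_signal
        + 0.1 * velocity_signal
        + 0.1 * s.author_risk
    )
    return round(min(risk, 1.0), 3)
```

In practice the weights would be tuned or learned against reviewed outcomes rather than fixed by hand.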

Most platforms use a combination of cloud ecosystems (Google Cloud, AWS, Azure) plus specialist vendors like Hive, Spectrum Labs, GetStream, Checkstep and Utopia Analytics for more targeted models or custom policies.

Where AI Fits in a Hybrid AI–Human Moderation Workflow

In a hybrid AI–human content moderation workflow, AI provides the first line of defence and humans handle edge cases, escalations and policy interpretation.

A typical setup:

Auto-approve: content below a low-risk threshold is let through, possibly with lightweight logging.

Auto-block: content above a high-risk threshold (e.g., clear CSAM signals or explicit terrorist propaganda) is blocked and sometimes reported to authorities.

Grey-area queue: content in the middle goes to human reviewers in BPOs or in-house trust and safety teams following strict SLAs.
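
The three-way routing above can be sketched as a small function. The threshold values (0.2 and 0.9) and the `Action` names are placeholders chosen for illustration; real thresholds are tuned per policy and reviewed regularly.

```python
from enum import Enum


class Action(Enum):
    APPROVE = "auto_approve"
    BLOCK = "auto_block"
    REVIEW = "human_review"


def triage(risk_score: float, low: float = 0.2, high: float = 0.9) -> Action:
    """Route an item by risk score: approve below `low`, block at or above `high`."""
    if not 0.0 <= risk_score <= 1.0:
        raise ValueError("risk_score must be between 0 and 1")
    if risk_score < low:
        return Action.APPROVE
    if risk_score >= high:
        return Action.BLOCK
    # Everything in between is a grey-area case for human reviewers.
    return Action.REVIEW
```

Widening the gap between `low` and `high` sends more items to humans, which trades reviewer workload for fewer automated mistakes.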

Specialist BPOs like Conectys still play a major role for 24/7 coverage and complex languages, especially for US, UK and EU-27 platforms that need native fluency in niche dialects.

Quick comparison

| | AI Content Moderation | Human Moderators |
|---|---|---|
| Speed & scale | Processes millions of items per minute | Limited by team size and shifts |
| Consistency | Applies policies the same way every time | Can drift or vary between reviewers |
| Context & nuance | Still struggles with sarcasm, satire, politics | Better at local context, cultural references and edge cases |
| Cost | High upfront, lower marginal cost | Ongoing per-review or per-FTE cost |
| Wellbeing impact | Shields humans from the worst content | High exposure to traumatic content without strong safeguards |

The sweet spot is trust and safety automation that maximises AI for speed and documentation, while using humans for context, appeals and governance.

AI for Misinformation, Deepfakes and Content Integrity

AI can detect patterns of misleading narratives, synthetic media artefacts and coordinated inauthentic behaviour, but it still struggles with context, satire and fast-moving political events. That’s why election integrity teams in San Francisco, London, Berlin or Brussels pair AI systems with human fact-checkers, OSINT analysts and external partners.

AI Misinformation Detection

AI misinformation detection focuses on identifying misleading or false claims, especially around elections, public health and conflicts. Models look for:

Known disinformation narratives and conspiracy tropes

Sudden spikes in low-credibility domains or coordinated posting

Bot-like behaviour and coordinated inauthentic behaviour (CIB) patterns
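
To make the coordinated-posting signal concrete, here is a minimal sketch that flags near-identical text posted by several distinct accounts within a short time window. The function name, the 10-minute window and the three-account minimum are assumptions for illustration; real CIB detection uses far richer behavioural and network features.

```python
from collections import defaultdict


def find_coordinated_clusters(posts, window_seconds=600, min_accounts=3):
    """Find texts posted by at least `min_accounts` distinct accounts within the window.

    `posts` is an iterable of (account_id, unix_timestamp, text) tuples.
    """
    by_text = defaultdict(list)
    for account_id, ts, text in posts:
        # Normalise case and whitespace so trivial variations still match.
        key = " ".join(text.lower().split())
        by_text[key].append((ts, account_id))

    clusters = []
    for key, items in by_text.items():
        items.sort()
        start = 0
        for end in range(len(items)):
            # Shrink the window until it spans at most `window_seconds`.
            while items[end][0] - items[start][0] > window_seconds:
                start += 1
            accounts = {acc for _, acc in items[start:end + 1]}
            if len(accounts) >= min_accounts:
                clusters.append((key, sorted(accounts)))
                break
    return clusters
```

A single account repeating itself does not trigger a cluster here, because the check counts distinct accounts rather than posts.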

During recent US and EU election cycles, platforms and fact-checking networks have flagged large volumes of misleading political posts each week, with AI systems surfacing the riskiest items for human review.

In the UK, political misinformation around parties, manifestos or NHS funding often blends opinion and fact, making pure automation risky. Across the EU-27, the EU Code of Practice on Disinformation and the Digital Services Act (DSA) push very large online platforms to assess and mitigate systemic risks around disinformation, media pluralism and electoral processes.

Fact-checking partners in Dublin, Paris or Madrid feed verdicts back into models so “fake news” patterns get caught earlier, but AI remains a decision support tool, not an arbiter of truth.

Deepfake Detection and Content Authenticity Standards

Deepfake detection models look for:

Inconsistent facial landmarks, lighting or shadows

Lip-sync mismatches and artefacts in compressed video

Audio fingerprints and cloned-voice artefacts

Traces of known generation models or tampering in metadata

At the same time, the ecosystem is moving toward content authenticity and provenance:

C2PA (Coalition for Content Provenance and Authenticity) defines an open standard for attaching cryptographically verifiable “content credentials” to images, audio and video so users can see how media was created and edited.

The EU AI Act (Article 50) requires clear labelling of AI-generated or manipulated content, especially deepfakes that could mislead people.

Countries like Spain are moving ahead with national legislation that mandates AI-generated content labelling and empowers AESIA to enforce it with large fines.

For platforms, this becomes a product capability: “AI for content authenticity and provenance” — combining deepfake and synthetic media detection with support for watermarking, C2PA credentials, and clear “AI-generated” labels in feeds and search.
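
A simplified sketch of how such a labelling decision might combine provenance and detection is shown below. It does not call any real C2PA library: the three inputs are assumed to come from upstream components (a disclosure or provenance check, a credential verifier and a deepfake detector), and the label strings and the 0.8 threshold are hypothetical.

```python
def choose_label(
    declared_ai_generated: bool,
    has_valid_credentials: bool,
    detector_score: float,
    threshold: float = 0.8,
) -> str:
    """Pick a user-facing label from provenance metadata and a detector score."""
    if declared_ai_generated:
        # Provenance metadata or uploader disclosure says the media is synthetic.
        return "AI-generated"
    if detector_score >= threshold:
        # No disclosure, but the detector is confident: label cautiously.
        return "Possibly AI-generated"
    if has_valid_credentials:
        return "Content credentials verified"
    return ""
```

Putting the explicit disclosure first reflects a design choice: a declared fact is treated as stronger evidence than a probabilistic detector output.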

Limits of Algorithmic Misinformation Detection

Despite progress, algorithmic misinformation detection has hard limits:

False positives: satire, memes or legitimate dissent flagged as “fake news”.

False negatives: subtle dog-whistling, coded language or brand-new narratives that models haven’t seen.

Adversarial actors: state-backed operations and troll farms that constantly adapt tactics.

Language and context gaps: local dialects in Hamburg or Manchester, or niche political debates, are harder to model.

That’s why AI content moderation cannot be a truth ministry. Under the DSA and Online Safety Act, platforms are expected to have transparent policies, appeals processes and external oversight, not to decide capital-T Truth.

The goal is to reduce clearly harmful manipulation and coordinated deception, not to algorithmically police every contested claim.

AI Toxicity Detection, Hate Speech and Online Harms

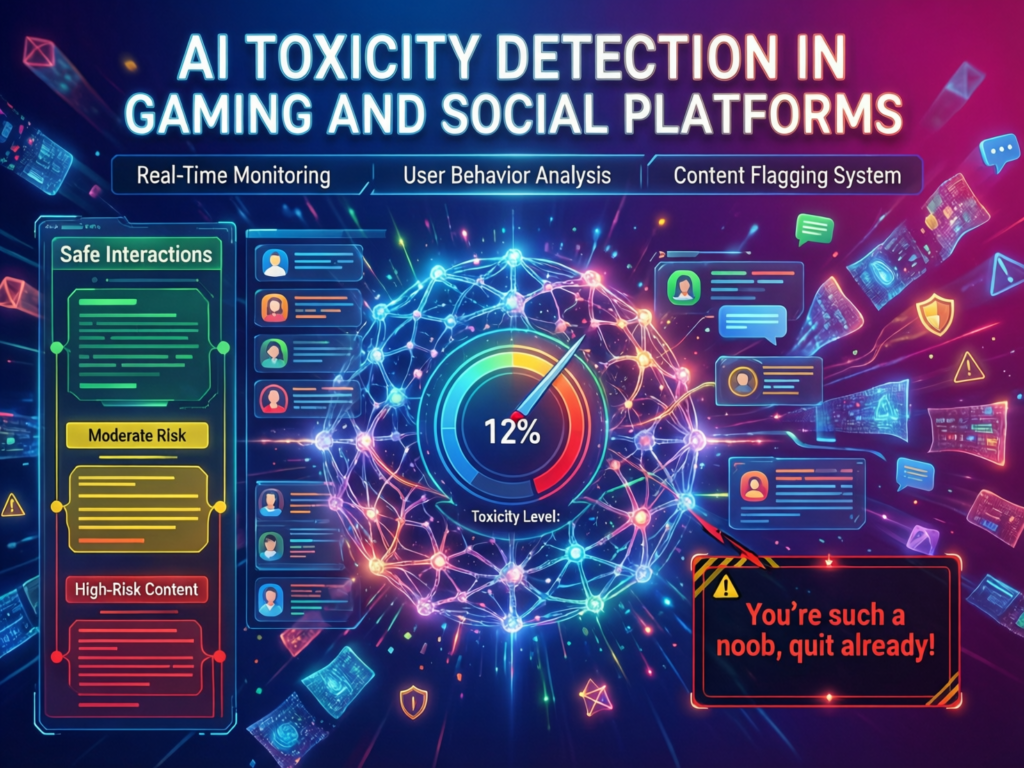

AI toxicity detection models score language for hate, harassment, threats and sexualised or self-harm content, but they need careful thresholds and auditing to avoid bias and over-blocking. Most platforms now run some form of harmful content detection AI across comments, DMs and live chats.

What AI Toxicity Detection Models Actually Look For

At a basic level, AI toxicity detection models are trained on large datasets of labelled text to recognise:

Explicit slurs and hate speech

Threats of violence and self-harm

Harassment patterns over time (e.g., dogpiling or brigading)

“Dog whistles” and coded phrases in specific subcultures

Contextual cues: who is speaking, to whom, and in what community

Use cases include:

Auto-hiding toxic replies to creators in US gaming communities and live streams

Moderating fan forums for UK sports communities where rivalry can tip into hate speech

Protecting youth-oriented communities around education or healthcare content (e.g., NHS-related forums) from bullying and self-harm triggers

Public transparency reports from major platforms often note that a large majority of content removed for hate or harassment is first detected by automated systems, with humans reviewing only a fraction of total items.

Bias, Over-Blocking and Free Expression Concerns

These same models can be biased:

Dialects used by Black communities in the US or migrant communities in Germany may be over-flagged as “toxic”.

Political slogans in protests can get treated as threats.

Reclaimed slurs in LGBTQ+ spaces can confuse classifiers.

Culturally, there are also differences: US debates often emphasise First Amendment culture, while EU rules and the UK Online Safety Act focus on illegal content and on content that is legal but harmful, especially for children.

To maintain legitimacy, platforms need:

Strong appeals processes and clear explanations for decisions

Transparency reports breaking down model performance by region and topic

Collaboration with civil society groups in London, Berlin, Amsterdam or Nordic countries to review training data and edge cases
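
One concrete form a performance breakdown can take is a false positive rate per language or community, computed from human-reviewed samples. The sketch below assumes each record is a `(group, flagged_by_model, actually_violating)` tuple; that record shape and the function name are illustrative assumptions.

```python
from collections import defaultdict


def false_positive_rate_by_group(records):
    """Return, per group, the share of benign items that the model flagged.

    `records` is an iterable of (group, flagged_by_model, actually_violating).
    """
    flagged_benign = defaultdict(int)
    benign_total = defaultdict(int)
    for group, flagged, violating in records:
        if not violating:
            benign_total[group] += 1
            if flagged:
                flagged_benign[group] += 1
    # Groups with no benign samples are omitted rather than reported as 0.
    return {group: flagged_benign[group] / total for group, total in benign_total.items()}
```

A large gap between groups (for example, one dialect flagged twice as often as another on benign content) is a cue to revisit training data and thresholds.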

Designing Safer Communities in US, UK and EU Markets

Designing safer communities is not just “turning up the filters”. Teams can:

Tune thresholds and policies for specific verticals: UK sports forums, German fintech/open banking communities, or EU-27 gaming and creator platforms.

Choose vendors that explicitly support hate speech detection in EU languages and broad multilingual toxicity detection.

Use trust and safety automation to nudge, warn or slow down posting, not only to delete content.

For example, a fintech forum in Frankfurt may prioritise anti-fraud and harassment detection, while a gaming platform in Austin might focus on voice-chat toxicity in English, German and Spanish. In both cases, success is measured less by takedown counts and more by user retention, report rates and community health.

DSA, Online Safety Act, EU AI Act & Global Standards

In the US, UK, Germany and wider Europe, AI content moderation is increasingly judged by whether it helps platforms meet systemic risk, transparency and safety duties under laws like the DSA, UK Online Safety Act and EU AI Act. Boards and regulators now ask: “Show us how your models reduce risk — and how you know they’re working.”

Mapping AI Content Moderation to EU DSA and EU AI Act

For very large online platforms (VLOPs) and search engines, the DSA requires: [Digital Strategy][1]

Regular systemic risk assessments (e.g., disinformation, gender-based violence, threats to electoral processes)

Risk mitigation measures in recommendation and moderation systems

Independent audits and data access to regulators and researchers

AI content moderation tools help by:

Logging decisions with policy tags and risk scores

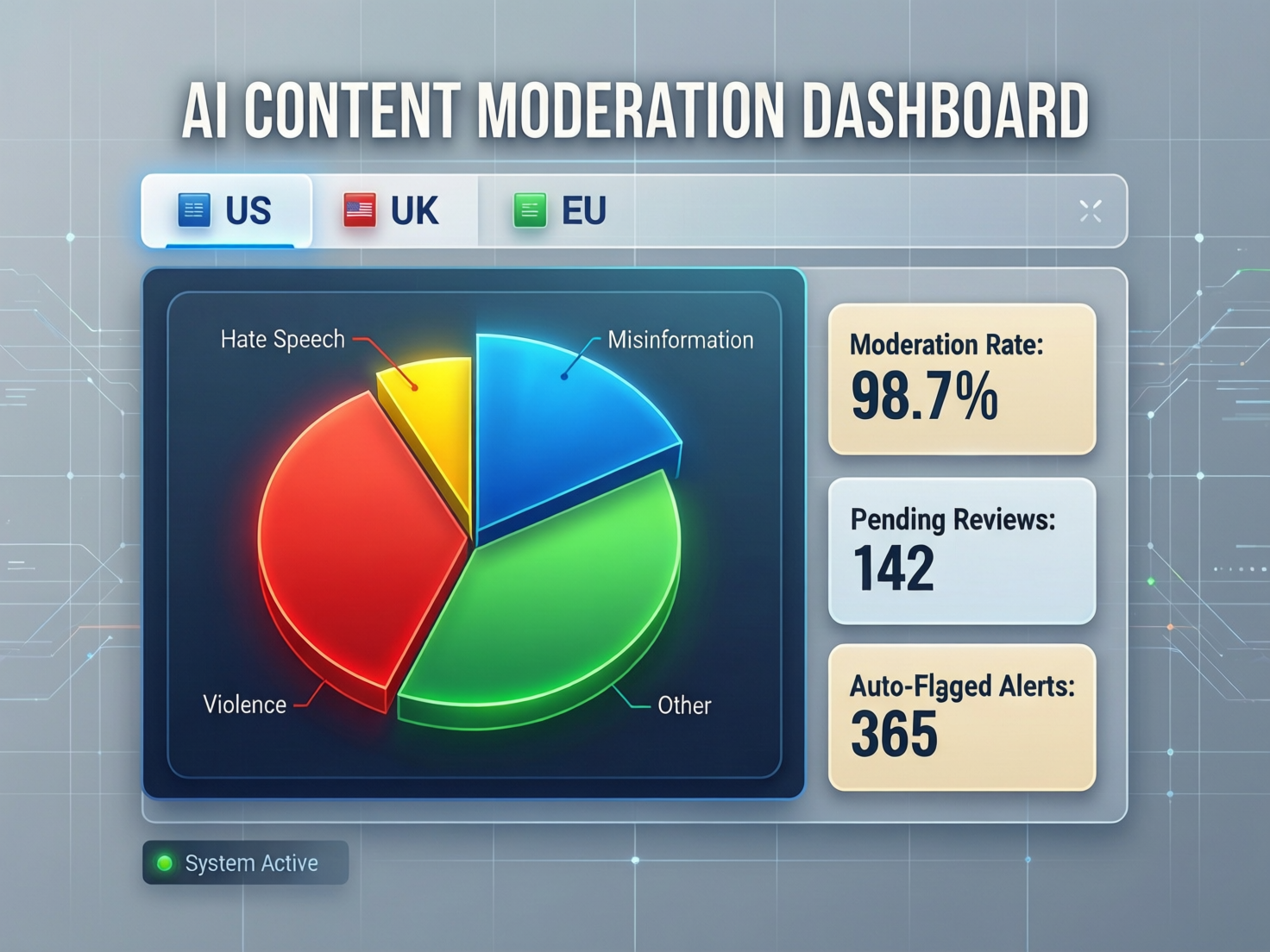

Offering DSA-compliant AI content moderation dashboards to show risk trends

Supporting DORA-style resilience for critical services via robust, auditable pipelines
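
As an example of decision logging with policy tags and risk scores, the sketch below serialises each decision as one JSON line. The field names and the `ModerationDecision` class are assumptions for illustration; neither the DSA nor any vendor prescribes this exact schema.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class ModerationDecision:
    content_id: str
    policy_tag: str     # e.g. "HATE" or "MISINFO_ELECTION"
    risk_score: float   # model score at decision time
    action: str         # e.g. "auto_block" or "human_review"
    decided_by: str     # "model" or "human"
    model_version: str
    decided_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def to_log_line(decision: ModerationDecision) -> str:
    """Serialise a decision as one JSON line with stable key order."""
    return json.dumps(asdict(decision), sort_keys=True)
```

Recording the model version alongside the score makes it possible to explain later why the same content was treated differently before and after a model update.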

Under the EU AI Act, transparency and labelling duties, especially Article 50, require clear marking of AI-generated content and deepfakes, plus explanations of high-risk systems to users and regulators. [Artificial Intelligence Act][3]

Vendors increasingly market “EU AI Act–ready” moderation stacks: clear logging, C2PA support, AI-generated content flags, and governance workflows that map to both DSA and AI Act requirements.

UK Online Safety Act, Ofcom Guidance and Harmful Algorithms

The UK Online Safety Act gives Ofcom wide powers to ensure platforms reduce illegal content and manage “legal but harmful” risks, especially to children.

Ofcom’s guidance and roadmap emphasise:

Safety-by-design in recommendation algorithms

Robust age assurance and content filters

Risk assessments and transparency for how AI moderation and ranking systems impact user exposure to harmful content

For product teams in London or Manchester, that translates into roadmaps that:

Integrate AI content moderation with recommendation ranking changes

Provide granular controls (e.g., “safer mode” feeds for teens)

Log algorithmic changes and run safety experiments regulators can inspect

US, Germany and Sectoral Compliance

Outside Europe, compliance is more sectoral:

US: health platforms must align with HIPAA privacy rules; fintechs and payment apps must stay PCI DSS– and SOC 2-friendly, ensuring moderation logs don’t leak sensitive cardholder or health data.

Germany: platforms supervised by BaFin or BNetzA need GDPR-compliant (DSGVO-konforme) AI content moderation, with strict data residency and retention policies.

Sector bodies like the NHS, Open Banking and AI authorities such as AESIA in Spain increasingly expect clear documentation of how moderation systems treat sensitive data and high-risk content.

For Mak It Solutions clients, this often means combining AI content moderation with secure cloud architectures, encrypted logging, regional data residency (e.g., AWS eu-central-1 in Frankfurt, Azure UK South) and clear retention policies aligned with GDPR / UK-GDPR / DSGVO.

Choosing and Implementing AI Content Moderation Tools

As soon as you move beyond basic profanity filters, you’re in build vs buy territory: do you rely on Google Cloud / AWS / Azure safety APIs, or integrate specialised vendors?

Build vs Buy: When to Use Cloud APIs vs Specialised Vendors

Cloud safety APIs (Google Cloud, AWS, Azure) are usually best when:

You’re an early-stage US social media startup wanting “good enough” coverage quickly.

You’re already heavily invested in that cloud and want simple integration.

Your language and harm coverage needs are relatively standard.

Specialist vendors like Hive, Spectrum Labs, GetStream, Checkstep or Utopia Analytics make sense when:

You need high-accuracy, domain-specific models (e.g., fintech fraud in Berlin, sports abuse in UK communities).

You require strong EU data residency and on-prem or virtual private cloud options.

You want professional services and policy consulting, not just APIs.

Cost is not only per-API call: latency, appeals tooling, analytics dashboards and policy iteration speed all affect the total cost of ownership.

Evaluating AI Content Moderation Tools: RFP Checklist

When drafting an RFP or vendor evaluation spreadsheet, include:

Coverage

Does it handle misinformation, deepfakes, toxicity, self-harm and spam — not just a single harm type?

GEO & language support

US English plus EU-27 languages, not only “global English”.

Compliance mappings

Clear product docs for DSA, UK Online Safety Act, GDPR / UK-GDPR, DSGVO, HIPAA, PCI DSS.

Policy controls

Can your trust and safety team manage labels, thresholds and workflows without devs?

Metrics

Precision/recall, time-to-decision, queue sizes, user appeals resolution time, and auditability.

Deployment options

SaaS, VPC, on-prem; data residency in the EEA or UK if needed.

Integrations

With your case management, CRM, data warehouse and BI tools (e.g., a Business Intelligence stack like the one Mak It Solutions implements for analytics clients).
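
For the precision and recall figures in the Metrics item, it helps to agree on the arithmetic before comparing vendors. The sketch below computes both from counts on a human-labelled evaluation set; the function name and the example counts are illustrative only.

```python
def precision_recall(true_positives: int, false_positives: int, false_negatives: int):
    """Compute precision and recall from evaluation counts.

    precision = TP / (TP + FP): how many flagged items were truly violating.
    recall    = TP / (TP + FN): how many violating items were actually flagged.
    """
    flagged = true_positives + false_positives
    violating = true_positives + false_negatives
    precision = true_positives / flagged if flagged else 0.0
    recall = true_positives / violating if violating else 0.0
    return precision, recall


# Hypothetical evaluation: 80 correct flags, 20 wrongful flags, 20 missed violations.
precision, recall = precision_recall(80, 20, 20)
```

With these made-up counts, both values come out at 0.8: one in five flags was wrong, and one in five violations was missed.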

Global spending on trust and safety and AI moderation is expected to reach tens of billions of dollars by the mid-2020s, so a rigorous RFP can easily save significant budget over the life of a platform.

Designing a Production-Ready Hybrid Workflow

To design a production-ready AI content moderation system on Google Cloud or AWS:

Define policies and taxonomies

Work with trust and safety, legal and data protection officers to turn community guidelines into clear labels (e.g., HATE, MISINFO_ELECTION, SELF_HARM).

Ingest and pre-process content

Stream posts, comments, images and video metadata into a moderation pipeline (e.g., using Pub/Sub or Amazon Kinesis).

Run AI models and apply thresholds

Call cloud or vendor APIs; assign scores; auto-approve, auto-block or queue for human review based on thresholds that differ by GEO, age group and surface (feed vs DMs).

Human escalation, QA and red-teaming

Route edge cases to trained reviewers; log decisions; regularly red-team the system with adversarial examples, especially before elections in the US, UK or EU.

Measure, audit and iterate

Feed decisions into a BI layer; monitor disparities across languages; prepare audit packs for regulators (DSA, Ofcom) and internal governance committees.
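
The thresholding step above mentions thresholds that differ by GEO, age group and surface. One possible way to express that is a small override table with wildcard matching, sketched below. The keys, threshold values and the `thresholds_for` helper are hypothetical and not tied to any cloud or vendor API.

```python
DEFAULT_THRESHOLDS = {"low": 0.2, "high": 0.9}

# Hypothetical overrides keyed by (geo, age_group, surface); None acts as a wildcard.
OVERRIDES = {
    ("EU", "minor", None): {"low": 0.1, "high": 0.7},
    (None, None, "dm"): {"low": 0.3, "high": 0.95},
}


def thresholds_for(geo: str, age_group: str, surface: str) -> dict:
    """Return the most specific matching override, falling back to the defaults."""
    request = (geo, age_group, surface)
    best, best_specificity = DEFAULT_THRESHOLDS, -1
    for pattern, value in OVERRIDES.items():
        matches = all(p is None or p == r for p, r in zip(pattern, request))
        if matches:
            # More non-wildcard fields means a more specific rule.
            specificity = sum(p is not None for p in pattern)
            if specificity > best_specificity:
                best, best_specificity = value, specificity
    return best
```

Keeping thresholds in data rather than code lets trust and safety teams adjust them per market without a deployment, and makes each change easy to log for audits.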

Mak It Solutions often helps clients in the USA, UK and Germany connect this workflow to broader engineering and cloud strategies, for example by combining moderation logs with front-end and analytics data to understand how safety changes affect engagement and revenue.

Future of Algorithmic Content Moderation

Algorithmic Content Moderation, Democracy and Platform Power

As AI Overviews and recommendation systems reshape what people see online, questions of bias, decoloniality and democratic accountability get sharper. Parliaments and regulators in Brussels, London and Washington increasingly see platforms as systemic actors whose algorithms can influence elections, public health behaviours and media pluralism, and laws like the DSA reflect that by treating “systemic risk” as a key concept.

Expect more scrutiny of who writes the policies, who audits the models and which voices are at the table when harm definitions are set.

From Reactive Takedowns to Proactive Design

The next phase of online safety and harmful content regulation focuses less on takedowns and more on design:

Adjusting recommendation systems to avoid rabbit holes and spirals of self-harm content

Adding friction to virality (e.g., limits on forwarding, prompts before resharing)

Building transparency and explainability into the stack so regulators and researchers can inspect how AI decisions are made

Ofcom, the European Commission and national authorities are all pushing for more transparency and regular independent audits of algorithmic systems.

Practical Roadmap for US, UK and EU Trust & Safety Teams

A pragmatic roadmap for trust and safety leaders:

Assess risks and maturity: benchmark current policies, tooling and metrics across US, UK and EU-27 user bases.

Align with regulators: map obligations under DSA, Online Safety Act, EU AI Act, GDPR / UK-GDPR and sectoral rules like HIPAA, PCI DSS.

Pilot AI models: start with lower-risk use cases (spam, obvious hate), measure performance, open feedback channels with users.

Scale hybrid workflows: expand to misinformation and deepfakes, with strong human oversight and clear appeals.

Invest in governance: create cross-functional councils (policy, legal, infra, data) and engage partners like Mak It Solutions for architecture, analytics and implementation support.

Key Takeaways

AI content moderation is a force multiplier, not a silver bullet: it needs human review, strong governance and clear policies to work.

Misinformation and deepfakes require integrity tooling plus authenticity standards (C2PA, Article 50 labelling), not just text filters.

Toxicity and hate speech models must be audited for bias and tuned to cultural and legal contexts in the US, UK, Germany and wider EU.

Compliance is now central: DSA, the UK Online Safety Act and the EU AI Act expect evidence of systemic risk management, transparency and audits.

Tool choice matters: evaluate cloud and specialist vendors on coverage, language support, compliance, latency and governance features.

A production-ready hybrid AI–human workflow should be built as part of your broader cloud, data and BI strategy, not as a bolt-on.

Concluding Remarks

AI content moderation has moved from “nice to have” to “critical infrastructure” for any platform operating in the US, UK or EU. The winners won’t be those with the loudest “AI safety” marketing; they’ll be the teams that combine robust models, careful policy design, transparent reporting and respectful user experiences.

If you’re planning your next-generation trust and safety stack, you don’t need to do it alone. Mak It Solutions works with organisations across the USA, UK, Germany and wider Europe on secure cloud architecture, analytics and product development that align with modern online safety regulation. Share your current moderation challenges, and the team can help you scope a hybrid AI–human workflow, from technical design to dashboards and compliance-ready reporting.

If you’re ready to turn AI content moderation from a reactive patchwork into a strategic advantage, this is the moment to get expert support. The Mak It Solutions team can help you map your risks, select the right tools, and design a production-ready hybrid workflow tailored to US, UK and EU requirements.

Book a short discovery call via the contact page to discuss your platform, or explore the services to see how web, cloud and analytics capabilities fit into a modern trust and safety roadmap.

FAQs

Q: How accurate is AI content moderation compared to human reviewers in 2026?

A: Accuracy depends on the harm type, language and training data, but for clear-cut spam, nudity or explicit hate speech, modern models can match or even exceed average human consistency. For nuanced political misinformation, satire or coded harassment, humans still outperform AI. Many large platforms report that automated systems now generate the first flag for well over 90% of removed content, with human reviewers validating a smaller subset of borderline cases. The most reliable setups use AI for triage and humans for context and appeals.

Q: What data do AI content moderation systems need to train safely under GDPR / UK-GDPR?

A: Training data typically includes examples of policy-violating and policy-compliant content: text snippets, images, short videos and metadata such as language or country. Under GDPR / UK-GDPR, you must have a lawful basis (often legitimate interests), minimise personal data, and avoid unnecessary sensitive data in training sets. Pseudonymisation, strict access controls, regional data residency (e.g., in the EEA or UK) and clear retention limits are essential. Platforms should also document data sources, consent flows and DPIAs, and be ready to explain this to DPAs or the ICO if asked.
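
As one illustration of pseudonymisation, user identifiers can be replaced with a keyed hash before records enter a training set. The sketch below uses Python's standard `hmac` and `hashlib` modules; the function name and the key handling are assumptions, and pseudonymised data still counts as personal data under GDPR, so the other safeguards continue to apply.

```python
import hashlib
import hmac


def pseudonymise_user_id(user_id: str, secret_key: bytes) -> str:
    """Replace a user ID with a keyed SHA-256 hash (HMAC).

    The same ID and key always give the same pseudonym, so records can still
    be grouped per user, but the mapping cannot be rebuilt without the key.
    """
    digest = hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()
```

The key should be stored separately from the training data (for example in a secrets manager) and rotated according to the platform's own policy.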

Q: How can smaller platforms or startups afford enterprise-grade AI content moderation tools?

A: Smaller platforms don’t need to start with a full enterprise stack. Many cloud providers offer pay-as-you-go safety APIs with generous free tiers, which cover basic toxicity and NSFW detection. Early-stage teams can combine these with lightweight manual review tools and gradually add specialist vendors for harder use cases (elections, deepfakes, financial scams) as they grow. Smart scoping (focusing first on the riskiest surfaces and user cohorts) plus efficient internal workflows often matters more than buying the most expensive toolset on day one.

Q: Which EU languages are hardest for AI hate speech detection, and how can teams close those gaps?

A: Languages and dialects with fewer high-quality labelled datasets, for example some Central and Eastern European languages or minority dialects, are typically hardest for AI hate speech detection. Models trained mostly on English or a few big EU languages can miss local slurs or over-flag reclaimed language. Teams can close gaps by working with local NGOs and linguists, commissioning targeted annotation projects, and running disparity analyses across languages. Choosing vendors that publicise their EU language coverage and partner with European civil society groups is also a strong signal.

Q: How long should platforms keep moderation logs for compliance and user appeals?

A: Retention should balance regulatory expectations, user privacy and operational needs. Many platforms keep detailed moderation logs (including model scores and reviewer notes) for between 6 and 24 months to support user appeals, audits and investigations. Under GDPR / UK-GDPR and sectoral rules (e.g., financial or health regulators), you’ll need a documented retention schedule, clear purposes for each log type, and secure deletion processes. Some teams keep aggregated or pseudonymised statistics longer for trend analysis while deleting raw personal data after a shorter window.