Digital Content Provenance: Standards, Law and Tools

Digital content provenance is the verifiable record of how digital media was created, edited and published, using cryptographic signatures and metadata so others can check whether it’s authentic and unchanged. In 2026, US, UK and EU teams combine open standards like C2PA, AI-generated content labelling rules and verification tools to reduce deepfake risk and restore trust in digital content.

By 2026, leaders in the United States, United Kingdom, Germany and the wider European Union have reached the same conclusion: you can’t scale digital products, media or AI without a way to prove which content is real. Deepfakes, AI voice cloning and one-click image generators have overwhelmed people’s ability to “just spot fakes” by eye. Recent surveys suggest around three-quarters of people worry that deepfakes will fuel misinformation, and most don’t trust themselves to reliably detect them.

At the same time, AI copilots are now embedded in office suites, design tools, CRMs and developer workflows. They generate emails to customers, investor decks, UI copy, and even medical or financial explainer content. When almost every workflow can generate synthetic text, images or video, “we wrote this by hand, trust us” is no longer a credible assurance.

That is where digital content provenance comes in. Done well, provenance gives you a portable, cryptographically verifiable trail for each asset: who created it, which tools were used, which edits were applied and whether the file has been tampered with since. For US, UK and EU organisations, it’s quickly becoming both a risk control and a compliance requirement, not just a “nice-to-have.”

From deepfakes to AI copilots: why “trust in content” just broke

Trust in digital content has eroded for three overlapping reasons:

Deepfakes and cheap manipulation.

Anyone can now create realistic synthetic video, audio or images of public figures and ordinary employees with consumer tools. Election deepfake incidents and voice-fraud scams have already hit regulators’ agendas in the US and EU.

Explosion of AI-generated content.

Studies suggest that a majority of new web pages and social posts in 2025 contained detectable AI-generated text.

Metadata is fragile.

Even when creators add captions or labels, simple actions like screenshotting, recompressing or re-uploading often strip that information away.

Once your audience assumes “this might be fake,” your brand, newsroom or public institution is spending trust on every interaction. Deepfake detection tools help, but they are probabilistic and often hard to explain to courts, regulators or the public.

What we mean by digital content provenance

Digital content provenance is the verifiable record of how a piece of digital media was created, edited and published. In practice, that means combining:

Cryptographic content authenticity proofs (signatures, certificates, key infrastructure)

Provenance metadata and Content Credentials describing capture, tools and edits

Verification tools that allow downstream users to check whether an asset is genuine and unchanged

Unlike a simple “AI-generated” label, provenance is designed to survive being copied across platforms and to be machine-verifiable, not just visually reassuring.

Who cares most: legal, security, newsroom and product leaders

Provenance is no longer just a topic for R&D or standards bodies. In 2026, the most active internal champions are:

Legal and compliance: managing EU AI Act and Digital Services Act (DSA) risk, state-level deepfake rules in the US and sector obligations like HIPAA/PCI DSS disclosures.

Security and fraud teams: treating deepfake scams and synthetic brand impersonation as part of cybercrime exposure, which is projected to cost businesses trillions globally.

Newsrooms and marketing: especially in New York City, London and Berlin, where reputational damage from one viral fake can be existential.

Product and platform leaders: building provenance into CMS, DAM, mobile apps and verification portals so that users don’t have to be cryptography experts to trust what they see.

What Is Digital Content Provenance?

Digital content provenance is the verifiable record of how a piece of digital media was created, edited and published, using cryptographic signatures and metadata so anyone down the chain can check whether it’s authentic and unchanged. In 2026, serious teams treat provenance as digital media provenance infrastructure that travels with assets across tools, clouds and platforms, not just a label in one app.

How provenance proves authenticity vs simple content integrity checks

Integrity and authenticity are related but different.

Integrity answers: Has this file been changed?

Authenticity answers: Who created or approved this under which identity or key?

Traditional integrity checks (hash-based checksums, basic digital signatures) can detect tampering, but they don’t carry rich context about capture devices, AI models or edit history.

Provenance standards like those from the Coalition for Content Provenance and Authenticity (C2PA) use signed manifests that:

Bind content hashes to structured metadata (creator, device, software, AI model)

Chain edits over time, producing a tamper-evident log of transformations

Can be verified by anyone with the relevant public keys or certificates.

That means your legal team can show who signed a given news photo and which newsroom system exported it, which is a much stronger proof than “our detector rated it 93% likely real.”
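To make the integrity versus authenticity distinction concrete, here is a minimal sketch using only the Python standard library. It is not the C2PA manifest format: the field names, the demo key and the helper functions are all hypothetical, and HMAC with a shared secret stands in for the certificate-based asymmetric signatures a real deployment would use.

```python
import hashlib
import hmac
import json

# Hypothetical demo key. A real system would sign with an asymmetric key
# held in a KMS/HSM, not a shared secret embedded in source code.
DEMO_SIGNING_KEY = b"demo-key-not-for-production"


def content_hash(data: bytes) -> str:
    """Integrity: the SHA-256 digest changes if any byte of the asset changes."""
    return hashlib.sha256(data).hexdigest()


def sign_manifest(data: bytes, metadata: dict, key: bytes) -> dict:
    """Bind the content hash to descriptive metadata and sign both together."""
    claim = {"content_sha256": content_hash(data), "metadata": metadata}
    payload = json.dumps(claim, sort_keys=True).encode("utf-8")
    signature = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify_manifest(data: bytes, manifest: dict, key: bytes) -> bool:
    """Authenticity plus integrity: signature must verify and hash must match."""
    payload = json.dumps(manifest["claim"], sort_keys=True).encode("utf-8")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    signature_ok = hmac.compare_digest(expected, manifest["signature"])
    hash_ok = manifest["claim"]["content_sha256"] == content_hash(data)
    return signature_ok and hash_ok


photo = b"raw image bytes"
manifest = sign_manifest(
    photo,
    {"creator": "Example Newsroom", "tool": "ExampleEditor"},
    DEMO_SIGNING_KEY,
)
print(verify_manifest(photo, manifest, DEMO_SIGNING_KEY))
print(verify_manifest(photo + b"!", manifest, DEMO_SIGNING_KEY))
```

A bare checksum would only catch the modified bytes in the second call. Signing the hash together with the metadata means a changed creator or tool field also fails verification.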

Digital provenance vs digital watermarking and simple labels

Teams often confuse digital watermarking and digital content provenance.

Watermarking embeds a signal inside the pixels or audio that can be detected later.

Visible labels and platform badges (e.g. “AI-generated” icons) live in the UI of specific platforms.

Provenance, by contrast, is:

Portable across platforms: the signed manifest and metadata can travel with the asset file itself

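Tamper-evident: cryptographic proofs cover the underlying encoded asset or a defined region, so any change after signing shows up as a failed check. An embedded manifest can still be stripped by recompression or re-upload, which is one reason to pair it with watermarking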

Machine-readable for AI-generated content labelling and watermarking rules emerging under the EU AI Act and industry codes of practice.

In practice, high-trust systems combine all three: watermarking, UI labels and verifiable provenance manifests.

Provenance for AI-generated images, video and text in 2026

Modern generative tools from OpenAI, Google DeepMind, Adobe and others can now attach provenance or Content Credentials directly at export.

Key trends in 2026:

Content Credentials as the default “AI nutrition label.” They bundle how content was captured or generated, which tools were used and whether AI was involved.

Platform-level adoption. Content Credentials and C2PA are being integrated into cameras, cloud CDNs and web platforms; for example, Cloudflare’s “Preserve Content Credentials” feature for hosted images.

Regulatory expectations. EU guidance increasingly expects machine-readable AI content labelling in addition to visible notices, especially for deepfakes and public-interest information.

For US, UK and EU teams, the upshot is simple: by 2026, regulators assume you can technically label AI outputs. The question is whether you actually do it in a robust, auditable way.

Deepfake Detection vs Content Provenance

Deepfake detection tries to spot manipulated media after the fact, while digital content provenance proves the origin and edit history of media from the moment it’s created. In 2026, serious organisations need both to deliver deepfake risk management and content trust at scale.

What is the difference between deepfake detection and provenance?

Think of deepfake detection as forensics and provenance as paperwork plus cryptography:

Detection uses model-based classifiers to infer whether an image, video or audio clip is synthetic or manipulated. It’s probabilistic and can produce false positives or false negatives.

Provenance relies on cryptographic content authenticity proofs and signed manifests that record each capture and edit step. If the chain is intact and signatures verify, you don’t need to guess.

Most high-risk workflows now run detection and provenance checks: if provenance is missing or broken, they fall back to model-based risk scoring and human review.
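The fallback logic described above can be sketched as a small triage function. This is an illustration only: the `Asset` fields, the `Decision` values and both thresholds are hypothetical and would need tuning against your own detector and risk appetite.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Decision(Enum):
    PUBLISH_VERIFIED = "publish_verified"
    PUBLISH_UNVERIFIED = "publish_unverified"
    HUMAN_REVIEW = "human_review"
    REJECT = "reject"


@dataclass
class Asset:
    asset_id: str
    # True = manifest verified, False = manifest present but broken, None = missing
    provenance_valid: Optional[bool]
    # Detector output: 0.0 (likely real) to 1.0 (likely synthetic)
    detector_fake_score: float


def triage(asset: Asset, review_at: float = 0.3, reject_at: float = 0.9) -> Decision:
    """Prefer provenance; fall back to detector score and human review."""
    if asset.provenance_valid is True:
        return Decision.PUBLISH_VERIFIED
    # Provenance is missing or broken, so fall back to probabilistic scoring.
    if asset.detector_fake_score >= reject_at:
        return Decision.REJECT
    # A broken manifest is itself a warning sign, whatever the detector says.
    if asset.provenance_valid is False or asset.detector_fake_score >= review_at:
        return Decision.HUMAN_REVIEW
    return Decision.PUBLISH_UNVERIFIED


print(triage(Asset("clip-001", None, 0.55)))
```

One design choice worth noting: a verified manifest short-circuits the detector entirely, so the probabilistic score only matters when the stronger evidence is unavailable.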

When deepfake detection is not enough for legal and compliance teams

For legal, audit and regulatory scrutiny, “our model said 92% fake” is rarely sufficient:

Election ads.

State-level deepfake laws in the US already target misleading election communications; being able to show signed provenance for campaign assets will often carry more weight than a detector output.

Financial communications.

Banks under BaFin or ECB supervision need clear audit trails for investor updates and market-moving statements; a signed provenance log is much easier to defend.

Healthcare information.

In regulated environments like NHS channels or US health portals, provenance helps show that critical content wasn’t altered by an unauthorised AI or third party.

Detection is still useful to triage large volumes of user-generated content, but provenance is what you lean on when the lawyers, regulators or journalists start asking hard questions.

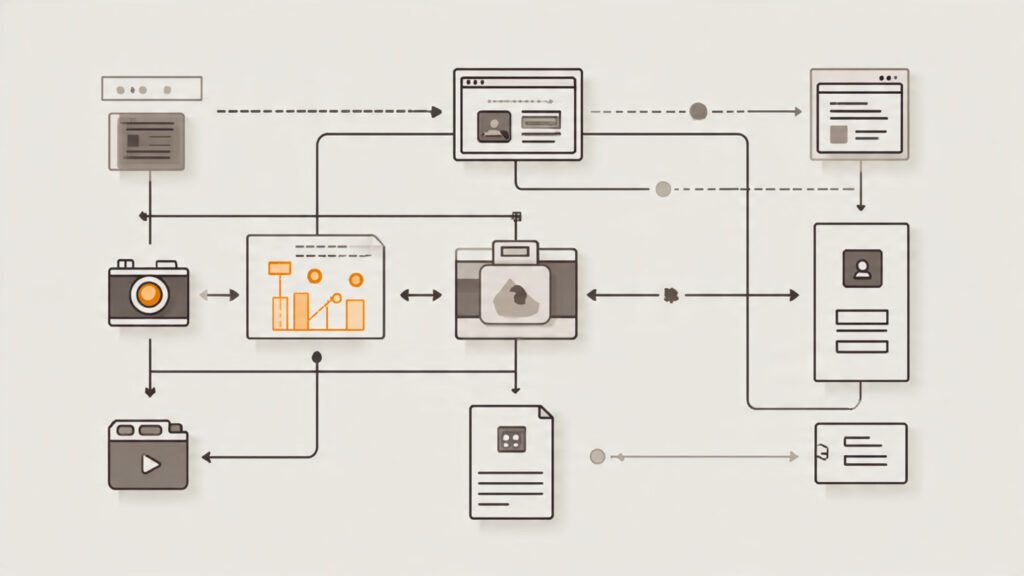

Combined architectures

A modern combined architecture for high-risk media might look like:

A newsroom in New York City using deepfake classifiers at ingest, then enforcing C2PA manifests on all approved footage before publishing to web, apps and syndication feeds.

Election campaigns in London combining watermarking, provenance manifests and public verification portals where voters can drop in a video and check whether it’s an official asset.

A streaming platform in Berlin that rejects uploads with broken provenance for “trusted creator” tiers, while still scanning all other uploads with deepfake detectors.

The pattern is the same: use detection for screening, but anchor your most important legal and reputational decisions in strong provenance.

The Standards Shaping Digital Content Provenance (C2PA, Content Credentials, Open Ecosystems)

C2PA and Content Credentials are emerging as the default way to encode verifiable provenance metadata into images, video and other media so tools from different vendors can trust the same proof.

Overview of C2PA and Content Credentials

The Coalition for Content Provenance and Authenticity (C2PA) is an industry standards body defining an open technical standard for capture-time signing, manifests and verification of digital media provenance.

The Content Authenticity Initiative (CAI) promotes Content Credentials, the user-facing implementation of the C2PA standard, which turns these ideas into a user-visible system:

A small icon or label that users can click to see “who made this, how and with what tools”

Under the hood, a structured, signed manifest attached to the asset

Major players such as Cloudflare, the BBC and The New York Times, along with camera manufacturers, platforms and cloud providers, are joining this ecosystem to avoid a fragmented set of incompatible labels.

C2PA vs other content authenticity standards and proprietary schemes

Before C2PA, many organisations tried to patch together:

X.509-style PKI signing of assets

Platform-specific authenticity badges or blue-tick schemes

Homegrown watermarking solutions

C2PA’s advantage is that it’s:

Vendor-neutral and open: any tool can read and write manifests to the shared spec

Extensible: custom assertions (e.g. “AI model = X”) fit alongside standard ones

Interoperable: a provenance assertion created in an editing tool can be verified on a social network, CDN or future AR device using the same core standard.

In the long run, this open ecosystem is more sustainable than dozens of incompatible, proprietary “verified” badges.

Open-source tools and reference implementations for provenance

If you’re designing digital content provenance infrastructure, you don’t have to start from scratch. The CAI and C2PA community publish:

Open-source SDKs and command-line tools for signing and verifying manifests

Sample integrations for CMS, DAM and CDN workflows

Test vectors and reference content to validate implementations

Developers building CMS, DAM or CDN integrations can:

Add signing to export pipelines

Build verification endpoints or browser extensions

Integrate provenance views into existing asset dashboards

This is where US, UK and EU product teams can move quickly: use open reference implementations and focus engineering effort on UX, governance and sector-specific policy.

Regulation and Compliance

In 2026, the EU AI Act, the Digital Services Act, and UK and US guidance all push platforms and brands to label AI-generated content and manage deepfake risk, making digital content provenance a key part of compliance.

EU AI Act Article 50, watermarking and provenance requirements

Article 50 of the EU AI Act sets transparency obligations for AI systems that generate or manipulate content:

Providers must ensure machine-readable marking of AI-generated or AI-manipulated media.

Deployers must disclose when they use AI to create realistic synthetic content, including deepfakes.

The EU’s draft Code of Practice on marking and labelling of AI-generated content recommends combining technical signals (watermarking, provenance metadata) with visible labels, especially for public-interest information and deepfakes.

For EU publishers and platforms, that makes provenance not just a best practice but an obvious way to show that you tried to meet the “spirit of the law”, rather than just adding a small “AI” icon somewhere.

DSA, platform liability and deepfake obligations in the EU

Under the DSA, “very large online platforms” and search engines face heightened duties to mitigate systemic risks like disinformation and deepfake-driven manipulation. Recent designations, such as WhatsApp channels crossing the DSA user threshold, show how widely these obligations will reach.

In practice, provenance helps DSA-regulated platforms to:

Flag unlabelled synthetic media in high-reach channels

Provide regulators and auditors with evidence of how official accounts sign and label their own content

Offer verification portals to citizens and journalists, especially in Brussels and other EU capitals where enforcement scrutiny is highest

US and UK regulatory patchwork: No Fakes laws, FTC, Ofcom and UK-GDPR

Outside the EU, the picture is more fragmented but pointing in the same direction.

US.

A patchwork of state-level election deepfake laws and proposed federal rules like the NO FAKES Act target unauthorised synthetic likenesses and deceptive political content. The Federal Trade Commission (FTC) is also signalling that deceptive AI content could fall under unfair or deceptive practices.

UK.

Ofcom has set out a strategic approach to AI in media and online safety, and UK Parliament research has analysed AI content labelling options, including C2PA-style approaches. UK-GDPR and sector codes already require accurate, non-misleading communications.

Germany/EU finance.

Supervisors like BaFin and the European Central Bank (ECB) expect banks and insurers to maintain trustworthy digital channels – provenance makes it easier to evidence that sensitive client communications weren’t spoofed or altered.

For compliance teams, provenance becomes a control you can point to in risk registers, audits and board briefings: “Here is how we know what’s real.”

How to Prove AI-Generated Content Is Authentic End-to-End

To prove AI-generated content is authentic, organisations combine capture-time signing, C2PA manifests, secure storage, verification APIs and user-facing indicators across their CMS, DAM and publishing stack. The trick is to do this without breaking existing editorial, marketing or product workflows.

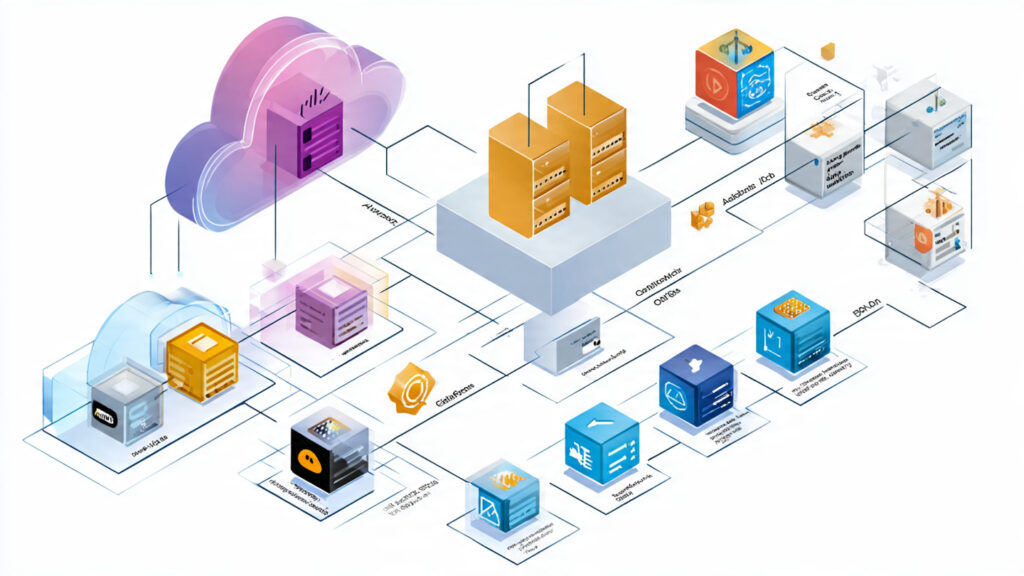

Designing a provenance architecture for CMS, DAM and media pipelines

A reference architecture for digital content provenance might look like this:

Capture and generation. Cameras, design tools and AI systems export assets with C2PA manifests attached, signed using keys managed in Amazon Web Services (AWS), Microsoft Azure or Google Cloud KMS/HSM services.

Ingestion into CMS/DAM. Your CMS or DAM stores both the asset and its provenance log, validates signatures on ingest, and rejects or flags assets with missing or broken manifests.

Editorial and product workflows. As editors crop, re-caption or translate, tools update the manifest, adding new signed steps rather than overwriting history.

Delivery via CDN/app. Your web, mobile or OTT apps expose a “Content Credentials” UI element that lets users inspect provenance, and your CDN preserves metadata instead of stripping it.

Verification APIs. Third-party fact-checkers, regulators or clients can call dedicated APIs to verify that a given asset hashes to a known provenance record.

This is what we mean by digital media provenance infrastructure: a set of services and conventions that sit underneath content workflows, rather than a one-off plugin.
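The “add new signed steps rather than overwriting history” idea from the editorial stage can be illustrated with a simple append-only chain, where each step signs the previous step’s signature. This is a sketch under stated assumptions, not the C2PA manifest structure: the step fields and helper names are hypothetical, and HMAC with a demo key stands in for KMS/HSM-backed asymmetric signing.

```python
import hashlib
import hmac
import json

DEMO_KEY = b"demo-key-not-for-production"  # hypothetical; use KMS/HSM keys in practice


def _sign(payload: dict, key: bytes) -> str:
    body = json.dumps(payload, sort_keys=True).encode("utf-8")
    return hmac.new(key, body, hashlib.sha256).hexdigest()


def append_step(chain: list, action: str, asset_bytes: bytes, key: bytes) -> list:
    """Return a new chain with one more signed step; earlier steps are untouched."""
    payload = {
        "action": action,
        "asset_sha256": hashlib.sha256(asset_bytes).hexdigest(),
        # Linking to the previous signature makes reordering or deletion detectable.
        "prev_signature": chain[-1]["signature"] if chain else "",
    }
    return chain + [{"payload": payload, "signature": _sign(payload, key)}]


def verify_chain(chain: list, final_asset: bytes, key: bytes) -> bool:
    """Check every signature, every link, and that the last step matches the asset."""
    if not chain:
        return False
    prev_signature = ""
    for step in chain:
        payload = step["payload"]
        if payload["prev_signature"] != prev_signature:
            return False
        if not hmac.compare_digest(_sign(payload, key), step["signature"]):
            return False
        prev_signature = step["signature"]
    final_hash = hashlib.sha256(final_asset).hexdigest()
    return chain[-1]["payload"]["asset_sha256"] == final_hash


original = b"captured frame"
cropped = b"cropped frame"
chain = append_step([], "capture", original, DEMO_KEY)
chain = append_step(chain, "crop", cropped, DEMO_KEY)
print(verify_chain(chain, cropped, DEMO_KEY))
```

An ingest gate in a CMS or DAM could call something like `verify_chain` and flag any asset for which it returns `False`, which corresponds to the “missing or broken manifest” case above.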

Choosing content authenticity verification tools and platforms

When you evaluate vendors and tools, look for:

C2PA and Content Credentials support rather than proprietary schemes

Cryptographic robustness (key management, hardware security modules, certificate lifecycle)

APIs and dashboarding for compliance teams (reports, exportable logs, alerts)

Certifications like SOC 2, ISO 27001 and region-specific data residency controls for US, UK and EU workloads

You’ll likely mix and match:

Secure capture tools similar to Truepic for field reporting and on-site verification

PKI-focused providers similar to DigiCert for certificates and timestamping

Custom integrations developed with partners like Mak It Solutions to weave provenance into your existing web, mobile and analytics stack.

Monitoring, reporting and audit trails for regulated sectors

For regulated sectors, provenance is only as useful as your evidence pack:

Healthcare (e.g. NHS, US health providers).

Track which patient-facing pages or campaigns use AI-generated content and link each to a provenance log entry and human review.

Finance (PCI DSS, SOC 2, BaFin/ECB).

Include provenance checks in change-management and content-release controls; for example, proving that investor updates or product disclosures were signed by specific roles and not altered in transit.

SaaS and support.

For AI support agents or automated ticket resolution, log when AI generates customer-visible responses and attach provenance proof so you can reconstruct who or what said what later.

Dashboards should make it easy to answer:

“Which high-risk journeys use AI, and how are they labelled?”

“Where do we see broken or missing provenance?”

“What percentage of our outbound assets are covered by provenance today?”
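As a rough illustration of how those three dashboard questions could be answered from an asset inventory, the sketch below computes them from a list of records. The `AssetRecord` fields, status values and journey names are hypothetical; a real report would read from your CMS, DAM or logging pipeline.

```python
from dataclasses import dataclass


@dataclass
class AssetRecord:
    asset_id: str
    journey: str            # hypothetical label, e.g. "investor-updates"
    uses_ai: bool
    provenance_status: str  # "valid", "broken" or "missing"


def coverage_report(records: list[AssetRecord]) -> dict:
    """Summarise provenance coverage, gaps, and which journeys use AI."""
    total = len(records)
    valid = sum(1 for r in records if r.provenance_status == "valid")
    return {
        # Share of outbound assets that carry a verifying manifest
        "coverage_pct": round(100 * valid / total, 1) if total else 0.0,
        # Where provenance is broken or missing
        "broken_or_missing": sorted(
            r.asset_id for r in records if r.provenance_status != "valid"
        ),
        # Which journeys involve AI-generated content
        "ai_journeys": sorted({r.journey for r in records if r.uses_ai}),
    }


records = [
    AssetRecord("a1", "investor-updates", True, "valid"),
    AssetRecord("a2", "support", True, "missing"),
    AssetRecord("a3", "news", False, "valid"),
    AssetRecord("a4", "news", False, "broken"),
]
print(coverage_report(records))
```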

GEO Playbook: US, UK and Germany/EU Provenance Strategies

United States

In the US, provenance strategies are shaped by:

Election integrity.

With more states adding election deepfake laws, media groups in New York and national campaigns are piloting provenance for official ads, debates and fact-check content.

Brand safety and fraud.

Enterprises in healthcare (HIPAA), finance (PCI DSS) and SaaS now treat AI-driven impersonation and fake support communications as part of cyber risk. Provenance helps them show auditors and insurers how they authenticate content.

Guidance, not yet one federal rule.

Draft bills like NO FAKES and sector guidance from agencies such as the FTC keep provenance on board agendas, even before a single federal standard exists.

United Kingdom

In the UK, provenance is tied to public trust and broadcast standards.

London-based publishers and broadcasters are testing C2PA-aligned workflows to assure viewers that flagship news and documentary content is genuine.

NHS communications teams are developing AI operating frameworks that emphasise transparency, safe use of AI tools and clear human oversight; provenance fits naturally into this.

UK-GDPR expectations around accuracy and fairness in public communications make provenance a helpful supporting control, even before explicit deepfake labelling rules are finalised.

Germany and wider EU

In Germany and the wider EU, the combination of AI Act, DSA and DSGVO (GDPR) is pushing organisations towards provenance as part of their standard stack.

Public broadcasters in Berlin and Frankfurt are experimenting with provenance on news footage and public-interest explainers.

Banks and insurers under BaFin and ECB supervision see provenance as a way to defend against spoofed communications and sophisticated fraud.

Brussels, as the regulatory hub, is driving early adoption through codes of practice and enforcement expectations around deepfake labelling and transparency.

Building an Internal Business Case for Digital Content Provenance

Quantifying risk: deepfake exposure, misinformation and brand damage

To build a business case, translate abstract deepfake headlines into numbers your CFO understands:

Estimate exposure based on sector incidents (election deepfakes, CEO voice-fraud, fake brand ads).

Map potential impacts: regulatory fines, incident response costs, lost revenue, permanent trust damage.

Use realistic benchmarks: surveys show roughly two-thirds of people are worried about deepfake-driven misinformation. If your brand or institution becomes associated with “fake” content, your trust deficit grows fast.

From there, it’s easier to show that a modest investment in digital content provenance infrastructure is a sensible insurance premium.

Stakeholder map: who to involve across legal, security, product and editorial

Successful programmes involve:

Legal/compliance: interpret AI Act/DSA/NO FAKES/UK-GDPR/DSGVO requirements

Security and fraud: integrate provenance into identity/access management and incident response

Product/engineering: embed provenance into CMS, DAM, mobile and API layers

Editorial/marketing: design content workflows that preserve, not strip, provenance metadata

In US, UK and EU organisations, it’s often helpful to appoint a content trust lead who speaks both compliance and product language and can coordinate across teams.

Roadmap: 90-day pilot to global rollout

Treat digital content provenance as a staged rollout rather than a big-bang project.

Days 0–30 – Inventory and risk map.

Identify critical journeys (election content, investor reports, health information, customer support) and map current AI usage and labelling practices.

Days 30–60 – Pilot in one or two workflows.

For example, sign and verify all news images in one desk, or all investor communications in one region, using C2PA-aligned tools. Track impact on speed, UX and compliance.

Days 60–90 – Measure and decide.

Review metrics (coverage, incidents, editor feedback), refine your digital content provenance architecture and define a roadmap for phased expansion across products and geographies.

Beyond 90 days – Industrialise.

Integrate provenance into your change-management, third-party risk and vendor-selection processes so it becomes part of “how we ship content,” not a one-off experiment.

Key takeaways

Digital content provenance turns authenticity from a feeling into a verifiable, portable proof.

Standards like C2PA and Content Credentials are converging into the default language for provenance, with strong momentum across tools and platforms.

Regulation (EU AI Act, DSA, US deepfake laws, UK guidance) is making provenance an important compliance tool as well as a security control.

The most effective programmes combine detection, watermarking, provenance manifests and clear human oversight.

You don’t have to replace your stack; you can incrementally upgrade CMS, DAM, CDN and app workflows to carry and expose provenance.

If you’re responsible for legal, security, product or editorial strategy in the US, UK or EU and you don’t yet have a clear digital content provenance roadmap, this is your window to act before regulations and incidents force your hand. The team at Mak It Solutions can help you assess your current stack, design a C2PA-aligned provenance architecture and run a 90-day pilot without derailing ongoing delivery. Explore our web, mobile and analytics services on makitsol.com, then book a discovery call to scope a provenance readiness workshop tailored to your sector and region.

FAQs

Q : How does digital content provenance fit with existing information security and PKI investments?

A : Digital content provenance builds on, rather than replaces, your existing PKI and information security stack. In most architectures you reuse certificate authorities, HSMs/KMS, key rotation policies and logging pipelines to sign provenance manifests and validate them at ingest and delivery. That means your security team stays in control of keys and policies, while product and editorial teams focus on how provenance shows up in tools and user interfaces.

Q : Can we retrofit provenance onto legacy content libraries, or does it only work for new AI-generated assets?

A : You can absolutely add provenance to legacy content, but you need to be honest about what you can and can’t prove. For older assets, you typically sign a manifest that says “as of this date, this file was in our archive and we vouch for it,” often backed by human review and existing logs. For new AI-generated content, you should aim for capture-time signing so you can show a continuous chain from creation through to publication and reuse.

Q : What are the biggest implementation pitfalls when rolling out C2PA and Content Credentials in large newsrooms?

A : Common pitfalls include treating provenance as a “bolt-on” after the CMS rather than as part of core workflows, failing to train editors and freelancers on how not to strip metadata, and ignoring performance or UX impacts of verification. Large newsrooms also underestimate the governance work: you need clear policies for which desks or programmes must use provenance, how exceptions are handled and how disputes or challenges are escalated.

Q : How should US, UK and EU companies train staff and creators to avoid stripping or breaking provenance metadata?

A : Training should be practical and tool-specific. Show people which export options preserve C2PA/Content Credentials, which social platforms strip or retain them, and how to check that assets still verify after common edits. Include simple playbooks: “If you need to crop, do it in this tool, not that one.” For freelancers and agencies, bake provenance expectations into contracts and deliverable checklists so it becomes part of standard delivery, not a special favour.

Q : What metrics should we track to measure ROI from digital content provenance (risk reduction, trust, engagement)?

A : Useful KPIs include: percentage of high-risk assets shipped with valid provenance; number of incidents where provenance helped resolve a dispute or takedown quickly; reduction in time spent investigating suspected fakes; and uplift in trust or satisfaction scores for key channels. Over time you can also track regulatory findings, insurance terms and legal costs to quantify how provenance contributes to a lower overall risk profile for your brand or institution.