Enterprise AI Agents for US, UK & EU: A Practical Guide

Enterprise AI agents are autonomous software systems that use foundation models, tools and enterprise data to plan and execute multi-step business tasks across service, operations and knowledge work. In 2026, enterprises that win with these agents design a secure architecture, add a dedicated governance framework, and measure ROI against clear productivity, quality, risk and customer experience metrics across the US, UK and EU.

Introduction

By 2026, enterprise AI has shifted from experiments to always-on answer engines and AI Overviews sitting on top of everything your customers and employees search for. Google’s own documentation makes it clear that AI-generated answers now depend heavily on how well your content and systems are structured for these features.

At the same time, surveys show that well over two-thirds of organisations now use AI in at least one business function, with a growing share experimenting with enterprise AI agents that can take actions, not just generate text. A recent Deloitte analysis suggests AI agent usage could rise from roughly a quarter of enterprises today to around three-quarters within two years, yet only about one in five report robust safeguards.

That governance gap is the focus of this guide. We’ll define enterprise AI agents, walk through architecture patterns, then spend most of our time on governance, risk and ROI, especially for enterprises in the United States, United Kingdom and Germany (and the wider EU). The short version: governance is the difference between clever pilots and scalable, regulator-ready impact.

Enterprise AI Agents

What is an enterprise AI agent in 2026 and how is it different from a chatbot?

Enterprise AI agents are software entities that use foundation models, tools and organisational data to autonomously plan and execute multi-step tasks in business workflows. Unlike traditional chatbots, which mostly answer questions inside a single session, enterprise AI agents can call APIs, trigger workflows, collaborate with other agents and maintain goals over time. They’re built to sit inside systems of record (service, operations, risk, finance) rather than just inside a chat window.

Core capabilities of enterprise AI agents

Modern enterprise AI agents typically combine:

Planning and tool calling across CRM, ITSM, ERP and data warehouses, chaining multiple steps together.

Multi-agent collaboration, where specialist agents handle research, routing, approvals or QA before anything reaches production.

Observability and feedback loops with logs, traces and human feedback so teams can debug behaviours and continuously tune prompts, tools and policies.

These capabilities are what turn “agentic AI systems” from fancy chat experiences into autonomous AI workflows embedded in your operating model.

Priority enterprise use cases

In 2026, three clusters dominate enterprise AI agent roadmaps.

Service.

Autonomous L1/L2 support, intelligent triage and knowledge search, including multi-lingual support for customers in places like New York City, London and Berlin. Well-implemented agents routinely handle an estimated 50–70% of routine tickets before humans get involved.

Operations.

Incident response, IT automation and supply-chain coordination, especially in complex estates where ITSM tools, CI/CD, monitoring and collaboration platforms already exist.

Knowledge work.

Drafting policies, RFPs, risk memos and board papers with human-in-the-loop review, plus agents driven by an enterprise knowledge graph that can reason over policies, contracts and controls.

Platforms like ServiceNow, Kore.ai, Sana and Aisera increasingly ship these patterns as out-of-the-box workflows for IT, HR and customer service.

How enterprise AI agents fit into existing operating models

The most successful enterprises treat AI agents as “co-pilots + co-workers”, not magic black boxes. Humans still own decisions and outcomes; agents own repetitive execution, orchestration and summarisation.

In practice, that means:

Embedding agents where work already happens (Salesforce, ServiceNow, Jira, SAP, M365), not forcing users into a separate “AI portal”.

Making accountability explicit: who signs off on payments, approvals or customer decisions; when “recommend-only” vs “auto-approve” is allowed.

Setting up a dedicated governance and monitoring function (something we’ll dig into later) that owns the agent taxonomy, policies and lifecycle.

Enterprise AI Agent Architecture & Platforms

What architecture do enterprises need to deploy secure AI agents across apps, data and workflows?

A secure enterprise AI agent architecture layers identity, policy and tools on top of your existing data estate. At minimum you need:

A policy-aware orchestration layer that handles planning, routing and tool calling.

Retrieval-augmented generation (RAG) over your content, tickets and logs.

Tight IAM integration, so each agent run gets the minimum permissions it needs and nothing more.

In 2026, most firms deploy agents close to systems of record, with zero-trust networking, per-task permissions and data minimisation that align with existing IAM and audit practices instead of bypassing them.
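To make the per-task permission idea concrete, here is a minimal Python sketch. The task types, scope strings and the `AgentRun`, `start_run` and `authorize` names are all hypothetical; in a real deployment the scopes would be issued by your IAM system rather than held in a dictionary.

```python
from dataclasses import dataclass

# Hypothetical mapping from task type to the minimum scopes that task needs.
TASK_SCOPES = {
    "ticket_triage": frozenset({"itsm:read", "itsm:comment"}),
    "refund_request": frozenset({"crm:read", "payments:propose"}),
}


@dataclass(frozen=True)
class AgentRun:
    task_type: str
    scopes: frozenset


def start_run(task_type: str) -> AgentRun:
    """Create a run that carries only the scopes mapped to its task type."""
    if task_type not in TASK_SCOPES:
        raise ValueError(f"No scope mapping for task type: {task_type}")
    return AgentRun(task_type, TASK_SCOPES[task_type])


def authorize(run: AgentRun, required_scope: str) -> bool:
    """Deny by default: a tool call is allowed only if its scope was granted."""
    return required_scope in run.scopes


run = start_run("ticket_triage")
print(authorize(run, "itsm:read"))         # True
print(authorize(run, "payments:propose"))  # False
```

The design choice worth noting is that permissions attach to the run, not to the agent, so a triage run can never reach a payment tool even if the same agent handles refunds elsewhere.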

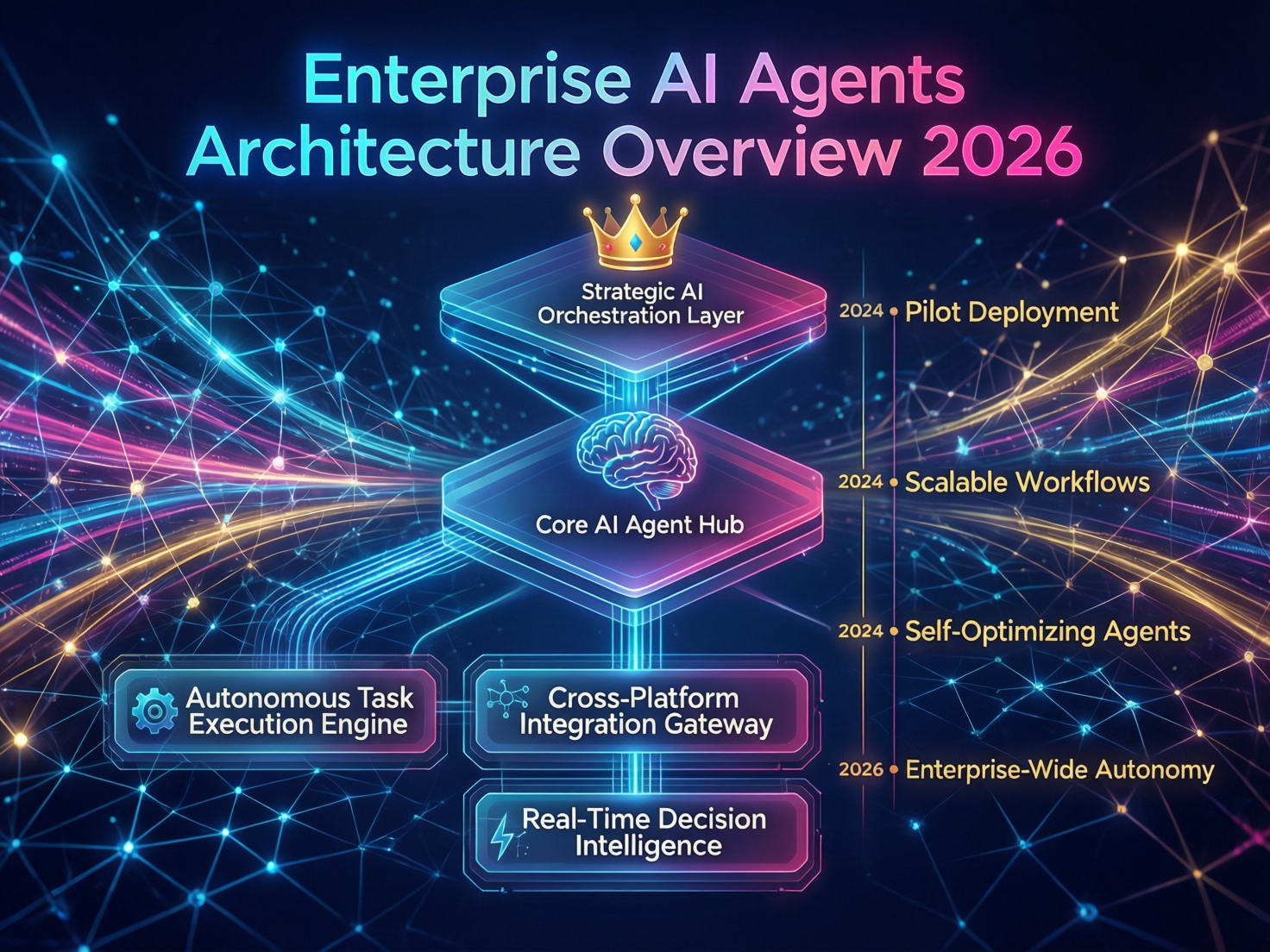

Reference architecture for enterprise AI agents

A pragmatic reference architecture looks like this.

Ingestion + RAG.

Index documents, tickets, logs, CRM/ERP data and wiki content, including region-specific stores for the EU or UK.

Orchestrator.

A central “brain” that selects the right tools, plans steps, coordinates multiple agents and decides when to escalate to a human.

Connectors.

Deep connectors into ITSM, CRM, HRIS, CI/CD, monitoring tools and messaging platforms like Teams or Slack.

Safety layer.

Policy checks, runtime guardrails, red-teaming harnesses and kill switches for sensitive workflows (payments, trading, clinical decisions).

On cloud, this often maps to agent frameworks from Amazon Web Services, Microsoft Azure or Google Cloud, plus your chosen observability stack.
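The kill switch in the safety layer can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a production design: `KillSwitch`, `WorkflowPaused`, `guarded_call` and `issue_refund` are hypothetical names, and the in-memory set stands in for whatever shared, durable state your platform provides.

```python
class WorkflowPaused(RuntimeError):
    """Raised when a tool call is attempted on a paused workflow."""


class KillSwitch:
    """In-memory pause registry; a real deployment would use shared, durable state."""

    def __init__(self) -> None:
        self._paused = set()

    def pause(self, workflow: str) -> None:
        self._paused.add(workflow)

    def resume(self, workflow: str) -> None:
        self._paused.discard(workflow)

    def is_paused(self, workflow: str) -> bool:
        return workflow in self._paused


def guarded_call(switch, workflow, tool, *args, **kwargs):
    """Run the tool only if the workflow has not been paused."""
    if switch.is_paused(workflow):
        raise WorkflowPaused(f"Workflow '{workflow}' is paused")
    return tool(*args, **kwargs)


def issue_refund(amount):
    # Stand-in for a real payment tool.
    return f"refund of {amount} queued"


switch = KillSwitch()
print(guarded_call(switch, "payments", issue_refund, 25))  # refund of 25 queued
switch.pause("payments")
# Any further guarded_call for "payments" now raises WorkflowPaused.
```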

Multi-agent AI architecture for complex enterprises

Larger enterprises increasingly prefer multi-agent patterns.

Specialist roles.

Such as Advisor (analysis + recommendation), Operator (tool execution), Reviewer (policy + compliance checks) and Auditor (explainability + evidence).

Shared vs task-scoped memory.

Shared memory for reusable knowledge, task-scoped memory for sensitive context so you don’t leak data between customers or geos.

Escalation ladders.

Agent → senior agent → human expert → approvals, with thresholds based on value and risk.

These patterns are particularly powerful in regulated sectors in Frankfurt or London where BaFin and PRA/FCA expect clear segregation of duties and auditable workflows.
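The escalation ladder described above can be expressed as a small routing function. The sketch below is illustrative only: the `Handler` names, the EUR value limits and the 0 to 1 risk score are assumptions, and real thresholds would come from your risk appetite statements.

```python
from enum import Enum


class Handler(Enum):
    AGENT = "agent"
    SENIOR_AGENT = "senior_agent"
    HUMAN_EXPERT = "human_expert"
    APPROVALS = "approvals"


# Hypothetical limits per rung: (handler, max value in EUR, max risk score 0-1).
LADDER = [
    (Handler.AGENT, 500, 0.2),
    (Handler.SENIOR_AGENT, 5_000, 0.5),
    (Handler.HUMAN_EXPERT, 50_000, 0.8),
]


def route(value_eur: float, risk_score: float) -> Handler:
    """Return the lowest rung whose value and risk limits both cover the task."""
    for handler, max_value, max_risk in LADDER:
        if value_eur <= max_value and risk_score <= max_risk:
            return handler
    # Anything above every limit goes to formal approvals.
    return Handler.APPROVALS


print(route(100, 0.1).value)        # agent
print(route(100, 0.6).value)        # human_expert
print(route(1_000_000, 0.1).value)  # approvals
```

Note that a low-value task with a high risk score still escalates, because both limits must be satisfied at a given rung.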

AI agent orchestration platforms and vendor landscape

CIOs now face three broad choices.

In-house frameworks (open-source orchestration, custom tools, your own UI). Maximum control, maximum engineering burden.

Copilot-style platforms from suites like Microsoft 365 or Salesforce. Excellent for knowledge work and collaboration, less flexible for back-end workflows.

Agent orchestration platforms focused on complex workflows, observability and governance.

When evaluating, prioritise:

Security certifications (SOC 2, ISO 27001), auditability and governance depth.

Integration quality with ITSM, CRM, HR and DevOps tools you already use.

EU data residency and sovereign cloud options for EU and UK workloads.

Support and roadmap for agent safety, policy management and multi-region rollouts.

Use open-source frameworks as reference implementations and for low-risk pilots; rely on battle-tested platforms for mission-critical workflows.

GEO-aware architectures (US, UK, Germany/EU)

GEO-aware architectures boil down to three design choices.

Data residency

Route European data to EU regions or sovereign clouds, ensure logs and vector indexes also stay local, and segregate US vs EU traffic at the network and identity layers.

Sector constraints

Healthcare (HIPAA, NHS guidance), finance (SOX, banking supervision, PCI DSS), and public sector workloads each need their own “rails” for tool access, logging and approvals.

Evidence by design

Store regulator-friendly traces (who did what, using which model and which data) for every high-risk task.

If you’re already running multi-cloud (for example, AWS in the United States and Azure or EU sovereign clouds in Germany), reuse those patterns for AI agents rather than building a parallel stack.
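As a rough illustration of the data residency choice, the Python sketch below resolves the model endpoint, vector index and log sink from the customer's country, so that all three stay in the same region. The region stacks, endpoint names and country mapping are hypothetical placeholders, not real service identifiers.

```python
# Hypothetical region stacks; real endpoint and store names depend on your cloud setup.
REGION_STACKS = {
    "EU": {"model_endpoint": "eu-model", "vector_index": "eu-index", "log_sink": "eu-logs"},
    "UK": {"model_endpoint": "uk-model", "vector_index": "uk-index", "log_sink": "uk-logs"},
    "US": {"model_endpoint": "us-model", "vector_index": "us-index", "log_sink": "us-logs"},
}

# Illustrative subset of country codes only.
COUNTRY_TO_REGION = {"DE": "EU", "FR": "EU", "GB": "UK", "US": "US"}


def resolve_stack(country_code: str) -> dict:
    """Return the regional stack for a request, failing closed on unknown countries."""
    region = COUNTRY_TO_REGION.get(country_code.upper())
    if region is None:
        raise ValueError(f"No residency rule for country: {country_code}")
    return {"region": region, **REGION_STACKS[region]}


stack = resolve_stack("de")
print(stack["region"], stack["log_sink"])  # EU eu-logs
```

Failing closed on unmapped countries is deliberate: silently defaulting to one region is how logs and indexes end up in the wrong jurisdiction.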

Risk, Governance & Compliance for Enterprise AI Agents

Why are AI agents increasing model risk and operational risk for large organisations?

AI agents amplify model risk because they don’t just generate text; they take actions: updating customer records, triggering payments, approving discounts or escalating incidents. A single misaligned policy or hallucinated field can cascade into thousands of downstream errors.

They also introduce opaque chains of prompts, tools and sub-agents, which makes root-cause analysis harder when something goes wrong. As Deloitte’s AI Institute points out, governing agents is tougher precisely because of this autonomy and complexity, and most organisations’ safeguards are lagging their adoption curves.

Why do CIOs and CROs need a dedicated AI agent governance framework, not just generic AI policies?

Generic AI policies tend to focus on data privacy, model selection and acceptable use. Agent governance must go further.

Define levels of autonomy (read-only, recommend, auto-execute with approvals).

Control tool access and segregation of duties (who can move money, update KYC data, change risk limits).

Require human sign-off for higher-risk actions, with clear thresholds by business line and geography.

Regulators in the US, UK and EU are increasingly explicit that once AI systems execute core processes (claims handling, lending decisions, trading, clinical triage), they expect specific controls, not generic “use AI responsibly” statements.

Components of an AI agent governance framework

A practical governance framework typically includes:

Taxonomy. Define classes of agents by business area, risk level and autonomy (e.g., Tier 0 read-only, Tier 1 recommend, Tier 2 execute with approvals).

Policy guardrails. What data each class may read; what they can write, approve or only recommend; which sectors (e.g., NHS data) are out of scope.

Controls. Four-eye checks, counterparty verification, limits, exception workflows and playbooks for pausing or rolling back agents.

Continuous monitoring. Drift, hallucination rates, policy violations, incident patterns feeding back into training, prompts and tools.
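The taxonomy and guardrail components above can be sketched as a simple policy check. The tier names follow the Tier 0 to Tier 2 example in the taxonomy; the action names and the `check_action` function are assumptions made for illustration.

```python
from enum import IntEnum


class Tier(IntEnum):
    READ_ONLY = 0
    RECOMMEND = 1
    EXECUTE_WITH_APPROVAL = 2


# Hypothetical minimum tier required for each kind of action.
REQUIRED_TIER = {
    "read": Tier.READ_ONLY,
    "recommend": Tier.RECOMMEND,
    "execute": Tier.EXECUTE_WITH_APPROVAL,
}


def check_action(agent_tier: Tier, action: str, has_approval: bool = False) -> str:
    """Return 'allow', 'needs_approval' or 'deny' for an agent action."""
    required = REQUIRED_TIER.get(action)
    if required is None or agent_tier < required:
        return "deny"  # unknown actions and under-tiered agents are denied
    if action == "execute" and not has_approval:
        return "needs_approval"  # execution always waits for a human sign-off
    return "allow"


print(check_action(Tier.RECOMMEND, "execute"))                    # deny
print(check_action(Tier.EXECUTE_WITH_APPROVAL, "execute"))        # needs_approval
print(check_action(Tier.EXECUTE_WITH_APPROVAL, "execute", True))  # allow
```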

Compliance and regulatory expectations (US, UK, Germany/EU)

For most enterprises, the fastest way to bring AI agents into compliance is to map them to regimes you already know.

US

SOX for financial reporting; HIPAA for protected health information; PCI DSS for card data; SOC 2 for service organisations.

UK

UK-GDPR and ICO guidance on automated decision-making, plus PRA/FCA expectations on model risk and operational resilience.

Germany/EU

DSGVO/GDPR plus EU AI Act risk categories. High-risk AI systems (for example in banking, employment or critical infrastructure) will face stricter obligations from 2026–2027, including risk management, data quality, logging and human oversight requirements.

Supervisors such as BaFin and UK financial regulators worry about opaque automation and customer detriment. They will expect you to explain, in plain language, how an agent reached a decision and how you can override or reverse it.

AI agent monitoring, logging and auditability

Every enterprise AI agent should produce a traceable narrative for each task.

The initial user request and system prompts.

Which tools were called, with what parameters and which data sources.

Intermediate reasoning artefacts (where permissible) and the final outputs.

Retention should align with financial, healthcare and sector-specific regulations, and logs must be discoverable for internal audit and regulators. PCI and healthcare guidance already emphasise that AI does not remove your obligations; AI systems must meet the same standards for data security, auditability and access control as any other in-scope system.

Dashboards for risk, compliance and internal audit teams covering agent volume, failure modes, overrides and incidents are now a baseline requirement, not a “nice to have”.
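One way to picture the traceable narrative is as a structured record per task. The sketch below uses Python dataclasses; the field names, the `TaskTrace` and `ToolCall` classes and the sample values are hypothetical, and a real system would write these records to an append-only store with the retention rules discussed above.

```python
import json
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone


@dataclass
class ToolCall:
    tool: str
    parameters: dict
    data_sources: list


@dataclass
class TaskTrace:
    task_id: str
    user_request: str
    system_prompt_id: str
    model: str
    tool_calls: list = field(default_factory=list)
    final_output: str = ""
    started_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def record_tool_call(self, tool: str, parameters: dict, data_sources: list) -> None:
        """Append one tool invocation, with its parameters and data sources."""
        self.tool_calls.append(ToolCall(tool, parameters, data_sources))

    def to_json(self) -> str:
        """Serialise the whole trace, including nested tool calls, as JSON."""
        return json.dumps(asdict(self), sort_keys=True)


trace = TaskTrace("t-1", "Reset my password", "sp-7", "example-model")
trace.record_tool_call("itsm.lookup_user", {"user": "u42"}, ["itsm"])
trace.final_output = "Password reset link sent"
print(trace.to_json())
```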

Measuring ROI & Total Cost of Ownership for Enterprise AI Agents

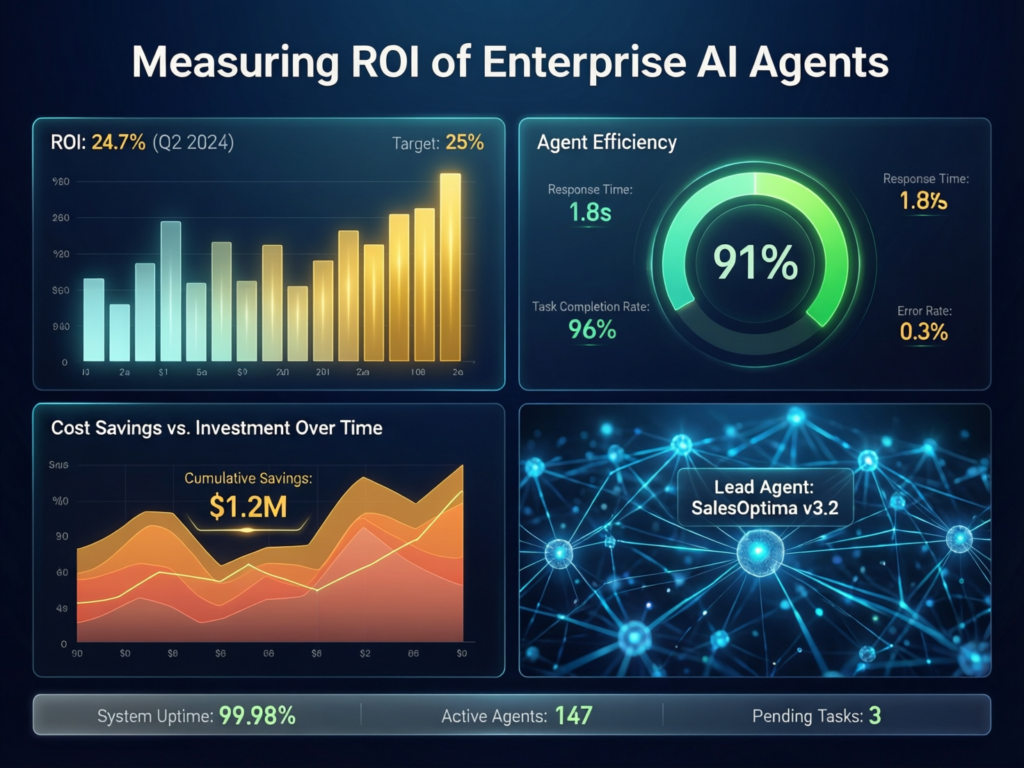

How can enterprises measure ROI for AI agents across service, operations and knowledge work?

Start with baseline metrics for each workflow: handle time, resolution rate, backlog, employee hours on repetitive tasks and error/re-open rates. For every agent use case, estimate hours saved, higher throughput and reduced escalations, then convert that into FTE capacity and quality improvements.

Balance these benefits against platform, infrastructure, integration and governance costs. Mature programmes also explicitly quantify risk reduction (fewer incidents, faster containment), improved customer satisfaction and revenue enablement from better experiences in cities like London, Berlin or New York.

None of this is financial or legal advice; treat ROI models as directional and align them with your own finance, risk and legal teams.
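The conversion from hours saved to FTE capacity and net benefit can be sketched as a short calculation. Every input below is an invented example figure, and the `agent_roi` function and its 1,800 working hours per FTE default are assumptions to replace with your own finance team's numbers.

```python
def agent_roi(
    tickets_per_year: int,
    minutes_saved_per_ticket: float,
    automation_rate: float,
    loaded_hourly_cost: float,
    annual_run_cost: float,
    hours_per_fte: float = 1800,  # assumed working hours per FTE per year
) -> dict:
    """Directional ROI estimate for a single agent use case."""
    hours_saved = tickets_per_year * automation_rate * minutes_saved_per_ticket / 60
    gross_benefit = hours_saved * loaded_hourly_cost
    net_benefit = gross_benefit - annual_run_cost
    return {
        "hours_saved": hours_saved,
        "fte_capacity": hours_saved / hours_per_fte,
        "net_benefit": net_benefit,
        "roi": net_benefit / annual_run_cost,
    }


# Illustrative inputs only, not benchmarks.
result = agent_roi(
    tickets_per_year=120_000,
    minutes_saved_per_ticket=6,
    automation_rate=0.5,
    loaded_hourly_cost=50,
    annual_run_cost=200_000,
)
print(result["hours_saved"], result["roi"])  # 6000.0 0.5
```

The run cost here should include governance and integration effort, not just platform fees; otherwise the ROI figure flatters the use case.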

Value levers and KPIs for enterprise AI agents

Useful KPIs include:

Productivity. Tickets or cases handled per hour, percentage of work fully automated, backlog cleared per week.

Quality. CSAT/NPS, first-contact resolution, error and re-open rates, regulatory breaches avoided.

Speed. Time-to-resolution, cycle times in IT, finance, HR and risk.

By 2025, global AI spending was already forecast to reach around $1.5 trillion, highlighting just how important it is to tie investments to measurable outcomes. ([Gartner][16])

ROI benchmarks and sector examples (US, UK, Germany/EU)

Benchmarks move quickly, but indicative ranges look like this.

US banks in New York City and other hubs: 20–40% reduction in handle time for common service and operations tickets; 10–20% reduction in operational losses from faster incident response.

UK public sector and NHS suppliers: meaningful gains in triage and back-office processing, but stricter limits on autonomy for clinical or citizen-facing decisions.

German manufacturing and Mittelstand: big wins in supply-chain coordination, maintenance and documentation automation when agents are integrated with MES/ERP in places like Frankfurt and Munich.

Local labour costs, union agreements and regulatory friction heavily influence the ROI equation: what’s attractive in Austin may be marginal in London or Frankfurt.

Cost structure and TCO

Total cost of ownership (TCO) spans:

Direct costs. Models, platforms, infrastructure, connectors, observability, security tooling.

Indirect costs. Change management, training, risk and compliance overhead, legal review, incident response.

In many enterprises, the cheapest architecture on paper is not the cheapest over three years once you add governance and support. A simple decision matrix (complexity vs criticality vs internal capabilities) often points to a hybrid: build core agent patterns on a platform, then extend with custom tools and integrations where you differentiate.
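A toy three-year comparison shows how an option with lower direct costs can still lose on total cost once indirect costs are counted. All figures and the `three_year_tco` helper are invented for illustration.

```python
def three_year_tco(
    direct_per_year: float,
    indirect_per_year: float,
    one_off_setup: float = 0,
    years: int = 3,
) -> float:
    """Sum setup cost plus recurring direct and indirect costs over the period."""
    return one_off_setup + years * (direct_per_year + indirect_per_year)


# Invented figures: the in-house option has lower direct costs
# but higher indirect costs (governance, support, change management).
options = {
    "in_house": three_year_tco(150_000, 400_000, one_off_setup=300_000),
    "platform": three_year_tco(350_000, 150_000, one_off_setup=100_000),
}

print(options)                            # {'in_house': 1950000, 'platform': 1600000}
print(min(options, key=options.get))      # platform
```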

Adapting Architecture & Governance for USA, UK and EU Regulations

How should global enterprises adapt AI agent architecture and governance for USA, UK and EU regulations?

Global firms should design a common agent architecture, then layer regional “rails” on top. In the US, focus on sectoral rules (HIPAA, financial regulations, PCI DSS) and SOC 2-style controls. In the UK, align with UK-GDPR, ICO guidance on automated decision-making and PRA/FCA expectations on operational resilience. In the EU, classify agents under the AI Act, enforce strict data residency and prove human oversight for higher-risk decisions.

US operating patterns (regulation, risk and delivery)

In the US, you’ll typically see:

Sector-specific patterns for healthcare (HIPAA + HHS AI strategy), payments (PCI), securities trading and advice (SEC/FINRA).

Agent access aligned with existing SOX, HIPAA, PCI DSS and SOC 2 controls, not separate exceptions.

Regional instances and logging to support state and federal inquiries, often across multiple cloud regions.

A practical example: using regional instances of agents for US healthcare customers, with PHI kept inside HIPAA-compliant environments and separate agents for marketing data.

UK-specific expectations

In the UK, regulators emphasise data dignity and explainability.

UK-GDPR and ICO guidance give individuals rights against solely automated decisions with legal or similar effects, which directly impacts highly autonomous agents in lending, employment or public services.

PRA/FCA supervisors focus on operational resilience, outsourcing risk and model risk management; AI agents must fit inside these frameworks.

Public sector and NHS-style patterns favour cautious use on clinical or citizen data, with strong human oversight and transparent audit trails.

Germany and continental Europe

For Germany and continental Europe, three design themes dominate.

BaFin-heavy environments. Banks and insurers running AI agents in critical processes must meet banking supervision expectations on outsourcing, risk management and IT operations.

EU AI Act obligations. Classify agents by risk (unacceptable, high, limited, minimal) and implement risk management, logging and transparency requirements for high-risk systems.

EU sovereign cloud and data-local architectures. Sensitive workloads often run on EU-only or German sovereign clouds, with agents restricted to local tools, logs and models.

For multinational architectures, this often means running separate EU and non-EU agent stacks with harmonised policies but different data and tool scopes.

Operating Models & Next Steps for CIOs and Transformation Leaders

Target operating model for AI agents

A scalable operating model for enterprise AI agents usually includes:

Roles.

Head of AI, AI product owners, line-of-business sponsors, risk and compliance leads, enterprise architecture, security and data teams.

RACI across the agent lifecycle.

Who owns ideation, design, deployment, monitoring and decommissioning; who can approve higher autonomy levels or new tools.

Platform vs domain balance.

A central platform team defines standards and reusable components, while domain teams own specific agents and business outcomes.

Phased roadmap: from pilots to scaled programmes

A pragmatic roadmap for 2026–2027:

Phase 1

Guardrailed pilots. Low-risk, high-volume workflows (e.g., knowledge search, L1 support) with strong guardrails and human review.

Phase 2

Multi-agent cross-domain automation. Expand to IT, operations and finance workflows, add richer tool access and start GEO-specific deployments.

Phase 3

Enterprise-wide operating model. Embed AI agents into strategy and budgeting, integrate with enterprise risk management and internal audit, and treat agents as part of core process design.

Each phase should have its own risk appetite statement, success metrics and “exit criteria” before you move to the next.
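Exit criteria are easier to enforce when they are written down as data rather than prose. The sketch below is one hypothetical way to do that in Python; the three metrics, their thresholds and the `ExitCriteria` and `unmet_criteria` names are assumptions, and your own criteria would follow each phase's risk appetite statement.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExitCriteria:
    min_automation_rate: float
    max_error_rate: float
    max_open_incidents: int


def unmet_criteria(
    criteria: ExitCriteria,
    automation_rate: float,
    error_rate: float,
    open_incidents: int,
) -> list:
    """Return the names of criteria not yet met; an empty list means ready to advance."""
    unmet = []
    if automation_rate < criteria.min_automation_rate:
        unmet.append("automation_rate")
    if error_rate > criteria.max_error_rate:
        unmet.append("error_rate")
    if open_incidents > criteria.max_open_incidents:
        unmet.append("open_incidents")
    return unmet


# Hypothetical Phase 1 thresholds and pilot results.
phase_1 = ExitCriteria(min_automation_rate=0.5, max_error_rate=0.02, max_open_incidents=0)
print(unmet_criteria(phase_1, automation_rate=0.62, error_rate=0.03, open_incidents=0))
# ['error_rate']
```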

Choosing platforms, partners and next actions

When selecting platforms and partners, use an evaluation checklist covering governance depth, integrations, GEO support and real reference customers in sectors similar to yours.

Specialist partners like Mak It Solutions can help you design multi-cloud, GEO-aware architectures, run AI agent readiness assessments and build agent governance frameworks that align with SOX, GDPR/DSGVO, UK-GDPR and sector rules across the US, UK and EU.

A simple next step is to commission an enterprise AI agent architecture review or governance workshop, focusing on 2–3 concrete use cases and your 2026–2027 regulatory roadmap.

Key Takeaways

Enterprise AI agents move beyond chat to execute multi-step, tool-driven workflows across service, operations and knowledge work.

A secure architecture layers identity, policy, RAG and observability on top of existing systems, with GEO-aware patterns for the US, UK and EU.

Dedicated agent governance (taxonomy, autonomy levels, tool access and audit trails) is now expected by regulators, not just AI-savvy enterprises.

ROI measurement must cover productivity, quality, risk reduction and customer experience, not just FTE savings.

GEO-specific designs for HIPAA, UK-GDPR, DSGVO and the EU AI Act are essential for banks, insurers, healthcare providers and the public sector.

A phased roadmap, strong operating model and the right mix of platforms and partners (including firms like Mak It Solutions) turn pilots into scalable programmes.

If you’re a CIO, CRO or transformation leader in New York, London, Frankfurt or elsewhere in the US, UK or EU, now is the moment to move from isolated AI experiments to governed, GEO-aware enterprise AI agents.

Mak It Solutions’ architecture, cloud and analytics teams can help you review your current stack, design a compliant agent blueprint and prioritise high-impact use cases. Share a few details about your environment and priorities on our contact form, and request an “Enterprise AI Agent Architecture & Governance” session. We’ll respond with a proposed agenda and next steps tailored to your regulators, systems and ambition level.

FAQs

Q : Can enterprise AI agents run entirely on-premises for regulated industries?

A : Yes, enterprise AI agents can run entirely on-premises or within private clouds, but you must treat them like any other high-risk system. For US healthcare or finance, that might mean deploying models and orchestration inside HIPAA-, SOX- or PCI-aligned environments with no internet access and strict network segmentation. In the UK or EU, many firms run agents in sovereign or local clouds with data residency controls and VPN or direct-connect links to on-prem data. The trade-off is more engineering and MLOps complexity, but for some banks, insurers and hospitals, that’s the only viable option.

Q : What skills and roles do enterprises need to build and govern AI agents at scale?

A : You’ll need both technical and governance skills. On the technical side: ML engineers, prompt/agent engineers, software engineers, data engineers and platform SREs. On the governance side: risk and compliance specialists, security architects and business owners who understand processes and regulations in detail. Many enterprises also appoint a head of AI or AI programme director to coordinate architecture, use cases and change management. In regulated sectors, internal audit and model risk teams must be involved early, not after deployment.

Q : How do enterprise AI agents compare with traditional RPA and workflow automation tools?

A : Traditional RPA excels at structured, deterministic tasks (click this button, move this field), while enterprise AI agents handle unstructured inputs, reasoning and decision support. In many 2026 architectures, agents and RPA coexist: the agent interprets email or chat messages, reasons over policies and suggests actions, and RPA bots execute deterministic steps in legacy systems. Compared with RPA-only estates, agentic AI systems are more flexible but also carry more model risk, so they demand stronger governance, testing and monitoring.

Q : What are common failure modes when piloting AI agents, and how can CIOs avoid them?

A : Common failure modes include: picking high-risk use cases first, underestimating integration work, skipping human-in-the-loop review, and treating governance as an afterthought. Many pilots also fail because success metrics are vague (“be more efficient”) rather than tied to handle time, accuracy or risk. CIOs can avoid these traps by starting with narrow, measurable workflows; building a minimum governance framework (taxonomy, policies, logging) before launch; and running structured red-teaming and user acceptance tests. Clear rollback plans and kill switches are essential.

Q : How should enterprises choose between a single “platform of record” for agents vs multiple specialised tools?

A : Choosing between a single platform and multiple specialised tools is a governance vs flexibility decision. A single platform simplifies security, IAM, observability and compliance, which is attractive for highly regulated enterprises in the United States, the UK or Germany. Multiple specialised tools, on the other hand, can deliver faster innovation in specific domains (customer service, DevOps, finance) but increase integration and governance overhead. Many large organisations land on a “federated” model: one or two strategic platforms that set standards, plus a small number of domain-specific tools that plug into shared identity, logging and policy services.