Generative AI Security Risks in the Workplace 2025

Generative AI Security Risks in the Workplace 2025

Generative AI Security Risks at Work: Productivity vs Reality

Generative AI can dramatically boost employee productivity, but it also introduces new security, privacy and compliance risks such as data leakage, shadow AI and prompt injection attacks. Organisations in the US, UK and EU need a clear governance framework, secure architectures and data loss prevention controls to safely capture the upside while protecting regulated and sensitive data.

Introduction

Generative AI is now embedded in everyday work from quick email drafts to full-blown code generation. For many teams in New York, London, Berlin or Amsterdam, it already feels like a productivity booster that’s impossible to roll back.

At the same time, CISOs, DPOs and security leaders see a very different picture: uncontrolled data flows, opaque AI supply chains and “shadow AI tools” that bypass traditional controls. The core question is no longer whether employees use generative AI at work, but how to balance its productivity benefits with security, privacy and regulatory obligations across the US, UK, Germany and the wider EU.

Productivity Promise vs Security Reality

Employees in US, UK and European organisations already use generative AI every day for writing, summarising, coding and analysis—often before security or legal teams have signed anything off. Surveys in 2024 suggest that well over half of knowledge workers have used tools like ChatGPT or Copilot for work tasks, with a significant share doing so without formal approval.

How employees already use generative AI in US, UK and European workplaces

Knowledge workers typically start with “lightweight” tasks: email drafting, meeting summaries, slide outlines and code snippets. In US tech hubs like San Francisco or Seattle, developers lean heavily on AI copilots for boilerplate code, test generation and log analysis. In London or Manchester, marketing and digital teams use AI for campaign copy, SEO ideas and social media planning.

In continental Europe Berlin, Paris, Amsterdam or Dublin teams often combine generative AI with existing data platforms, for example piping analytics outputs into an LLM to create executive-ready summaries. Banks and insurers in BaFin-regulated Germany experiment with internal chatbots for policy questions, while being far stricter about using public models for client-specific data.

Generative AI productivity gains for knowledge workers and employees

Early studies show that generative AI can cut certain knowledge tasks like drafting and summarisation by 20–40% in time, with the largest gains for less experienced staff. Developers report similar accelerations when pairing AI code assistants with modern cloud platforms such as Azure, AWS or Google Cloud.

Concrete benefits include:

Faster document and email creation

Quicker decision support via “chat with your data” use cases

Reduced context-switching when AI is embedded in tools like Microsoft 365 Copilot

But these gains often come before a formal risk assessment, let alone robust enterprise AI governance and risk management.

Why CISOs and data protection leaders are worried about GenAI at work

Security leaders see three overlapping problems.

Uncontrolled data flows

Sensitive data pasted into public tools with unclear retention and training practices.

New attack surfaces

Prompt injection, model manipulation and AI-enabled phishing that existing SOC playbooks don’t fully cover.

Regulatory uncertainty

How GDPR/DSGVO, UK GDPR, the EU AI Act, HIPAA or PCI DSS apply to generative AI in practice.

In short, productivity is real but so is the AI exposure gap between what employees are doing and what security controls can currently see.

What are the most serious generative AI security risks for companies today?

The most serious generative AI security risks for companies today include data leakage, shadow AI tools, prompt injection attacks, model manipulation, supply chain risks, AI-powered phishing, compliance violations and loss of intellectual property. For CISOs in US, UK and EU organisations, these risks translate into financial loss, regulatory penalties and reputational damage if left unmanaged.

Top generative AI security risks (snippet-ready list)

Data leakage via prompts, outputs and logs

Shadow AI tools outside SSO, DLP and CASB

Prompt injection and indirect prompt injection attacks

Model manipulation and poisoning (including third-party APIs)

AI-assisted phishing, fraud and social engineering

IP loss and trade secret exposure

Compliance failures (GDPR, UK GDPR, EU AI Act, HIPAA, PCI DSS, SOX, SOC 2)

prompt injection, model manipulation and the AI exposure gap

Prompt injection attacks trick an AI system into ignoring its instructions, leaking secrets or executing malicious actions. Indirect prompt injection uses content from websites, PDFs or emails that the model reads—attackers plant hidden instructions in data, not just in the user’s prompt.

Model manipulation goes further: adversaries can attempt data poisoning (polluting training data), jailbreak models or exploit weak guardrails in third-party APIs. Because many GenAI systems are integrated into productivity suites, ticketing tools or cloud infrastructure, this creates a new, hard-to-monitor attack surface for SOC teams.

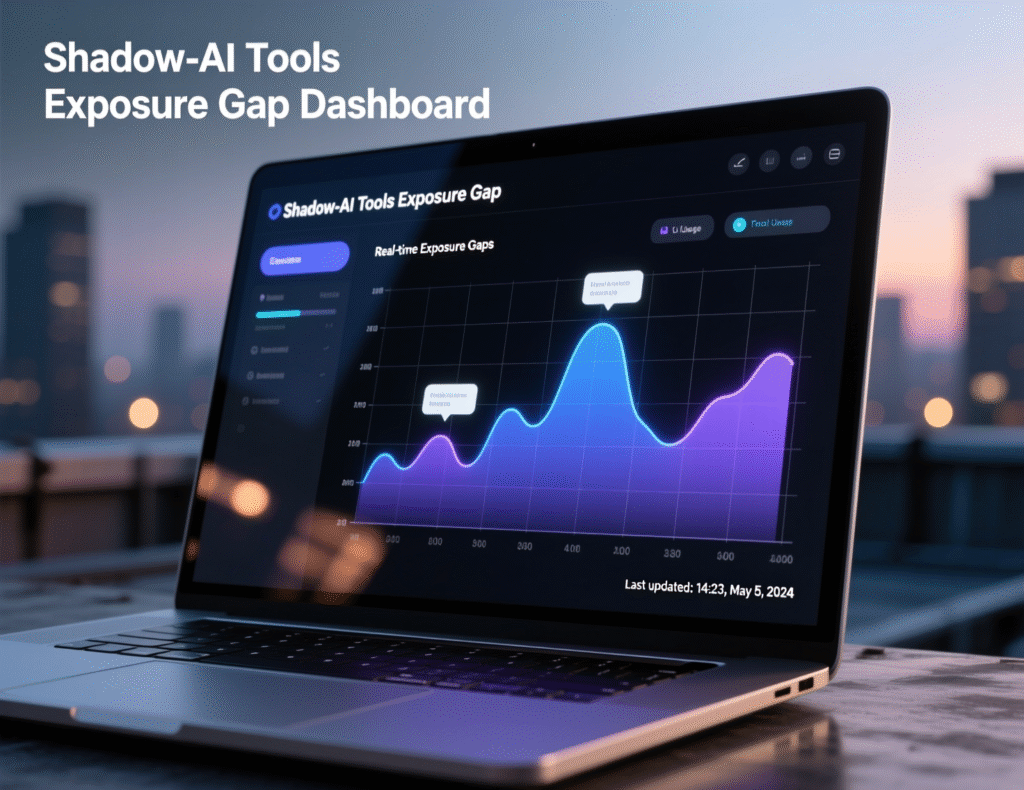

The “AI exposure gap” is the difference between where generative AI is actually used (employees, teams, tools) and where your existing security controls DLP, CASB, EDR, SIEM have visibility and policies.

Generative AI data leakage, IP loss and privacy violations

From a GDPR/DSGVO or UK GDPR perspective, uncontrolled use of generative AI can involve unlawful processing, unclear data transfers and missing transparency.If a sales team in Frankfurt pastes customer PII into a US-hosted chatbot with training retention switched on, that may trigger Schrems II concerns and require SCCs or additional safeguards.

In the US, HIPAA-regulated healthcare providers must avoid exposing PHI to tools that are not clearly designated as HIPAA-compliant business associates. Similarly, PCI DSS environments must keep cardholder data away from unmanaged AI services. IP loss is another big concern: engineers sharing internal architecture docs with public copilots may accidentally disclose trade secrets that can’t be “unleaked”.

Human-centric threats: phishing, fraud and social engineering powered by GenAI

Generative AI lowers the barrier for convincing phishing emails, deepfake audio and synthetic documents in any language. Attackers can now generate thousands of tailored spear-phishing messages targeting finance teams in New York, NHS trusts in London or industrial operators across the DACH region.

Industry reports indicate that AI-enhanced phishing volumes have grown noticeably since 2023, with higher click-through rates due to better localisation and tone. [VERIFY LIVE] In regulated sectors such as open banking, BaFin-supervised finance or NIS2-critical infrastructure, this directly impacts fraud losses, incident response workloads and regulatory reporting obligations.

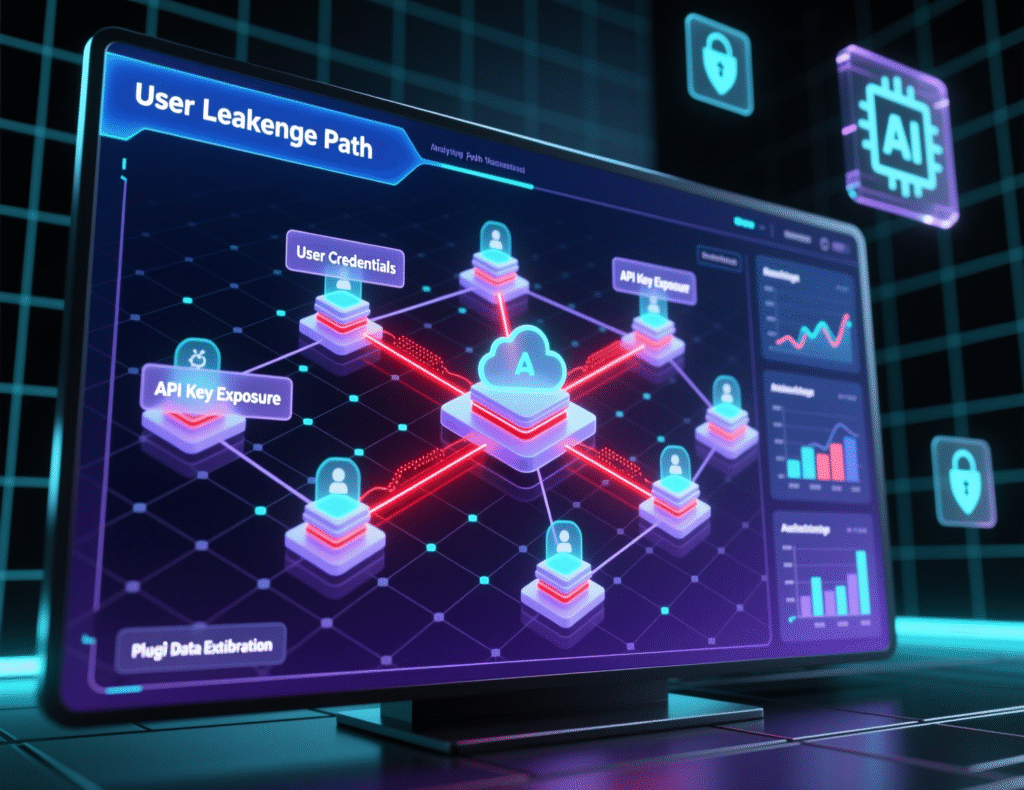

How can generative AI cause data leakage at work?

Generative AI can cause data leakage at work when employees input sensitive information into prompts, when models generate outputs that contain more data than intended, or when logs, plug-ins and integrations store data in uncontrolled locations. Leakage can involve personal data, financial details, health data, credentials, source code, contracts and other confidential records.

Where sensitive data can leak: prompts, outputs, logs, plug-ins and integrations

Common leakage paths include

Prompts

Employees paste customer lists, PHI, cardholder details or internal strategy docs directly into chat windows.

Outputs

Models sometimes regurgitate training or context data, exposing more than the user should see.

Logs and telemetry

Providers may log prompts and outputs for quality, security or product analytics.

Plug-ins and extensions

Browser plug-ins or office add-ins may sync data via their own clouds.

Integrations

BI tools, CRM systems or code repos wired into AI agents can widen the blast radius if access controls are weak.

Traditional DLP rules built for email and file shares often struggle to detect these patterns without GenAI-aware policies.

what happens to your data in US, UK and EU contexts

Public GenAI tools typically offer some combination of opt-in/opt-out training, region-based storage and enterprise plans with the fine print varying widely. Enterprise platforms on Azure, AWS or Google Cloud increasingly support dedicated instances, VPC isolation and data residency in EU or UK regions designed to align with GDPR and UK GDPR requirements.

For EU organisations, Schrems II still matters: exporting personal data to US-based providers requires SCCs and additional safeguards, even if the model is “EU hosted”. For UK and US entities, sectoral rules (NHS guidance, HIPAA, state privacy laws) may dictate whether public tools can be used at all or only via specific enterprise agreements.

US healthcare, European finance and UK public sector teams

US healthcare (HIPAA)

A clinician in Seattle pasting unredacted patient notes into a non-HIPAA-aligned chatbot risks an impermissible disclosure and reportable breach.

European finance (BaFin/ECB)

A risk model team in Frankfurt using public LLMs for stress-test narratives may violate outsourcing and data processing expectations if contracts and controls aren’t in place.

UK public sector (NHS, local government)

NHS trusts and councils are guided by NCSC and ICO recommendations emphasising DPIAs, minimisation and strong supplier due diligence before using AI with citizen data.

In each case, “harmless experimentation” can quietly turn into a notifiable data breach.

Why is “shadow AI” in the workplace so dangerous for security and compliance?

Shadow AI is dangerous because it creates invisible channels where sensitive data flows into unsanctioned AI tools that are outside corporate monitoring, DLP, SSO and incident response processes. This AI exposure gap makes it difficult for CISOs to prove compliance, respond to breaches or meet GDPR, UK GDPR, EU AI Act or sectoral obligations.

What counts as shadow AI tools in 2025

Shadow AI includes any AI tools, plug-ins or copilots used for work without formal approval or governance. Examples.

Personal accounts on public chatbots used with work data

Browser extensions that “summarise this page” or “rewrite this email”

Unvetted AI copilots wired into IDEs, CRM systems or cloud consoles

Team-level experiments on free tiers of SaaS AI platforms

By 2025, many organisations in New York, London, Berlin or Paris find hundreds of such tools when they finally inventory web traffic and OAuth grants. [VERIFY LIVE]

How shadow AI creates AI exposure gaps and bypasses corporate controls

Shadow AI bypasses:

SSO and IAM

No strong auth, no de-provisioning when staff leave

DLP and CASB

Traffic often appears as generic HTTPS to popular domains

Vendor due diligence

No DPIA, no security review, no contractual guarantees

As a result, your AI exposure gap widens: you may publish a strict generative AI policy, while reality is dozens of unapproved tools handling regulated data across US, UK and EU operations.

Detecting and reducing shadow AI across US, UK and European organisations

Practical steps include

Using secure web gateways, CASB and DNS logs to discover AI domains and plug-ins

Classifying tools by data sensitivity and region (e.g., EU personal data vs. US-only internal docs)

Establishing an “allow, restrict, block” catalogue for GenAI tools

Offering secure, well-documented alternatives (e.g., private LLM instances) to reduce the need for workarounds

NCSC, ENISA and national agencies like ANSSI regularly emphasise discovery and asset inventory as the first step in AI and cyber risk management.

How can organisations balance generative AI productivity with strong security controls?

Organisations can balance generative AI productivity with strong security by following a simple framework: discover, govern, enable. First, discover how AI is actually used; second, define governance, policies and technical controls; third, safely enable high-value use cases on secure platforms.

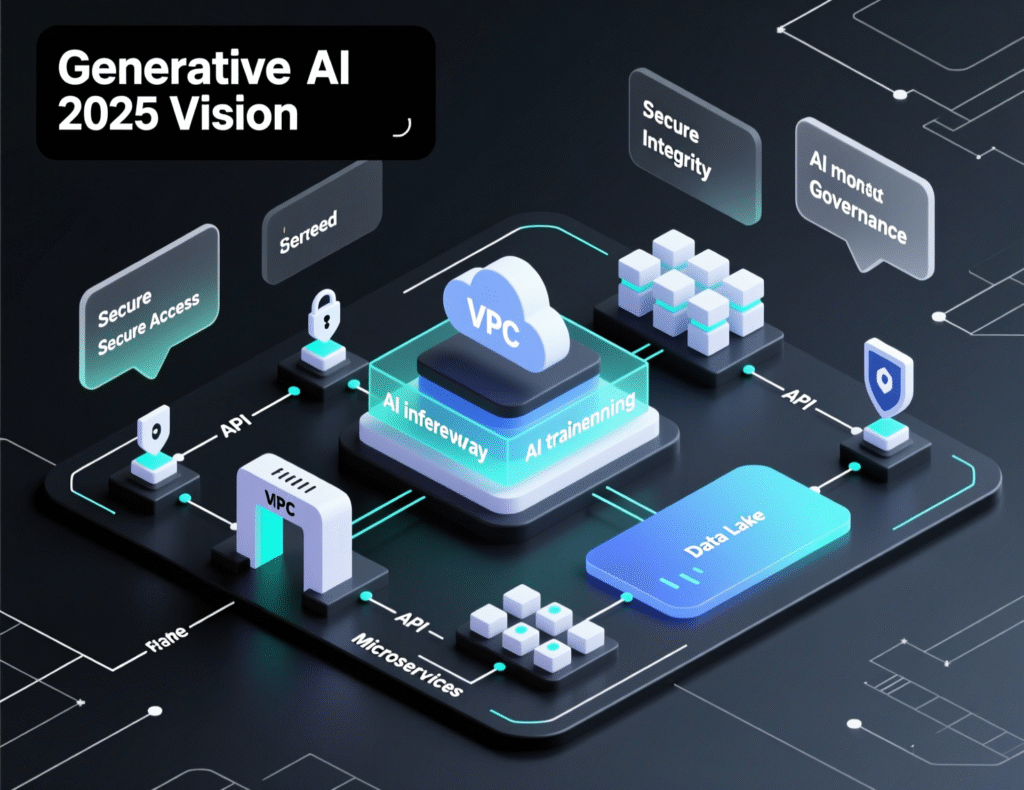

Secure GenAI architecture: private instances, VPCs and enterprise-grade platforms

Rather than banning AI outright, many CISOs are steering usage to secure reference architectures:

Private LLM instances hosted in VPCs on Azure, AWS or Google Cloud

Enterprise plans with clear data retention, training and residency commitments

Network controls that keep AI traffic within regional boundaries (e.g., EU regions for GDPR)

Integration with existing identity providers (Entra ID, Okta) and logging stacks

This approach lets a bank in Frankfurt or an NHS trust in Manchester provide AI chat and copilots with strong isolation, auditability and change management.

Data loss prevention for generative AI: policies, DLP rules and access controls

Data loss prevention for generative AI means adapting your existing DLP, IAM and zero-trust programmes:

Add GenAI-specific DLP rules (e.g., blocking card numbers in prompts to public tools)

Enforce role-based access to sensitive connectors (CRM, EMR, finance) within AI platforms

Tag and classify data sources before exposing them to AI agents

Monitor usage patterns for anomalous activity or bulk exports

Mak It Solutions often sees success when clients align GenAI DLP with their broader and data governance programmes instead of treating it as a separate silo.

Training, playbooks and “guardrail by design” for employees

Security-aware enablement includes:

Short, role-specific training on safe prompting and red-line data (e.g., PHI, card data, state secrets)

Clear playbooks for acceptable use in remote and hybrid settings

Guardrail-by-design UX pre-configured templates, redaction helpers, built-in consent notices

In US enterprises, for example, finance teams in New York might use AI only with redacted datasets, while EU teams in Dublin or Amsterdam operate under stricter consent and transfer rules driven by GDPR and the EU AI Act.

Governance and compliance for generative AI

To govern generative AI under GDPR, UK GDPR and the EU AI Act, organisations need a structured approach covering legal bases, DPIAs, data minimisation, vendor assessments and continuous monitoring. A practical checklist includes: inventory use cases, classify risks, perform DPIAs, define policies, update contracts, implement technical controls and review regularly.

Key legal and regulatory requirements across US, UK, Germany and the wider EU

Key frameworks include

GDPR/DSGVO and UK GDPR

Lawful basis, transparency, DPIAs, data subject rights, international transfers.

EU AI Act

Risk-based obligations for high-risk AI systems, transparency and technical documentation.

NIS2

Cybersecurity risk management and reporting for essential and important entities across energy, transport, health, digital infrastructure and more.

US sectoral rules

HIPAA for healthcare, PCI DSS for payments, SOX and SOC 2 for financial reporting and controls.

German entities must also respect national guidance (e.g., BaFin circulars, DSK recommendations), often in close dialogue with works councils.

Building an enterprise generative AI policy and acceptable use guidelines

A strong generative AI policy should define:

Approved tools and use cases (by department and region)

Prohibited data types and actions (e.g., feeding live customer PII into public tools)

Requirements for DPIAs and privacy reviews

Logging, monitoring and retention expectations

Incident response procedures for AI-related breaches

Mak It Solutions often aligns AI policies with existing and security standards so that AI becomes part of the broader secure SDLC, not an add-on.

AI risk management frameworks for NIS2-critical and regulated entities

NIS2-critical operators, banks and large SaaS providers should integrate generative AI into their overall cyber and operational risk frameworks:

Map AI systems to critical services and assets

Use NCSC, ENISA and ANSSI guidance on secure architecture, logging and incident response as baselines

Align with ISO 27001/27701 or SOC 2 where applicable

Ensure AI platforms feature in business continuity and disaster recovery plans

ENISA’s technical implementation guidance for NIS2 explicitly stresses risk-based controls, evidence of implementation and continuous improvement all directly relevant to GenAI.

How CISOs can close AI exposure gaps in US, UK and European workplaces

The AI exposure gap is the difference between where generative AI is used across your workforce and where your security, compliance and governance controls actually apply. CISOs can reduce this gap in three steps: discover current use, consolidate on secure platforms and embed GenAI risks into ongoing governance and board reporting.

discover, inventory and classify generative AI use at work

A pragmatic first-90-days plan.

Discover

Use network, CASB and identity logs to map shadow AI domains, plug-ins and OAuth grants.

Inventory and classify

Group use cases by sensitivity (e.g., marketing vs. claims handling vs. patient data) and geography (US, UK, EU).

Stabilise

Issue interim guidance, block clearly risky tools, and fast-track one or two secure enterprise AI options.

For a multinational with teams in New York, London and Munich, this quickly surfaces regional differences in regulatory exposure, especially for GDPR/DSGVO and UK GDPR workloads.

Roadmap for secure generative AI at scale

Once the immediate exposure is understood, CISOs can:

Move from isolated pilots to a small number of vetted platforms (e.g., Azure OpenAI in EU regions, private models on AWS)

Embed AI controls into vendor risk management, SOC 2 reviews and contract templates

Define architecture and integration patterns (e.g., API gateways, tokenisation, data minimisation)

This roadmap helps avoid a patchwork of disconnected experiments and instead builds a scalable, auditable AI platform layer.

Turning generative AI risk into a managed program

Boards increasingly ask not “Are we using AI?” but “Are we doing it safely?”. Useful KPIs include:

Number of sanctioned vs. unsanctioned AI tools over time

Percentage of high-risk use cases covered by DPIAs and formal policies

GenAI-related incidents, near misses and phishing detections

Coverage of GenAI DLP rules across channels

Framing generative AI within existing cyber and compliance dashboards makes it easier to justify investment in discovery tooling, secure platforms and expert partners such as Mak It Solutions.

FAQs

Q : Can employees safely use public tools like ChatGPT with confidential company data?

A : In most cases, employees should not paste confidential or regulated data into public generative AI tools unless there is a formally approved enterprise agreement and clear guidance from security and legal teams. Public chatbots may retain prompts and outputs for logging, abuse detection or model improvement, and data may be processed outside your jurisdiction. To stay compliant with GDPR, UK GDPR, HIPAA or PCI DSS, treat confidential data as “no-go” for personal AI accounts and prefer managed, enterprise AI platforms with signed DPAs and strong controls.

Q : Should our organisation build its own generative AI model or rely on a vendor platform for better security and compliance?

A : Building your own model offers maximum control but requires deep ML, infrastructure and security expertise, plus ongoing governance under frameworks like the EU AI Act. Most CISOs in US, UK and EU enterprises currently favour reputable vendor platforms (e.g., on Azure, AWS or Google Cloud) with private instances, strong contractual assurances and regional hosting, then layering their own identity, DLP and logging on top. This usually provides a faster, more secure path than training and operating foundation models entirely in-house.

Q : How do data residency and cross-border transfer rules affect which generative AI tools we can use in the EU and UK?

A : For EU and EEA data subjects, GDPR and Schrems II require that personal data transfers to third countries such as the US are covered by SCCs and appropriate safeguards, even if tools claim “EU hosting”. UK GDPR imposes parallel requirements for transfers from the UK. This means you should favour AI providers that can guarantee regional processing (e.g., EU or UK regions), clear subprocessor lists and documented transfer mechanisms, and avoid tools that cannot explain where data is stored or accessed.

Q : What should be included in a generative AI acceptable use policy for remote and hybrid workers?

A : A good acceptable use policy explains which AI tools are allowed, which data types are prohibited, and how employees should protect credentials and client information when working remotely. It should cover safe prompting, restrictions on uploading customer or citizen data, rules for using AI on personal devices and expectations for logging, monitoring and incident reporting. Many organisations also include simple examples “do” and “don’t” scenarios for staff in sales, engineering, legal and support roles.

Q : How often should we reassess generative AI risks and update our governance framework in US, UK and European operations?

A : Given the pace of AI change and evolving regulation, reassessing generative AI risks at least annually is a minimum, with more frequent reviews for high-risk sectors such as healthcare, finance and critical infrastructure. In practice, many CISOs now align AI risk reviews with existing GDPR DPIA cycles, ISO 27001 audits or NIS2 readiness programmes. Major events new AI platforms, regulatory updates, significant incidents should also trigger interim reviews and policy updates across US, UK and EU operations.

Key Takeaways

Generative AI already delivers tangible productivity gains for employees, but it also introduces data leakage, shadow AI and new technical attack surfaces.

The most serious risks cluster around data protection, human-centric threats and an AI exposure gap where tools and usage outpace governance and monitoring.

Data loss prevention for generative AI means adapting DLP, IAM and zero-trust controls to prompts, outputs, logs, plug-ins and AI integrations.

Governance under GDPR, UK GDPR, the EU AI Act, HIPAA, PCI DSS and NIS2 requires DPIAs, clear policies, vendor due diligence and continuous monitoring.

CISOs can close AI exposure gaps via a 90-day plan: discover and inventory usage, consolidate on secure platforms, then embed GenAI into existing risk and board reporting frameworks.

If you’re a CISO, CIO or security leader wrestling with generative AI in a multi-region environment, you don’t have to solve it alone. Mak It Solutions helps organisations in the US, UK and Europe design secure GenAI architectures, governance frameworks and data protection controls tailored to their reality.

Ready to turn generative AI from a shadow risk into a managed advantage? Book a consultation with Mak It Solutions to scope your AI exposure gap and design a secure, productivity-friendly roadmap. ( Click here’s )