Serverless Architecture Use Cases: Where It Shines

Serverless Architecture Use Cases: Go / No-Go 2025

Serverless architecture is best for event-driven, pay-per-use workloads such as APIs, automation workflows, and data pipelines where you don’t want to manage servers yourself. It’s usually the wrong choice for long-running, ultra-low-latency, or heavily regulated workloads that need strict control over infrastructure, networking, and data flows.

In practical terms: reach for serverless when your traffic is spiky, stateless, and short-lived. Reach for containers or VMs when your workloads are always-on, heavy, or under tight regulatory scrutiny in the US, UK, or EU.

Introduction

Serverless today is almost “cloud-native on autopilot”: you ship functions and glue them together with managed services; your provider handles scaling, patching, and much of the underlying ops. In 2025, SaaS, fintech, and enterprise teams in the US, UK, Germany and across the EU are revisiting serverless as cloud costs rise, regulatory pressure grows, and teams want to ship faster without exploding headcount.

The global serverless computing market is estimated at around $28 billion in 2025, with forecasts that it could exceed $90 billion by 2034. At the same time, worldwide public cloud spending is expected to surpass $700 billion in 2025, so choosing the right hosting model now has real budget impact.

This guide walks through the most important serverless architecture use cases, where it fails, how it compares to VMs, containers and PaaS, and a pragmatic decision framework (with GEO/compliance nuance) so you can leave with a clear go / no-go answer for your next project.

Serverless Architecture Use Cases at a Glance

What Counts as Serverless Architecture Today?

Modern serverless architecture combines two big ideas:

Function as a Service (FaaS): functions that run on demand on platforms like AWS Lambda, Azure Functions, Google Cloud Functions / Cloud Run functions, and Cloudflare Workers.

Backend as a Service (BaaS) / managed backends: services like DynamoDB, Firebase, S3, Cosmos DB, managed queues, and auth systems that you don’t administer yourself.

Key characteristics of serverless in 2025:

Scale-to-zero: no traffic = no active instances (ideal for spiky or low-traffic workloads).

Pay-per-use cloud computing model: billed per request, GB-second, or operation, not per always-on server.

Managed infrastructure: the provider handles OS patching, cluster scaling, and much of the security baseline.

Event-driven architecture in the cloud: functions respond to events (HTTP calls, file uploads, queue messages, cron schedules, IoT telemetry).

Top 5 Serverless Use Cases in Modern SaaS and APIs

For SaaS and API-driven apps in New York, London, Berlin or beyond, the most common serverless architecture use cases are:

Web & mobile backends and microservices

Stateless HTTP APIs, auth flows, user profile CRUD, and notifications.

Public APIs & partner integrations

Open Banking APIs in the UK, B2B integrations with EU partners, internal APIs powering US product teams.

Event-driven automation

Webhook consumers (Stripe, Shopify), image processing on upload, scheduled billing runs, CRM syncs.

Data & ETL pipelines

Micro-batch ETL jobs, log enrichment, and real-time analytics ingestion into BigQuery, Redshift, or Snowflake.

IoT & real-time streams

Device telemetry, Industry 4.0 production lines in Germany, smart city sensors streaming into Kinesis, Event Hubs, or Pub/Sub.

Surveys suggest that more than 70% of AWS customers and around 60% of Google Cloud customers already use at least one serverless service.

When Serverless Is Usually the Wrong Tool

Patterns where serverless is often a bad fit:

Long-running or CPU-intensive jobs: ML training, large batch jobs, video encoding farms.

Ultra-low-latency trading or real-time gaming where every millisecond matters.

Consistently high-throughput workloads that run hot 24/7 (containers/VMs often win on cost).

Heavily regulated workloads needing tight network controls, custom HSMs, or non-standard hardening.

AEO Micro-answer: Common serverless use cases

The most common real-world serverless architecture use cases for modern SaaS and API-driven applications are stateless web and mobile backends, public APIs and microservices, event-driven workflows (like webhooks and scheduled jobs), lightweight data/ETL pipelines, and IoT or real-time streaming pipelines.

High-Impact Use Cases

Web & Mobile Backends, APIs, and Microservices

For new products in San Francisco or Seattle, or UK fintechs in London building Open Banking APIs, serverless is often the fastest way to ship a secure, scalable backend.

Typical patterns

Stateless HTTP APIs via API Gateway + Lambda, Azure Functions + API Management, or Cloud Run / Cloud Functions.

Authentication flows: user sign-up/login, password resets, MFA checks.

CRUD for profiles, subscriptions, and preferences.

Webhook handlers for payments, invoices, and notifications.

For EU B2B platforms serving customers in Berlin, Munich, or Amsterdam, serverless APIs deployed in eu-central-1 (Frankfurt) or eu-west-1 (Dublin) help meet GDPR/DSGVO data-residency expectations while keeping ops light.

File Uploads, Webhooks, Queues & Schedules

Serverless really shines when events drive your workloads.

File uploads: resize images or transcode video when a file lands in S3, Blob Storage or Cloud Storage.

Background tasks: sending email/SMS, billing runs, nightly reconciliations.

Stripe / payment webhooks: react to invoice.payment_succeeded or dispute events in milliseconds.

Queues & schedules: SNS/SQS, Azure Service Bus, or Pub/Sub + Cloud Scheduler for periodic or retryable tasks.

In cities like New York, London, or Berlin, SaaS teams often see extremely spiky traffic (launch days, campaigns, seasonal peaks). With serverless, you get scale-to-zero microservices between bursts and automatic scale-out when traffic spikes, without capacity planning or overprovisioning.
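Webhook providers commonly redeliver events, so the handlers described above need to be safe to run more than once. Here is a minimal sketch of an idempotent webhook handler, assuming a Lambda-style `handler(event, context)` call shape and a JSON body that carries `id` and `type` fields. The names `handle_webhook` and `_processed_ids` are hypothetical, and the in-memory set only stands in for a durable store.

```python
import json

# Hypothetical in-memory dedupe store. A real deployment would use a durable
# table with a conditional write, because function instances do not share memory.
_processed_ids = set()


def handle_webhook(event, context=None):
    """Process a webhook delivery at most once per event id."""
    payload = json.loads(event["body"])
    event_id = payload["id"]

    # Providers may redeliver the same event; acknowledge duplicates
    # without repeating side effects.
    if event_id in _processed_ids:
        return {"statusCode": 200, "body": json.dumps({"status": "duplicate"})}

    if payload["type"] == "invoice.payment_succeeded":
        # Placeholder for the real side effect (for example, marking an invoice paid).
        result = "invoice_marked_paid"
    else:
        result = "ignored"

    _processed_ids.add(event_id)
    return {"statusCode": 200, "body": json.dumps({"status": result})}
```

Returning a 200 for duplicates is a deliberate choice in this sketch: it tells the sender to stop retrying an event you have already handled.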

Data Processing, ETL, and Real-Time / IoT Streams

For analytics and Industry 4.0 use cases in the DACH region, serverless is ideal for small, composable ETL steps.

Streams & telemetry: ingest IoT sensor data via Kinesis + Lambda, Event Hubs + Functions, or Pub/Sub + Cloud Functions/Dataflow.

Micro-batch ETL: transform data every few minutes, enrich logs, scrub PII before it lands in your warehouse.

IoT use cases: predictive maintenance, production quality checks, smart logistics and fleet telemetry.
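To make the micro-batch ETL step concrete, here is a small sketch of a PII-scrubbing transform that a function could run on each batch before loading it into a warehouse. The field names (`email`, `phone`), the `[REDACTED]` marker, and the function names are assumptions for illustration. The email pattern is deliberately simple and is not a complete PII detector.

```python
import re

# Simple pattern for email addresses; real PII detection needs broader rules.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def scrub_record(record, sensitive_keys=("email", "phone")):
    """Return a copy of the record with sensitive fields and inline emails masked."""
    clean = {}
    for key, value in record.items():
        if key in sensitive_keys:
            clean[key] = "[REDACTED]"
        elif isinstance(value, str):
            clean[key] = EMAIL_RE.sub("[REDACTED]", value)
        else:
            clean[key] = value
    return clean


def transform_batch(records):
    """One micro-batch step: scrub every record before it is loaded downstream."""
    return [scrub_record(r) for r in records]
```

Because each step takes a list and returns a list, it composes with other small steps (enrichment, filtering) in the same function or in a chain of functions.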

Analysts put the function-as-a-service (FaaS) market at roughly $15–17 billion in 2024, with growth rates above 25–30% annually through 2030. That growth is driven largely by these real-time, event-driven data patterns.

AEO Micro-answer: Why serverless for spiky traffic?

Serverless often beats traditional VM or container hosting for spiky, event-driven traffic because it scales automatically with demand, bills per request or GB-second instead of per idle server, and can scale to zero when idle. That combination reduces cost and removes a lot of capacity-planning overhead for bursty workloads.

Limitations, Pitfalls, and ‘Do Not Use’ Cases

Long-Running, CPU-Intensive, and Stateful Workloads

Most FaaS platforms enforce execution time limits (often in the 5–15 minute range) and charge per GB-second. That quickly becomes painful for:

ML training jobs on large models.

Video encoding farms for streaming platforms.

Complex financial batch jobs that run for hours.

For these, it’s usually cheaper and simpler to run on:

Reserved or savings-plan VMs on AWS, Azure, or GCP.

Kubernetes clusters with autoscaling tuned for batch workloads.

This is especially true for US or EU enterprises that already run container platforms and want predictable, controllable compute for heavy workloads.

High-Throughput, Latency-Critical, or Always-On Systems

Serverless functions still face:

Cold start latency (typically ~200–700 ms depending on language and config).

Concurrency limits and noisy neighbors in multi-tenant environments.

Less control over underlying hardware and networking.

If you’re running:

A trading platform in London or Frankfurt,

A multiplayer gaming backend, or

Ultra-low-latency fintech APIs,

you’re usually better off on tuned Kubernetes or bare VMs where you control CPU pinning, warm pools, and network paths end-to-end.

Cost Overruns and Compliance Traps in Regulated Sectors

Three big gotchas for regulated sectors:

Hidden cost risks

Chatty microservices calling each other thousands of times per request.

Unbounded concurrency when a sudden spike triggers millions of parallel executions.

Verbose logging of every function invocation.

Compliance nuance

HIPAA workloads in US healthcare must align with HHS/OCR rules.

PCI DSS for payments demands strong network segmentation and logging controls.

GDPR/DSGVO and UK-GDPR require data minimisation, strict residency and DPIAs for high-risk processing.

Sector-specific expectations

NHS or EU public-sector workloads often need detailed infra evidence (subnets, firewalls, OS baselines) that’s harder to provide with fully managed serverless.

In Germany, BaFin and BSI expectations around outsourcing and operational risk can make full serverless adoption more complex for banks and insurers.

AEO Micro-answer: Workloads that are not a good fit

Serverless is usually a poor fit for long-running or CPU-intensive workloads, ultra-low-latency trading or gaming systems, always-on high-throughput services, and heavily regulated workloads that need strict, auditable control of the underlying infrastructure, network and data flows.

VMs, Containers, and PaaS

Serverless vs VM-Based Architectures and Classic Hosting

VMs / classic hosting are still the best choice when:

You’re lifting-and-shifting a legacy .NET/Java monolith inside a US enterprise.

You need custom OS-level agents, niche runtimes, or specialised networking.

You value predictable monthly spend from reserved instances.

Serverless tends to win when:

You’re building a new SaaS backend from scratch.

You want to avoid managing patching, scaling groups, and base images.

You’re comfortable with managed services and event-driven patterns.

Many Mak It Solutions clients mix these approaches, running legacy components on VMs while building new APIs serverless-first, then gradually modernising over time (similar to the cloud trade-offs discussed in their cloud repatriation insights). (makitsol.com)

Serverless vs Kubernetes and Containers for High-Throughput Workloads

For US startups running spiky workloads, serverless can look very cheap early on: you pay only when code runs. But as throughput stays high and consistent, running on EKS/AKS/GKE or another Kubernetes platform often becomes more cost-efficient.
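A rough way to see where that crossover sits is to model both bills. The sketch below assumes a simple FaaS pricing shape (a per-request fee plus a per-GB-second fee) and compares it with a fixed monthly container or VM bill. The default prices are illustrative placeholders, not quoted rates, so substitute your provider's current pricing for your region.

```python
def faas_monthly_cost(requests, avg_ms, memory_gb,
                      price_per_million_requests=0.20,
                      price_per_gb_second=0.0000167):
    """Estimate monthly FaaS cost from request volume, duration, and memory."""
    gb_seconds = requests * (avg_ms / 1000.0) * memory_gb
    request_cost = requests / 1_000_000 * price_per_million_requests
    return request_cost + gb_seconds * price_per_gb_second


def break_even_requests(fixed_monthly_cost, avg_ms, memory_gb,
                        price_per_million_requests=0.20,
                        price_per_gb_second=0.0000167):
    """Monthly request volume at which FaaS cost equals a fixed container/VM bill."""
    cost_per_request = (price_per_million_requests / 1_000_000
                        + (avg_ms / 1000.0) * memory_gb * price_per_gb_second)
    return fixed_monthly_cost / cost_per_request


# Example: 100 ms average duration at 0.5 GB, compared with a $100/month fixed bill.
volume = break_even_requests(100, avg_ms=100, memory_gb=0.5)
```

With these placeholder numbers the break-even lands at roughly 97 million requests per month. Below that volume the pay-per-use model is cheaper in this simplified model; above it the fixed bill wins. The model ignores data transfer, API gateway fees, and operator time, all of which can shift the result.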

For EU enterprises with strict networking and security controls, containers shine because you can:

Standardise across teams.

Implement a consistent service mesh, mTLS and policy enforcement.

Keep a single deployment model for both on-prem and cloud.

A hybrid pattern is increasingly common.

Use serverless at the edge (API gateways, lightweight adapters, cron jobs).

Run core compute (matching engines, reporting, ML pipelines) on containers.

Serverless vs PaaS (Heroku/App Engine) and Hybrid Architectures

PaaS platforms like Heroku or App Engine:

Focus on long-running apps with autoscaling.

Expose more OS/sysadmin control than pure FaaS.

Bill per dyno/instance, not per request.

Modern teams often use:

FaaS for bursty jobs & event handlers,

PaaS or containers for steady-state services,

BaaS for auth/storage (Cognito, Firebase Auth, Supabase, etc.)

Region-specific choices matter: running in London (UK South) for FCA-regulated fintechs, Frankfurt (eu-central-1) for German banks, or Stockholm for Nordic public-sector workloads can control latency and data residency while still leveraging serverless.

AEO Micro-answer: Deciding between serverless, containers and VMs

Teams in the US, UK and EU usually choose serverless for new, event-driven, stateless services; containers for high-throughput, latency-sensitive or highly customised workloads; and VMs for legacy apps or niche infrastructure needs, often combining all three in a hybrid architecture instead of picking just one.

Design, Security, and Operations

Designing Event-Driven Serverless Workflows and APIs

Good serverless systems start with domain-driven design.

Model bounded contexts (Billing, Auth, Notifications).

Group functions around capabilities, not random endpoints.

Use patterns like pub/sub, fan-out/fan-in, idempotency, retries, and dead-letter queues.

Example: a SaaS signup flow used by users in Austin, London, and Berlin.

An HTTP signup API writes to a Users table.

An event triggers functions to send a welcome email, create a CRM record, and start a trial subscription.

Failures go to a dead-letter queue (DLQ) for ops review.
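The signup flow above can be sketched in-process to show how fan-out, retries, and a dead-letter queue fit together. In a real system the queue or event service performs the delivery and retrying; this simulation only illustrates the control flow. All handler names and the `fan_out` helper are hypothetical.

```python
def fan_out(event, handlers, max_attempts=3):
    """Deliver one event to every handler, retrying failures and
    sending exhausted deliveries to a dead-letter list."""
    dead_letters = []
    for name, handler in handlers.items():
        for attempt in range(1, max_attempts + 1):
            try:
                handler(event)
                break  # success: stop retrying this handler
            except Exception as exc:
                if attempt == max_attempts:
                    dead_letters.append(
                        {"handler": name, "event": event, "error": str(exc)}
                    )
    return dead_letters


# Hypothetical handlers for the signup event.
sent_emails = []
crm_attempts = []


def send_welcome_email(event):
    sent_emails.append(event["user_id"])


def create_crm_record(event):
    crm_attempts.append(event["user_id"])
    if len(crm_attempts) < 2:
        raise RuntimeError("transient CRM error")  # succeeds on the retry


def start_trial(event):
    raise RuntimeError("billing service unavailable")  # always fails here


dlq = fan_out(
    {"user_id": "u1"},
    {"email": send_welcome_email, "crm": create_crm_record, "trial": start_trial},
)
```

Here the CRM handler recovers on its second attempt, while the trial handler exhausts its retries and lands in `dlq`. Retries are also the reason each handler should be idempotent: a handler may run more than once for the same event.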

This style of event-driven architecture in the cloud is where Mak It Solutions often starts when modernising SaaS platforms or building serverless-first backends alongside modern JavaScript frameworks. (makitsol.com)

Security, Compliance, and Data Residency by Region

For serverless in regulated environments:

Use least-privilege IAM for each function (scoped roles and service principals).

Map architecture to frameworks like SOC 2, ISO 27001, HIPAA, PCI DSS, GDPR/DSGVO, UK-GDPR and NIS2.

Choose regions deliberately.

us-east-1 / us-west-2 for US workloads.

UK South (London) for UK customers and NHS-adjacent workloads.

eu-central-1 (Frankfurt), eu-west-1 (Dublin), or Amsterdam/Stockholm for EU-only datasets.

GDPR and UK-GDPR both emphasise data minimisation and clear data flows. Serverless can support that via small, well-scoped functions and explicit event contracts, but you must document those flows carefully for audits and DPIAs.
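One lightweight way to back up deliberate region choices is an automated check that flags any function deployed outside the regions allowed for its data. The sketch below assumes a hypothetical policy mapping and deployment inventory. The data-class labels and function names are invented for illustration, and the region identifiers are the ones mentioned above.

```python
# Hypothetical residency policy: which regions each dataset class may use.
ALLOWED_REGIONS = {
    "us": {"us-east-1", "us-west-2"},
    "eu-only": {"eu-central-1", "eu-west-1"},
}


def residency_violations(deployments, policy=ALLOWED_REGIONS):
    """Return deployments whose region is not allowed for their data class."""
    violations = []
    for d in deployments:
        # Unknown data classes get an empty allowlist, so they are always flagged.
        allowed = policy.get(d["data_class"], set())
        if d["region"] not in allowed:
            violations.append(d)
    return violations


deployments = [
    {"name": "signup-api", "data_class": "eu-only", "region": "eu-central-1"},
    {"name": "export-job", "data_class": "eu-only", "region": "us-east-1"},
]
flagged = residency_violations(deployments)
```

Running a check like this in CI gives you a simple, repeatable piece of evidence for audits and DPIAs, although it does not replace a review of where data actually flows at runtime.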

Monitoring, Observability, and Cost Management for Serverless

Operational maturity for serverless means:

Centralised logs, metrics, and traces (CloudWatch, Azure Monitor, GCP tools, plus platforms like Datadog or New Relic).

Dashboards for latency, cold-start rates, error rates, and concurrency.

Cost guardrails: rate limits, budgets, alerts, and regular reviews of your most expensive functions.
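The dashboard metrics listed above can be derived from per-invocation records. The following sketch assumes each record is a dict with `duration_ms`, `cold_start`, and `error` fields. That record shape is an assumption for illustration, not a specific platform's log format.

```python
def summarize(invocations):
    """Compute cold-start rate, error rate, and p95 latency from invocation records."""
    total = len(invocations)
    if total == 0:
        return {"cold_start_rate": 0.0, "error_rate": 0.0, "p95_ms": 0.0}

    cold = sum(1 for i in invocations if i["cold_start"])
    errors = sum(1 for i in invocations if i["error"])
    durations = sorted(i["duration_ms"] for i in invocations)

    # Nearest-rank percentile using integer arithmetic: the smallest value
    # with at least 95% of samples at or below it.
    rank = (95 * total + 99) // 100
    return {
        "cold_start_rate": cold / total,
        "error_rate": errors / total,
        "p95_ms": durations[rank - 1],
    }
```

Tracking a high percentile rather than the average matters for serverless because cold starts affect only a small share of requests and disappear in a mean.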

German and wider EU enterprises already under pressure from GDPR, DSA and NIS2 often treat observability as a compliance requirement, not just a nice-to-have, because it underpins incident response and audit trails.

AEO Micro-answer: Cold starts, concurrency and UX

Cold starts and concurrency limits can add hundreds of milliseconds to the first request after inactivity and cap how many requests your serverless API can handle in parallel. Architects mitigate this by using provisioned concurrency or warmers, putting latency-sensitive endpoints on containers, tuning timeouts/retries, and setting concurrency limits plus throttling on upstream services.
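To size concurrency limits, a common back-of-the-envelope estimate is Little's law: the number of in-flight executions is roughly the arrival rate multiplied by the average duration. The helper names below are hypothetical, and the estimate assumes steady traffic, so leave headroom for bursts.

```python
import math


def required_concurrency(requests_per_second, avg_duration_ms):
    """Little's law estimate: in-flight executions = arrival rate x average duration."""
    return math.ceil(requests_per_second * avg_duration_ms / 1000.0)


def max_sustainable_rps(concurrency_limit, avg_duration_ms):
    """Highest steady request rate a given concurrency limit can absorb."""
    return concurrency_limit * 1000.0 / avg_duration_ms
```

For example, 500 requests per second at a 200 ms average duration needs about 100 concurrent executions, and a limit of 1,000 concurrent executions at the same duration sustains about 5,000 requests per second. Slower functions (including ones slowed by cold starts) raise the concurrency you need for the same traffic.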

Is Serverless Right for Your Next Project?

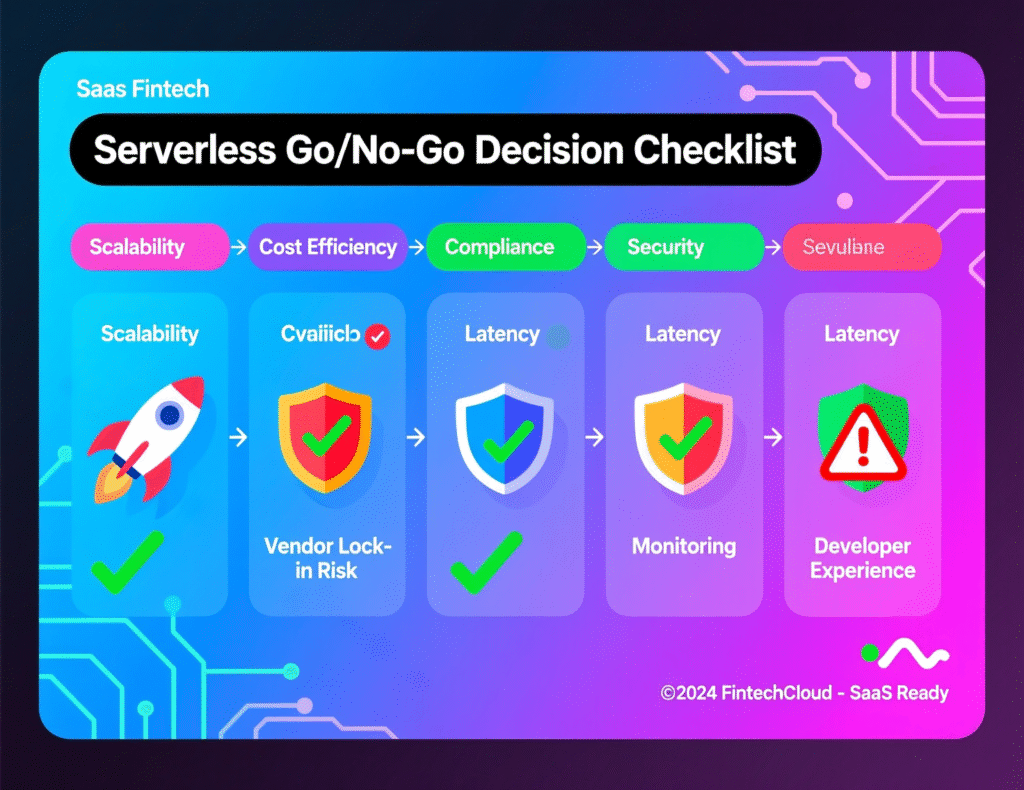

Go / No-Go for Serverless

Use this quick checklist.

Go serverless if…

Traffic is spiky or unpredictable.

Requests are stateless and complete within a few seconds.

You’re comfortable with managed services and event-driven patterns.

Latency tolerance is roughly 100–300 ms for most endpoints.

Compliance needs can be met with standard cloud controls and regional hosting.

Think twice (or go hybrid) if…

You need sub-10 ms latency consistently.

Workloads run for hours at a time or require GPUs/large memory.

You need full control of OS, network, and security tools.

Regulators (e.g., BaFin, NHS, US healthcare regulators) demand very detailed infra controls and on-prem options.

Startups in the US and UK can usually go serverless-first for greenfield SaaS. Large EU enterprises often land on a hybrid model: serverless for APIs and automation, containers or VMs for core systems of record.
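The checklist above can also be written down as a small function, which is handy if you want to run it across an inventory of workloads. This is only a sketch: the field names are hypothetical, and the numeric cut-offs (5 seconds for "a few seconds", one hour for "hours") are assumptions layered on top of the checklist.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    spiky_traffic: bool
    stateless: bool
    typical_duration_s: float       # how long one request or job usually runs
    required_latency_ms: float      # latency the caller needs consistently
    needs_gpu_or_large_memory: bool
    needs_full_infra_control: bool  # OS, network, security tooling, on-prem


def go_no_go(w: Workload):
    """Apply the checklist: any 'think twice' item outweighs the 'go' items."""
    concerns = []
    if w.required_latency_ms < 10:
        concerns.append("needs sub-10 ms latency")
    if w.typical_duration_s >= 3600:  # assumption: "hours" means one hour or more
        concerns.append("runs for hours")
    if w.needs_gpu_or_large_memory:
        concerns.append("needs GPUs or large memory")
    if w.needs_full_infra_control:
        concerns.append("needs full infrastructure control")

    if concerns:
        return "think twice or go hybrid", concerns
    if w.spiky_traffic and w.stateless and w.typical_duration_s <= 5:
        return "go serverless", []
    return "needs a closer look", []
```

A spiky, stateless API that answers in about two seconds and tolerates 200 ms latency returns "go serverless", while a two-hour GPU job with a 5 ms latency requirement returns "think twice or go hybrid" with three listed concerns.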

SaaS, Fintech, Healthcare, Public Sector

SaaS (US & UK)

Start with serverless for public APIs, webhooks, background jobs and feature experiments.

Fintech & Open Banking

Use serverless for customer-facing APIs, reporting, and notifications; keep matching engines and core ledgers on containers/VMs, especially under BaFin/FCA scrutiny.

Healthcare (NHS, US HIPAA)

Serverless can power appointment reminders, portals, and non-PHI features. Anything involving sensitive PHI needs a careful HIPAA/GDPR review and might stay on more controlled platforms.

Public sector

Check residency rules and procurement constraints; serverless may be allowed only in specific accredited regions/providers.

From Monolith/Traditional to Serverless or Hybrid

A pragmatic migration path:

Identify low-risk workloads

Start with background jobs, emailers, report generation, and file processing.

Carve out async workflows

Put them behind queues or events and implement them as functions.

Expose new APIs

Introduce serverless APIs in front of existing systems, gradually moving well-bounded domains over.

Refactor core domains last

Tackle core business domains once you’re confident with tooling, observability, and governance.

This mirrors Mak It Solutions’ broader approach to cloud modernisation and repatriation: small, measured experiments rather than “big bang” rewrites. (makitsol.com)

Conclusion

Serverless is strongest for stateless APIs, event-driven workflows, data/ETL, and IoT streams.

It’s weakest for long-running, ultra-low-latency, or heavily regulated workloads that need infrastructure control.

In 2025, the serverless and FaaS markets are growing fast, but hybrid architectures (serverless + containers + VMs) are becoming the norm, not the exception.

GEO and compliance (US, UK, Germany/EU) heavily influence which serverless architecture use cases make sense for your platform.

How to Pilot Serverless Safely in the US, UK, and EU

Start with a small, well-instrumented pilot:

Pick one clear use case (for example, a webhook consumer or scheduled billing job).

Deploy to a compliant region (London for the UK, Frankfurt for the EU, us-east-1/us-west-2 for the US).

Define clear success metrics: latency, error budget, monthly cost, and operator effort.

From there, you can scale up to more workloads or confidently decide that containers/VMs are the better fit for your next project.

If you’re still unsure whether your next SaaS, fintech or analytics workload belongs on serverless, containers or VMs, you don’t have to guess. Mak It Solutions can help you map workloads, regulations and cost models, and design a pragmatic hybrid architecture that fits your US, UK or EU footprint.

Book a short architecture assessment, share your current stack and constraints, and get a clear serverless go / no-go recommendation plus a concrete migration roadmap you can share with your stakeholders.

FAQs

Q : Is serverless architecture always cheaper than running containers or VMs in the cloud?

A : No. Serverless can be dramatically cheaper for low to medium traffic and spiky workloads because you pay only when code runs. But for consistently high throughput (for example, a busy API that’s saturated all day), right-sized containers or reserved VMs often win on cost. The only way to be sure is to model your request volume, average execution time, and memory usage and compare it to container or VM pricing in your target regions.

Q : Can I run long-running data processing or ML training jobs on serverless without blowing up my bill?

A : Technically you can chain functions or use workflows/orchestrators, but it’s rarely cost-efficient or operationally simple. FaaS platforms have execution time limits, and long-running CPU/GPU workloads accumulate GB-seconds very quickly. For big ETL jobs, model training or video encoding, containers and VMs (possibly spot/reserved) are usually a safer and cheaper choice, with serverless reserved for triggering or coordinating those jobs.

Q : How do cold starts in serverless affect user experience for APIs used in Europe and the US?

A : Cold starts introduce extra latency for the first request after a period of inactivity, which can be noticeable on user-facing APIs, especially for customers far from your chosen region. In practice, most APIs can tolerate occasional 200–700 ms spikes, but trading platforms or real-time apps often cannot. You can mitigate this with provisioned concurrency, regional replicas (for example, US + EU deployments), connection pooling and caching layers in front of your functions.

Q : What are common mistakes teams make when migrating a monolith to serverless for the first time?

A : Common pitfalls include “lifting and shifting” the monolith into one giant function, over-chopping into hundreds of tiny functions with chatty network calls, and ignoring observability until troubleshooting becomes painful. Teams also underestimate IAM complexity and compliance documentation. A safer approach is to start by extracting async jobs and well-bounded domains, put proper logging and tracing in place, and only then tackle core business flows.

Q : How do I keep serverless applications compliant with GDPR/DSGVO, HIPAA, or PCI DSS across different regions?

A : Compliance is mostly about how you design and document, not the fact that you’re using serverless. Use data-minimising payloads, encrypt data at rest and in transit, restrict regions to where your users actually are, and apply strict IAM and network boundaries. Map your architecture to frameworks like GDPR/DSGVO, UK-GDPR, HIPAA and PCI DSS, keep a clear data inventory, and ensure logging and retention policies match regulatory requirements in each region.