Real-Time Analytics vs Batch Processing in US & EU

Real-time analytics processes data continuously as events arrive so teams can act within seconds or less, while batch processing groups large volumes of data and runs on a fixed schedule for deeper historical analysis. For most US, UK, German and wider EU organizations, the smartest move is not to choose one but to design a hybrid strategy where real-time analytics powers a few high-impact journeys and batch processing handles governance-heavy and cost-sensitive workloads.

Introduction

Your dashboards, alerts, fraud checks and “yesterday’s numbers” all run on one of two engines: real-time analytics or batch processing. Every time a data leader in New York, London or Berlin asks for “live” dashboards, someone on the team quietly wonders: if we invest in real-time, do we still need batch at all?

Real-time streaming data processing unlocks instant detection and personalization, but batch remains the workhorse for heavy historical workloads, finance, compliance and data science. Analysts expect the streaming analytics market alone to grow from around $35B in 2025 to well over $170B by 2032, while traditional batch-centric analytics and warehouses continue to expand in parallel.

In practice, modern teams in the US, UK and EU end up with a hybrid data pipeline architecture: a streaming layer for operational decisions within seconds, and a batch layer for trusted reporting, model training and regulatory data. The rest of this guide walks through how to compare the two, design architectures on AWS, Azure and GCP, and decide what should be real-time, batch, or both.

What Is Real-Time Analytics vs Batch Processing?

Real-time analytics processes events as they arrive so your system can update metrics and trigger actions within seconds or less. Batch processing accumulates data for a period (minutes, hours or days), then processes it in one or more large jobs to produce historical insight and stable outputs.

For a modern data team, the difference is mainly about when data is processed and what latency your stakeholders can live with.

Core Definitions in Plain Language (Data Team-Friendly)

In a real-time analytics setup, events flow continuously from sources (apps, IoT sensors, logs) into an event bus such as Apache Kafka, AWS Kinesis or Google Pub/Sub. Stream processors like Apache Flink, Kafka Streams or Dataflow transform and aggregate the data, then push it into low-latency stores, sometimes directly into tools like Databricks, Snowflake or BigQuery for near real-time analytics.

In batch analytics, data lands first in files or tables (S3, ADLS, GCS, OLTP databases) and is processed on a schedule using engines such as Apache Spark, dbt, SQL in BigQuery/Snowflake, or orchestrated jobs in tools like Airflow or Azure Data Factory.
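To make the contrast concrete, here is a minimal pure-Python sketch, not tied to any of the engines named above. The `(merchant_id, amount)` event shape and both function names are hypothetical; the point is only that the streaming path updates state per event while the batch path aggregates once after the data has landed.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Tuple

Event = Tuple[str, float]  # hypothetical event shape: (merchant_id, amount)


def batch_totals(events: Iterable[Event]) -> Dict[str, float]:
    """Batch style: wait until all events have landed, then aggregate once."""
    totals: Dict[str, float] = defaultdict(float)
    for merchant_id, amount in events:
        totals[merchant_id] += amount
    return dict(totals)


def streaming_totals(events: Iterable[Event]) -> Iterator[Dict[str, float]]:
    """Streaming style: update state per event and emit a fresh snapshot each time."""
    totals: Dict[str, float] = defaultdict(float)
    for merchant_id, amount in events:
        totals[merchant_id] += amount
        yield dict(totals)


events = [("m1", 10.0), ("m2", 5.0), ("m1", 2.5)]
snapshots = list(streaming_totals(events))  # one snapshot per event
final_batch = batch_totals(events)          # one result at the end

print(snapshots[0])   # {'m1': 10.0}
print(final_batch)    # {'m1': 12.5, 'm2': 5.0}
```

Both paths converge on the same totals; what differs is when a consumer can first see a usable number.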

You’ll also hear:

“Real-time” – typically sub-second to a few seconds latency.

“Near real-time” – seconds to a couple of minutes; fine for most dashboards.

“Micro-batch” – very small batches every minute or so; often used in warehouse-native streaming.

Practical Differences That Matter (Latency, Cost, Complexity)

For stakeholders, the key differences are:

Latency: streaming/real-time targets sub-second to a few seconds; batch typically runs every 5–60 minutes, hourly or overnight depending on SLAs.

Resource pattern: streaming clusters run continuously and must handle peak load, while batch jobs can scale up briefly, run heavy work, then scale back down.

Complexity & skills: building reliable event-driven data processing requires new skills (Flink/Spark streaming, Kafka ops, exactly-once semantics, event-time windows). Batch pipelines, especially SQL/dbt-based, are generally easier to onboard analysts and BI engineers onto.
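One of the skills mentioned above, event-time windowing, can be sketched in a few lines of plain Python. This is an illustration under simplifying assumptions: the `(event_time, payload)` tuples are hypothetical, and real engines such as Flink also handle watermarks and late data, which this sketch leaves out.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def tumbling_window_counts(
    events: Iterable[Tuple[float, str]], window_seconds: int
) -> Dict[int, int]:
    """Count events per fixed, non-overlapping window, keyed by window start.

    Windows are assigned from the event's own timestamp (event time),
    not from the moment the event happens to be processed.
    """
    counts: Dict[int, int] = defaultdict(int)
    for event_time, _payload in events:
        window_start = int(event_time // window_seconds) * window_seconds
        counts[window_start] += 1
    return dict(counts)


# Events arrive out of order: the third one belongs to an earlier window.
events = [(100.0, "a"), (161.0, "b"), (119.9, "c"), (120.0, "d")]
window_counts = tumbling_window_counts(events, window_seconds=60)
print(window_counts)  # {60: 2, 120: 2}
```

Because assignment depends on the event timestamp, the out-of-order event still lands in the correct window. Deciding how long to wait for such stragglers is where much of the operational complexity comes from.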

How US, UK, and EU Teams Use Both Today

Across regions, usage patterns look similar with local twists.

US (New York, San Francisco, Seattle)

Real-time analytics powers fraud detection, recommendations and in-app personalization; finance teams still rely heavily on nightly and month-end batch for revenue reporting and SOX controls.

UK (London, Manchester)

Open Banking APIs and instant payment rails require streaming-level visibility, while PRA, FCA and board reporting remains batch-heavy.

Germany/EU (Berlin, Frankfurt, Dublin, Amsterdam)

Industrie 4.0 factories push sensor streams for predictive maintenance, but PSD2/SEPA and BaFin filings typically run in scheduled batch jobs against EU-only data warehouses because of GDPR/DSGVO and data residency rules.

Pros and Cons: Real-Time Analytics vs Batch Processing

Real-time analytics gives you fresh insight within seconds, but it costs more to run and is harder to operate, especially at global scale. Batch processing is cheaper, simpler and still unbeatable for large historical workloads that don’t need instant answers.

Benefits of Real-Time Analytics for Modern Enterprises

With streaming in place, you can:

Detect fraud on card transactions as they happen, blocking suspicious payments before settlement.

Power dynamic pricing and recommendations in an e-commerce app in Austin or London while the user is still browsing.

Monitor IoT devices in German factories or EU energy grids to trigger maintenance before a failure.

This isn’t just hype: some studies suggest over 70% of enterprises plan to invest in real-time analytics or data enrichment tools by 2025, and roughly 60% already use AI-powered enrichment to improve data quality.

Note

Global numbers like these are approximate and should be verified against current research before you use them in decision-making.

Where Batch Processing Still Wins (Cost, Stability, Throughput)

Batch is far from dead. It’s usually:

Cheaper: you can run large Spark jobs or warehouse queries in off-peak windows and scale clusters down afterward instead of keeping a streaming pipeline hot 24/7.

More predictable: ideal for end-of-day reconciliation, financial closes, and ML feature backfills.

Friendly to regulators: official filings, HIPAA audit trails, and NHS or BaFin reports are often produced from controlled batch jobs that generate reproducible outputs.

Examples: quarterly US healthcare quality reports produced under HIPAA safeguards, NHS England statutory reporting, and PSD2-related summary filings to BaFin by German banks all lean on robust batch pipelines.

When a Hybrid Approach Is Non-Negotiable

Most mature teams run real-time + batch together:

Streaming for operational decisions (fraud, personalization, alerts).

Batch for governance, forecasting and model training on full history.

Signs you’ve over-rotated toward real-time:

Alert fatigue from noisy streaming metrics.

Difficulty reconciling live numbers with finance or regulatory reports.

Signs you’ve gone too batch-heavy:

Fraud or outages discovered hours too late.

“Stale dashboard” complaints from product or ops.

Data Architectures & Pipelines

Streaming-First Stack vs Classic Batch

A streaming-first stack usually looks like:

Event bus

Kafka / Confluent, AWS Kinesis, Google Pub/Sub.

Stream processors

Flink, Kafka Streams, Kinesis Data Analytics (now Amazon Managed Service for Apache Flink), Dataflow.

Low-latency stores

Kafka topics, NoSQL, materialized views, or lakehouse/warehouse tables updated continuously.

A classic batch stack:

Data lands in cloud storage or OLTP systems (S3, ADLS, GCS, operational DBs).

Orchestrators like Airflow, Azure Data Factory or AWS Glue run Spark, dbt or SQL jobs on a schedule.

Results land in Snowflake, BigQuery, Redshift, Synapse or Databricks for BI.

You’ll also need to place data in appropriate regions, for example AWS eu-central-1 (Frankfurt) or eu-west-1 (Dublin), or Azure UK South, to meet GDPR/UK-GDPR and bank data residency policies.
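A region policy like this can be checked automatically before a pipeline is deployed. The sketch below is illustrative only: the `ALLOWED_REGIONS` mapping, the dataset records and both function names are hypothetical, and the region identifiers simply follow AWS and Azure naming.

```python
from typing import Dict, List, Set

# Hypothetical residency policy: jurisdiction -> regions where raw data may live.
# Identifiers follow AWS naming (eu-central-1, eu-west-1, us-east-1) and
# Azure naming (uksouth); the mapping itself is an illustrative assumption.
ALLOWED_REGIONS: Dict[str, Set[str]] = {
    "eu": {"eu-central-1", "eu-west-1"},
    "uk": {"uksouth"},
    "us": {"us-east-1"},
}


def is_region_allowed(jurisdiction: str, region: str) -> bool:
    """Return True if the region is on the allow-list for the jurisdiction."""
    allowed = ALLOWED_REGIONS.get(jurisdiction.lower(), set())
    return region.lower() in allowed


def find_violations(datasets: List[Dict[str, str]]) -> List[str]:
    """Return the names of datasets stored outside their allowed regions."""
    return [
        d["name"]
        for d in datasets
        if not is_region_allowed(d["jurisdiction"], d["region"])
    ]


datasets = [
    {"name": "eu_payments_raw", "jurisdiction": "eu", "region": "eu-central-1"},
    {"name": "uk_consents_raw", "jurisdiction": "uk", "region": "us-east-1"},
]
violations = find_violations(datasets)
print(violations)  # ['uk_consents_raw']
```

A check of this kind can run in CI for both streaming topics and batch tables, so residency rules are enforced the same way on either path.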

Lambda vs Kappa vs Modern Hybrid Architectures

Traditionally:

Lambda architecture keeps both batch and streaming code paths: a batch layer for correctness and a speed layer for low latency.

Kappa architecture collapses everything into streaming against an immutable log, replaying as needed.

Teams often complain about Lambda because of duplicated logic and higher maintenance. In response, cloud platforms now push “streaming-first, warehouse-native” patterns: think Databricks, Snowflake or BigQuery using micro-batches, change data capture and materialized views to serve near real-time analytics from a central lakehouse.

US startups might jump straight to Kappa-like architectures on Kafka + lakehouse.

UK SMEs and German Mittelstand companies often start batch-first, add a few streaming workloads, then standardize hybrid patterns over time.
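To illustrate the Lambda idea described above, the sketch below merges a batch view with a speed-layer view at query time. It is a simplified model with hypothetical names and data; it assumes the speed layer holds only events that arrived after the last batch cut-off, so the two views never double count.

```python
from typing import Dict


def serve_totals(batch_view: Dict[str, int], speed_view: Dict[str, int]) -> Dict[str, int]:
    """Serving layer of a Lambda-style design: batch result plus recent deltas.

    Assumes speed_view contains only events newer than the batch cut-off.
    """
    merged = dict(batch_view)
    for key, delta in speed_view.items():
        merged[key] = merged.get(key, 0) + delta
    return merged


batch_view = {"page_a": 1000, "page_b": 400}  # recomputed by the last batch run
speed_view = {"page_a": 12, "page_c": 3}      # counted since the batch cut-off

merged_view = serve_totals(batch_view, speed_view)
print(merged_view)  # {'page_a': 1012, 'page_b': 400, 'page_c': 3}
```

The duplicated-logic complaint shows up here in miniature: the counting rule has to be implemented once in the batch job and again in the stream processor, and the two must stay in agreement.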

Designing Hybrid Data Pipelines on AWS, Azure, and GCP

On AWS, a common pattern is:

Streaming

Kinesis or MSK (managed Kafka) + Lambda/Flink for real-time; store outputs in DynamoDB, OpenSearch, or streaming tables in Redshift or a lakehouse.

Batch

Glue/EMR + Redshift or S3/Trino, running in us-east-1 for US workloads and eu-central-1/eu-west-1 for EU banks or health providers.

On Azure (popular for UK public sector and NHS analytics):

Streaming

Event Hubs + Azure Stream Analytics / Databricks streaming.

Batch

Data Factory and Synapse or Azure Databricks for nightly pipelines in UK South or Germany West Central regions.

On GCP (strong in Berlin/Dublin-based SaaS):

Streaming

Pub/Sub + Dataflow for real-time analytics.

Batch

BigQuery scheduled queries and Dataform/dbt jobs on EU-only datasets to stay within GDPR and Schrems II constraints.

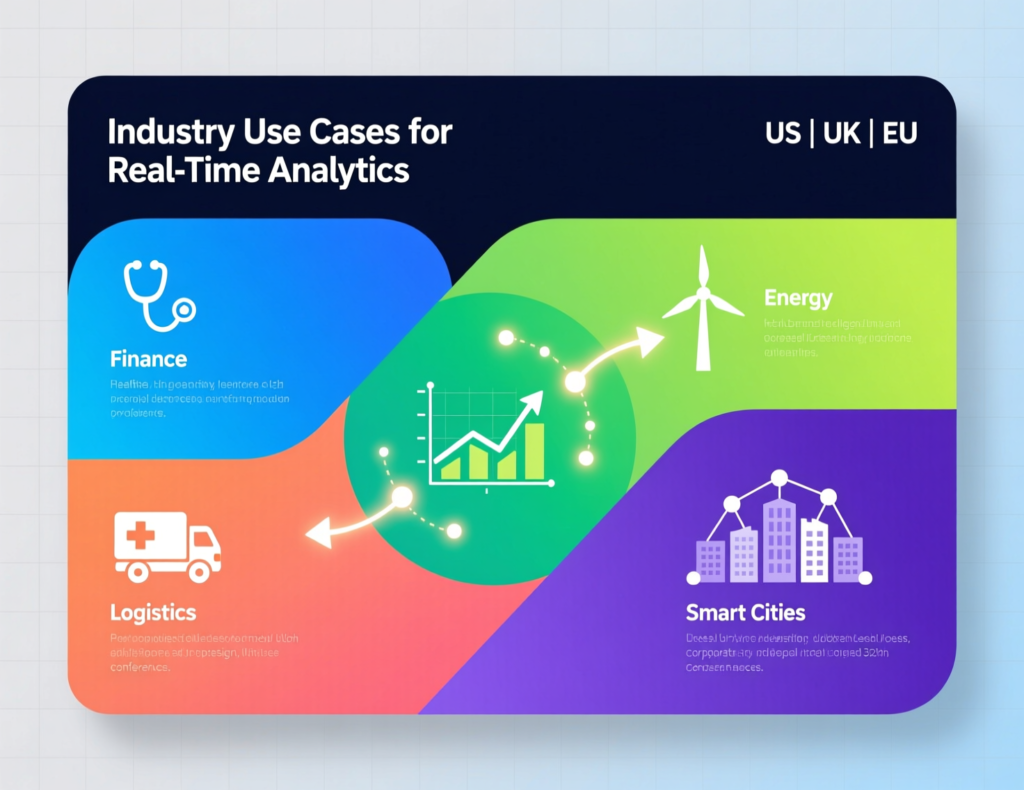

Industry Use Cases & ROI Across US, UK, and EU

Most organizations unlock the best ROI by using real-time analytics in specific, high-impact journeys and leaving the rest on cheaper batch.

Fintech & Banking

US: streaming pipelines score card transactions in real-time while batch jobs generate settlement files and PCI DSS-compliant reports.

UK: Open Banking providers push payment and consent events via streaming APIs, but PRA and BoE regulatory packs are still assembled via overnight batch.

Germany/EU: banks monitor PSD2/SEPA payments in near real-time to detect suspicious behaviour, then feed BaFin reporting pipelines running in monthly or quarterly batches.

E-Commerce, SaaS & Consumer Apps

San Francisco, London and Berlin-based SaaS and consumer app teams typically:

Stream clickstream data to drive recommendations, feature flags, and cart abandonment nudges in real-time.

Use batch processing for cohort analysis, LTV modelling, revenue recognition and leadership dashboards.

Global consumer brands like Apple combine streaming user events from devices and apps with batch-processed App Store, subscription and hardware sales data to get a complete view of performance.

IoT, Healthcare & Public Sector: Monitoring vs Compliance

IoT/Industrie 4.0

German factories and EU energy operators use near real-time analytics on sensor streams for condition monitoring; batch is used to aggregate months of telemetry for capacity planning.

Healthcare (US + UK NHS)

Patient monitoring dashboards need near real-time alerts, but HIPAA and NHS audit requirements are met by batch jobs that consolidate logs, claims and outcomes data into secured warehouses.

Governance, Compliance & Data Strategy

GDPR/DSGVO, UK-GDPR and Data Residency in Streaming vs Batch

GDPR and UK-GDPR apply regardless of streaming or batch, but streaming raises sharper questions around profiling, cross-border transfers and purpose limitation.

Typical patterns in Europe:

Keep raw events inside EU or UK regions (Frankfurt, Dublin, Amsterdam, London), then send only aggregated or anonymized metrics to global systems.

Apply strict purpose-limited schemas on event streams so you don’t accidentally mix marketing, product analytics and risk scoring without appropriate consent.

Regulated Industries

For heavily regulated sectors you must map requirements like log retention, access controls and encryption to both streaming and batch:

HIPAA (US): mandates safeguards for protected health information; real-time alerts must still route through secured, audited systems, not shadow dashboards.

PCI DSS: requires strong controls on cardholder data, whether you process transactions via real-time scoring or end-of-day batch files.

BaFin / PSD2 / NHS: regulators usually expect near real-time fraud monitoring but accept batch-based official filings and KPI packs, as long as reconciliation is robust.
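Reconciliation between the streaming and batch paths can start as a simple per-key comparison with a tolerance. The sketch below is one possible shape, with hypothetical daily totals and a hypothetical `tolerance` parameter; real reconciliation would also need agreed cut-off times and currency handling.

```python
from typing import Dict, List


def reconcile(
    batch_daily: Dict[str, float],
    streaming_daily: Dict[str, float],
    tolerance: float = 0.01,
) -> List[str]:
    """Return a description of every key whose totals differ by more than tolerance.

    Keys missing on one side are treated as 0.0 so they are reported too.
    """
    mismatches: List[str] = []
    for key in sorted(set(batch_daily) | set(streaming_daily)):
        batch_value = batch_daily.get(key, 0.0)
        streaming_value = streaming_daily.get(key, 0.0)
        if abs(batch_value - streaming_value) > tolerance:
            mismatches.append(
                f"{key}: batch={batch_value:.2f} streaming={streaming_value:.2f}"
            )
    return mismatches


batch_daily = {"2025-01-01": 100.00, "2025-01-02": 250.50}
streaming_daily = {"2025-01-01": 100.00, "2025-01-02": 249.00, "2025-01-03": 10.00}

mismatches = reconcile(batch_daily, streaming_daily)
for line in mismatches:
    print(line)
# 2025-01-02: batch=250.50 streaming=249.00
# 2025-01-03: batch=0.00 streaming=10.00
```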

Governance-by-Design: Catalogs, PII Masking & Observability

To avoid streaming becoming a governance black hole:

Use catalogs and lineage tools (Collibra, DataHub, Secoda, etc.) for both event streams and batch tables so you can answer “who touched what, when.”

Apply tokenisation and PII masking in producers, not just in the warehouse.

Set SLAs on data quality, latency and incident response for streaming pipelines just as you would for nightly ETL.
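Masking in the producer can be as simple as replacing PII fields with keyed tokens before an event is published. The sketch below uses HMAC-SHA256 from the Python standard library; the event fields, the `pii_fields` list and the hard-coded key are hypothetical, and in practice the key would come from a secrets manager. Keyed hashing of this kind is pseudonymisation rather than anonymisation, so the tokens should still be treated as personal data.

```python
import hashlib
import hmac
from typing import Any, Dict, Iterable


def tokenise(value: str, secret_key: bytes) -> str:
    """Deterministic keyed token: the same input and key always give the same token."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_event(
    event: Dict[str, Any], pii_fields: Iterable[str], secret_key: bytes
) -> Dict[str, Any]:
    """Return a copy of the event with the listed PII fields replaced by tokens."""
    masked = dict(event)
    for field in pii_fields:
        if masked.get(field) is not None:
            masked[field] = tokenise(str(masked[field]), secret_key)
    return masked


# Hypothetical key and event, for illustration only.
SECRET_KEY = b"replace-with-a-key-from-your-secrets-manager"
raw_event = {"email": "jane@example.com", "amount": 42.0, "country": "DE"}

masked_event = mask_event(raw_event, pii_fields=["email"], secret_key=SECRET_KEY)
# masked_event is what the producer would publish; raw_event never leaves the service.
```

Because the token is deterministic, downstream joins and counts per user still work on both the stream and the batch tables without exposing the raw value.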

How to Choose: Decision Framework for Data Leaders

Start with business impact and compliance, not hype. Use real-time where latency truly changes outcomes; keep batch for everything else; and evolve gradually toward a hybrid model.

Clarify Use Cases, SLAs and Compliance Boundaries

Map user journeys: fraud, checkout, ICU monitoring, trading, etc.

For each, decide what “good enough” latency means: milliseconds, seconds, hourly, or “tomorrow morning.”

Align early with legal and compliance teams on GDPR/DSGVO, UK-GDPR, HIPAA, PCI DSS, BaFin, NHS expectations for each workflow.

Evaluate Cost, Complexity, and Team Readiness

Streaming isn’t just infra cost; it’s 24/7 operations, on-call, and new skills. Some estimates suggest enterprise-grade real-time processing stacks can cost an order of magnitude more per month than equivalent batch workloads, especially once you include people and tooling.

For a UK SME or German Mittelstand manufacturer, a near real-time or micro-batch solution (e.g., 5–15 minute warehouse refresh) often balances responsiveness with cost.

Important

Always validate current pricing and total cost of ownership for your specific cloud and tooling before committing.
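The resource-pattern difference can be shown with simple arithmetic. Every number in the sketch below is a made-up placeholder, not real cloud pricing: it assumes a streaming cluster billed around the clock and a larger batch cluster that runs two hours a night, and it covers infrastructure only.

```python
def monthly_cost(hourly_rate: float, hours_per_day: float, days: int = 30) -> float:
    """Infrastructure-only cost for a cluster billed per running hour."""
    return hourly_rate * hours_per_day * days


# Hypothetical rates for illustration; substitute your own contract pricing.
streaming_monthly = monthly_cost(hourly_rate=2.0, hours_per_day=24)  # always on
batch_monthly = monthly_cost(hourly_rate=8.0, hours_per_day=2)       # nightly job

print(streaming_monthly)                  # 1440.0
print(batch_monthly)                      # 480.0
print(streaming_monthly / batch_monthly)  # 3.0
```

Even with a batch cluster four times the size, the always-on pattern costs more in this toy example, and the gap widens once on-call, tooling and specialised staff are added to the streaming side.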

Select Real-Time, Batch, or Hybrid for Each Project

Use a simple rule-of-thumb:

If SLA < 5–10 seconds: streaming or event-driven processing (fraud checks, IoT alarms).

If SLA is minutes to hours: micro-batch or near real-time warehouse.

If SLA is “by tomorrow” or less frequent: classic batch.

Apply it to fintech, e-commerce, IoT and healthcare teams in New York, London or Berlin and you’ll quickly see which workloads truly justify Kafka/Flink versus a well-tuned dbt + warehouse stack.
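The rule-of-thumb above can be encoded as a small helper for project intake. The exact cut-offs are assumptions: the sketch uses 10 seconds (the upper end of the range given) for streaming, treats anything from there up to 24 hours as micro-batch, and maps "by tomorrow" to 24 hours or more. The function name and return labels are hypothetical.

```python
STREAMING_MAX_SECONDS = 10         # assumed cut-off, upper end of the 5-10 second range
BATCH_MIN_SECONDS = 24 * 60 * 60   # assumed reading of "by tomorrow" as 24 hours or more


def choose_processing_mode(sla_seconds: float) -> str:
    """Map a latency SLA in seconds to a processing style, per the rule-of-thumb."""
    if sla_seconds < 0:
        raise ValueError("sla_seconds must be non-negative")
    if sla_seconds <= STREAMING_MAX_SECONDS:
        return "streaming"
    if sla_seconds < BATCH_MIN_SECONDS:
        return "micro-batch"
    return "batch"


print(choose_processing_mode(2))          # streaming   (e.g. fraud check)
print(choose_processing_mode(15 * 60))    # micro-batch (e.g. ops dashboard)
print(choose_processing_mode(24 * 3600))  # batch       (e.g. finance report)
```

Treat the output as a starting point for discussion with stakeholders rather than a verdict; compliance boundaries and team readiness can override the latency-based answer.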

This is also where you decide build vs buy: native Kafka/Flink, versus managed streaming (Confluent, Kinesis, Pub/Sub) or real-time analytics platforms and iPaaS solutions.

Roadmap: Evolving from Batch-Only to Hybrid Real-Time + Batch

Stabilise and Modernise Your Batch Stack

Clean up legacy ETL, migrate toward a lakehouse or central warehouse.

Introduce modern orchestration (Airflow, Data Factory, Glue) and CI/CD so batch pipelines are predictable and well-tested.

Add Real-Time for a Few High-ROI Journeys

Start with 1–3 use cases where latency clearly changes outcomes: fraud, real-time personalization, operations alerting.

Introduce Kafka/MSK, Kinesis, Event Hubs or Pub/Sub and connect outputs into your existing warehouse to avoid creating a second data silo.

Standardise Hybrid Patterns and Organisation-Wide Playbooks

Document reference architectures by region (US, UK, EU) showing which workloads live where.

Create a checklist for “streaming vs batch” decisions and bake it into project intake.

Over time, stand up a platform or data infrastructure team to own Kafka/Flink, SLAs, observability and training.

Key Takeaways

You rarely replace batch with real-time; you layer streaming on top of a solid batch foundation.

Use real-time analytics only where latency truly changes business outcomes (fraud, personalization, critical monitoring).

Keep batch for finance, compliance, heavy modelling and everything that can wait until later.

Governance (GDPR/DSGVO, UK-GDPR, HIPAA, PCI DSS, BaFin, NHS) and data residency are core design inputs, not afterthoughts.

A phased roadmap (batch modernisation, then targeted streaming, then standardised hybrid patterns) reduces risk and cost for teams in the US, UK and EU.

If you’re wrestling with where to invest next (Kafka clusters or better batch pipelines), you don’t have to guess. Mak It Solutions can help your data and engineering leaders in New York, London, Berlin and beyond map use cases, SLAs and regulatory constraints into a clear hybrid data roadmap.

Book a short discovery session with our team and we’ll walk through your current analytics stack, highlight quick wins, and outline an architecture that balances real-time impact with batch reliability and compliance.

FAQs

Q : Is real-time analytics always better than batch processing for dashboards?

A : No. Real-time analytics is only better when your users gain real value from seeing changes within seconds, for example in operations control rooms or on fraud teams. For many leadership and finance dashboards, a 5–60 minute refresh or overnight batch is more than enough and much cheaper to run. The right choice depends on how quickly a decision must be made and how costly it is to maintain streaming pipelines.

Q : Can I migrate from batch ETL to streaming pipelines without breaking my existing reports?

A : Yes, but it requires careful planning. Most teams first introduce streaming for a narrow set of use cases while keeping existing batch jobs as the system of record. Over time, you can phase in “change data capture + streaming into the warehouse” patterns and gradually switch downstream reports to read from streaming-enriched tables, validating numbers side-by-side before retiring old batch ETL.

Q : How expensive is real-time analytics infrastructure compared to classic batch jobs for a UK or EU business?

A : Real-time stacks tend to be more expensive because they run continuously, must handle peak load and need more specialised skills. Some industry analyses put managed real-time processing costs in the low thousands to tens of thousands of dollars per month for mid-sized enterprises, versus hundreds to low thousands for comparable batch-only setups. Actual totals depend on your cloud contracts, data volumes and team size, so you should model TCO for your specific workloads.

Q : What are examples where batch processing is still better than real-time analytics in healthcare and public sector?

A : Healthcare and public-sector organizations often lean on batch for regulatory reporting, claims processing, and long-horizon outcomes analysis. Examples include US HIPAA audit logs rolled up into monthly or quarterly summaries, NHS trust-level KPI packs generated overnight, and EU-wide public health or social care statistics compiled from many systems. These workloads value completeness, reproducibility and cost efficiency over second-by-second freshness.

Q : How do GDPR/DSGVO and UK-GDPR affect event streaming and real-time user profiling in Europe?

A : GDPR/DSGVO and UK-GDPR don’t forbid streaming, but they do restrict how you profile individuals and move their data across borders. You must have a clear lawful basis, explicit purposes, appropriate consent where required, and strong safeguards for cross-border streaming to non-EU/UK regions. Many EU organizations keep raw events in EU or UK regions, strip or tokenise PII early in the stream, and feed only aggregated metrics into global systems.