Data Lakehouse Architecture for US & EU Enterprises

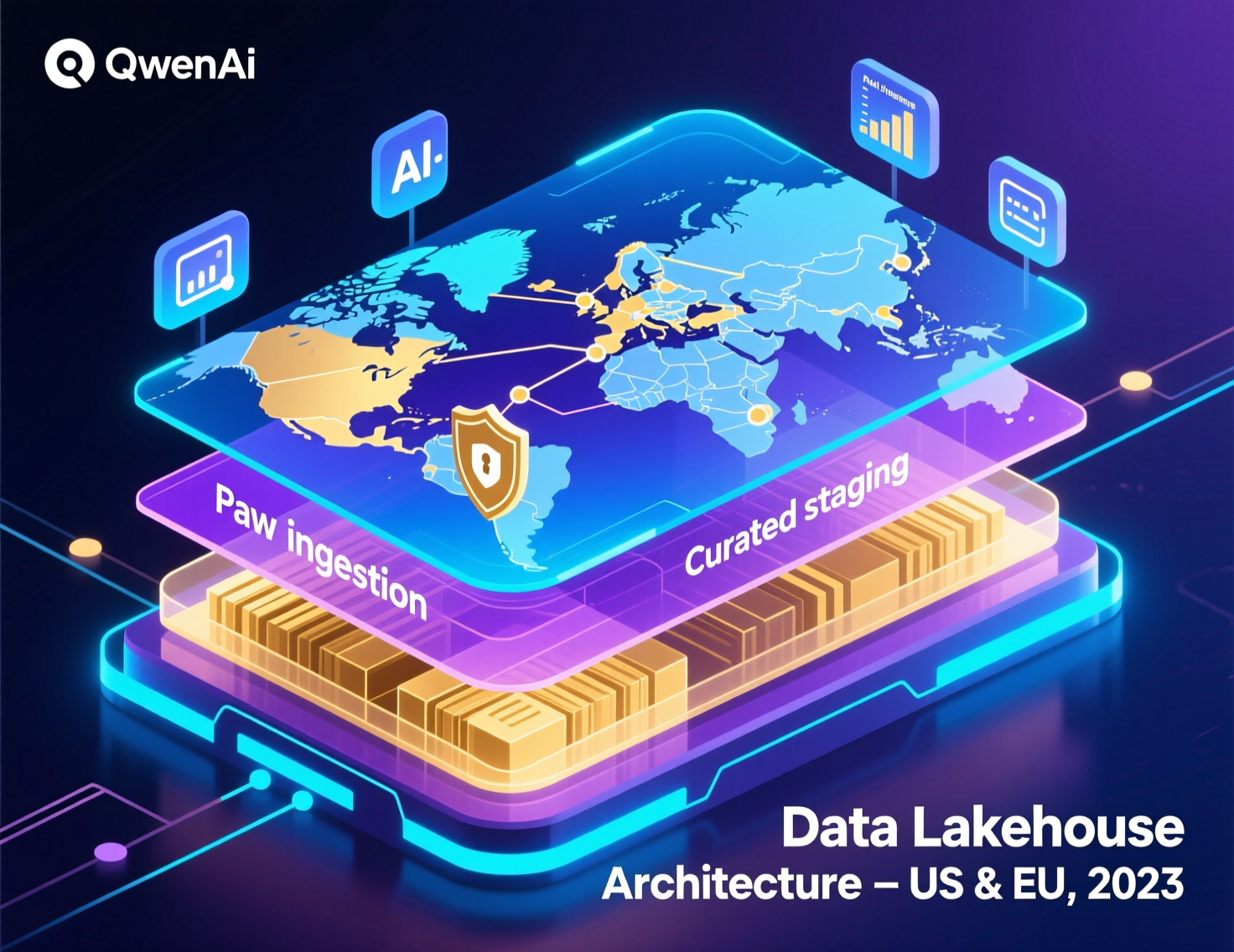

Data lakehouse architecture combines the cheap, scalable storage of data lakes with the governance, performance and SQL analytics of data warehouses on a single platform. For US, UK, German and wider EU enterprises, it’s becoming the preferred blueprint to power AI, BI and real-time analytics while still meeting strict data residency and compliance requirements.

From Data Lakes to Data Lakehouse Architecture: How Modern Data Platforms Are Evolving

Over the last decade, cloud data warehouses gave us curated BI at scale, and cheap object-storage data lakes became the dumping ground for raw data. Now, data lakehouse architecture is emerging as the converged pattern that lets enterprises in the US, UK, Germany and across the EU run governed analytics and AI on the same platform.

With global data volumes expected to reach roughly 180 zettabytes in 2025, the pressure to move from fragmented “data swamps” to unified, well-governed lakehouses is intense.

This guide stays vendor-neutral while explaining what a data lakehouse is, how it differs from lakes and warehouses, why organisations are moving this way, and how to migrate step by step. Along the way we’ll touch on regulatory realities like GDPR/DSGVO, UK-GDPR, HIPAA and PCI DSS, plus cloud choices across AWS, Azure and Google Cloud for US and European data leaders.

What Is a Data Lakehouse in Modern Data Architecture?

A data lakehouse is a modern data architecture that applies warehouse-grade governance, performance and reliability directly on top of data lake storage. It combines low-cost, scalable object storage with open or standardised table formats, strong metadata and flexible compute so BI, AI and real-time use cases can run on one unified analytics platform.

For US, UK, German and EU organisations this means a single governed source of truth that can still respect regional data residency and regulatory constraints.

Core Principles of Data Lakehouse Architecture

At its core, data lakehouse architecture is built on:

Separation of storage and compute so you can scale them independently on AWS S3, Azure Data Lake Storage (ADLS) or Google Cloud Storage (GCS).

Open table formats such as Apache Iceberg, Delta Lake and Apache Hudi that add ACID transactions, schema evolution and time travel on top of files.

ACID transactions to prevent partial writes and inconsistent reads when many jobs touch the same tables.

Governance-first design with central catalogues, policies and fine-grained access controls.

Batch + streaming support, so Kafka, Kinesis or Pub/Sub feeds and nightly ETL/ELT all land in the same tables.

In practice, a New York–based team might build a lakehouse on S3 with Iceberg and an engine like Databricks or Snowflake, while a London or Frankfurt team runs ADLS with Delta tables and Azure Databricks or Synapse: same principles, different stacks.
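To make the ACID principle above more concrete, here is a minimal sketch of optimistic concurrency on an append-only commit log. It is only loosely inspired by how open table formats coordinate writers and is not the actual mechanism of Iceberg, Delta Lake or Hudi; `TableLog`, `CommitConflict` and the file names are hypothetical.

```python
from dataclasses import dataclass, field


class CommitConflict(Exception):
    """Raised when another writer committed first."""


@dataclass
class TableLog:
    """Toy append-only commit log; each entry is the full file list of one snapshot."""
    snapshots: list = field(default_factory=lambda: [[]])

    @property
    def version(self) -> int:
        return len(self.snapshots) - 1

    def commit(self, read_version: int, new_files: list) -> int:
        # Optimistic concurrency: reject the write if the table moved on
        # since this writer read it, so readers never see a partial state.
        if read_version != self.version:
            raise CommitConflict(f"read v{read_version}, table is at v{self.version}")
        self.snapshots.append(self.snapshots[-1] + list(new_files))
        return self.version

    def files_at(self, version: int) -> list:
        # Time travel: every earlier snapshot stays readable.
        return list(self.snapshots[version])


log = TableLog()
v = log.version                           # both writers read version 0
log.commit(v, ["part-0001.parquet"])      # writer A commits first
try:
    log.commit(v, ["part-0002.parquet"])  # writer B holds a stale version
    conflict = False
except CommitConflict:
    conflict = True                       # B must re-read and retry
```

The losing writer retries against the new version instead of overwriting it, which is the property that keeps concurrent jobs from producing inconsistent reads.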

Key Layers in a Data Lakehouse (Ingestion, Storage, Metadata, APIs, Consumption)

Most enterprise lakehouses can be explained in five layers:

Ingestion

Batch loads from SaaS apps and OLTP systems plus streaming from Kafka, Kinesis or Pub/Sub. As in any modern ETL vs ELT decision, you’ll balance where transformations run and how PII is handled. (makitsol.com)

Storage

Cheap, durable object storage (S3, ADLS, GCS) in appropriate regions (e.g., us-east-1 for New York workloads, UK South for London, eu-central-1 for Frankfurt).

Metadata & catalog

Unity Catalog, AWS Glue Data Catalog, Hive Metastore or BigQuery’s metadata layer define tables, schemas, ownership and lineage.

Governance & quality

Policies, data contracts, quality checks and approvals aligned with GDPR and sector rules.

Consumption

SQL, notebooks, ML pipelines, dashboards, reverse ETL and APIs.

In New York, you might prioritise low-latency analytics for trading; in London, NHS data may drive stricter PHI governance; in Frankfurt, BaFin expectations shape audit trails and retention rules. Same layers, different emphasis.

Lakehouse vs Traditional Data Warehouse

Traditional data warehouses (on-prem Teradata, cloud-native Snowflake or BigQuery) are schema-on-write systems optimised for curated BI and reporting. They struggle when you throw large amounts of semi-structured, unstructured and event data at them, which is exactly the fuel modern AI models need. Meanwhile, raw data lakes have kept costs low but often turned into “data swamps” with weak governance and unpredictable performance.

Lakehouses bridge this gap by running warehouse-like engines directly on lake storage, using columnar formats and table metadata to deliver near-warehouse performance for complex analytics and AI. With the global data lakehouse market estimated in the high single-digit to low-teens billions of dollars and growing at more than 20% CAGR, this architectural shift is clearly underway.

Data Lake vs Data Lakehouse vs Data Warehouse: What’s the Difference?

A data warehouse is a highly structured analytics store optimised for curated BI, reporting and dimensional models. A data lake is a low-cost, schema-flexible repository for raw data, often semi-governed at best. A data lakehouse applies warehouse-grade governance, performance and reliability directly on lake storage, so one platform can power both BI and AI.

For architects in the US, UK, Germany and wider EU, the key decision is whether to keep separate lake + warehouse stacks or converge onto a governed lakehouse.

Comparing Storage, Schema, and Performance Characteristics

Storage

Lakes and lakehouses use object storage; warehouses hide storage under the hood.

Schema

Warehouses enforce schema-on-write; lakes lean schema-on-read; lakehouses blend both via table formats and contracts.

Performance

Warehouses shine for star-schema BI; raw lakes can be slow; lakehouses close the gap with columnar storage, partitioning and caching.

Cost

Lakes and lakehouses are usually cheaper per TB than pure warehouse storage, but poor design can still blow up compute bills.

Open formats like Iceberg or Delta let you keep data portable while still indexing, clustering and pruning aggressively for complex analytics.
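The blend of schema-on-write and schema-on-read described above can be sketched in a few lines. This is an illustration under stated assumptions, not how any particular table format enforces schemas: `EXPECTED_SCHEMA` and the helper functions `validate`, `evolve` and `read_with_schema` are hypothetical.

```python
EXPECTED_SCHEMA = {"order_id": int, "amount": float, "country": str}  # hypothetical table


def validate(record: dict, schema: dict) -> dict:
    """Schema-on-write: reject records with missing, unknown or wrongly typed fields."""
    unknown = set(record) - set(schema)
    missing = set(schema) - set(record)
    if unknown or missing:
        raise ValueError(f"unknown={sorted(unknown)} missing={sorted(missing)}")
    for name, expected in schema.items():
        if not isinstance(record[name], expected):
            raise TypeError(f"{name} should be {expected.__name__}")
    return record


def evolve(schema: dict, name: str, col_type: type) -> dict:
    """Additive schema evolution: new columns only, existing ones untouched."""
    if name in schema:
        raise ValueError(f"column {name} already exists")
    return {**schema, name: col_type}


def read_with_schema(record: dict, schema: dict) -> dict:
    """Schema-on-read: older rows lack newer columns, so readers see None."""
    return {name: record.get(name) for name in schema}


old_row = validate({"order_id": 1, "amount": 9.5, "country": "DE"}, EXPECTED_SCHEMA)
schema_v2 = evolve(EXPECTED_SCHEMA, "channel", str)
as_read = read_with_schema(old_row, schema_v2)  # old data stays readable
```

Writers are held to the contract at write time, while adding a column does not force a rewrite of existing data.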

When You Still Need a Separate Warehouse

Some organisations will still keep a dedicated warehouse alongside a lakehouse:

Highly standardised financial reporting in banks under BaFin or FCA oversight.

Legacy on-prem BI tools that can’t easily talk to modern lakehouse engines.

Extremely predictable, tabular reporting where an existing warehouse is already finely tuned.

For a German bank in Frankfurt, it’s common to run a BaFin-compliant core warehouse for regulatory reports while gradually shifting data science and ML to the lakehouse.

Common Hybrid Patterns in US, UK, and EU Enterprises

Typical patterns we see:

Lakehouse for AI + semi-structured data; warehouse for legacy BI

E.g., a US healthcare provider under HIPAA keeps Epic/Cerner aggregates in a warehouse while streaming HL7/FHIR feeds into a lakehouse for ML. (HHS)

Gradual migration

New workloads (fraud models, real-time customer 360, IoT telemetry) go lakehouse-first; old reports move later.

Sector variants

NHS trusts in the UK layering a lakehouse beside existing data platforms; German banks using a lakehouse for PSD2/open banking APIs and real-time analytics under DSGVO. (EUR-Lex)

Why Are Organisations Moving from Data Lakes to Lakehouse Architectures?

Organisations are shifting from raw data lakes to lakehouse architectures because unmanaged lakes create governance risk, erratic performance and low analytics adoption. Lakehouses layer robust schema management, data quality, security and cost controls on the same cheap storage, enabling reliable BI, self-service analytics and AI from one platform.

This is especially attractive in regulated US, UK, German and EU environments where GDPR/DSGVO, UK-GDPR, HIPAA and PCI DSS demand strong controls and auditability.

Pain Points of Legacy or “Data Swamp” Lakes

Legacy lakes on S3, ADLS or GCS often suffer from:

Missing or stale metadata and lineage.

Inconsistent schemas and duplicated pipelines.

High time-to-insight because every query needs custom wrangling.

Poor access controls, making regulators like BaFin or the FCA nervous.

These “data swamps” become liabilities just as AI and regulatory scrutiny ramp up.

Business and Compliance Drivers in US, UK, Germany, and EU

Drivers vary by region but rhyme:

US

Heavy AI/ML investment plus SOC 2 and HIPAA pressures for healthcare data.

UK

UK-GDPR and NHS data modernisation building on existing data platforms.

Germany & EU

DSGVO/GDPR, BaFin rules, ECB expectations and strict residency requirements.

A lakehouse makes it easier to apply consistent policies, log access and prove compliance.

How Data Lakehouse Architecture Unlocks AI, ML, and Real-Time Analytics

Because the same governed tables serve BI, feature stores and streaming analytics, a lakehouse simplifies AI delivery.

Feature stores and ML pipelines draw from clean, versioned data.

Streaming analytics supports fraud detection or real-time pricing in New York, London or Berlin.

Unified governance means your AI efforts don’t create a parallel “shadow lake” outside controls.

How Data Lakehouse Architecture Works

Storage & Open Table Formats (Iceberg, Delta, Hudi)

Open table formats like Apache Iceberg, Delta Lake and Apache Hudi bring ACID transactions, time travel and schema evolution to lake storage. They track metadata in manifests or logs so engines can skip irrelevant files, roll back bad writes and safely evolve schemas without breaking downstream consumers.

European organisations often favour these open formats to reduce US-only cloud lock-in and support multi-cloud or EU-only residency strategies, especially in DACH, Nordics and Benelux, where data sovereignty is front and centre.
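The file-skipping behaviour mentioned above relies on per-file statistics kept in table metadata. A minimal sketch, assuming a hypothetical manifest that records the minimum and maximum event date per data file (real formats store richer statistics in their own layouts):

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataFile:
    path: str
    min_date: str  # ISO dates compare correctly as plain strings
    max_date: str


# Hypothetical manifest for an events table.
MANIFEST = [
    DataFile("events/2025-01.parquet", "2025-01-01", "2025-01-31"),
    DataFile("events/2025-02.parquet", "2025-02-01", "2025-02-28"),
    DataFile("events/2025-03.parquet", "2025-03-01", "2025-03-31"),
]


def prune(manifest, start, end):
    """Keep only files whose [min, max] range overlaps the query range."""
    return [f.path for f in manifest if f.max_date >= start and f.min_date <= end]


# A query for mid-February only needs to open one of the three files.
to_scan = prune(MANIFEST, "2025-02-10", "2025-02-20")
```

Because the decision is made from metadata alone, the engine avoids reading files that cannot contain matching rows.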

Governance, Catalog, and Lineage in a Lakehouse

A modern lakehouse wraps those tables with:

Central catalogues recording ownership, classifications and sensitivity.

Fine-grained access control down to row/column level, with masking for PII/PHI.

Lineage tracking from source systems through transformations to BI dashboards and ML models.

These capabilities support GDPR/DSGVO obligations like purpose limitation, subject access requests and right to erasure, because you can trace and selectively delete a subject’s data across tables and regions.
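Column-level masking of the kind described above can be illustrated with a small policy function. This is a sketch under assumptions: the tags in `COLUMN_TAGS`, the roles in `ROLE_CLEARANCE` and the helper names are hypothetical, and an unsalted digest like this is pseudonymisation at best, not anonymisation.

```python
import hashlib

# Hypothetical column classifications and role clearances.
COLUMN_TAGS = {"patient_id": "pii", "diagnosis": "phi", "ward": "public"}
ROLE_CLEARANCE = {"analyst": {"public"}, "clinician": {"public", "pii", "phi"}}


def mask(value) -> str:
    """Replace a value with a short, stable digest so joins still line up."""
    return hashlib.sha256(str(value).encode("utf-8")).hexdigest()[:12]


def apply_policy(row: dict, role: str) -> dict:
    """Return the row as the given role is allowed to see it."""
    allowed = ROLE_CLEARANCE.get(role, set())  # unknown roles see nothing in clear
    return {
        # Untagged columns are treated as PII, so the policy fails closed.
        col: (val if COLUMN_TAGS.get(col, "pii") in allowed else mask(val))
        for col, val in row.items()
    }


row = {"patient_id": "P-1", "diagnosis": "J45", "ward": "A3"}
analyst_view = apply_policy(row, "analyst")
clinician_view = apply_policy(row, "clinician")
```

In a real platform the catalog would evaluate such a policy at query time; the point here is only that classification tags plus role clearances are enough to derive what each user sees.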

Serving BI, Data Science, and Operational Use Cases from One Platform

In a successful lakehouse:

Finance teams in London or Manchester query the same governed tables via Power BI or Tableau. (makitsol.com)

Data science teams in Seattle or Berlin run notebooks for experimentation and training.

Open banking / PSD2 APIs in European banks read from near real-time tables for balances and fraud checks.

Instead of copying data into multiple specialised stores, you standardise on a unified analytics platform with shared governance.

How Do You Migrate an Existing Data Lake or Warehouse to a Lakehouse Step by Step?

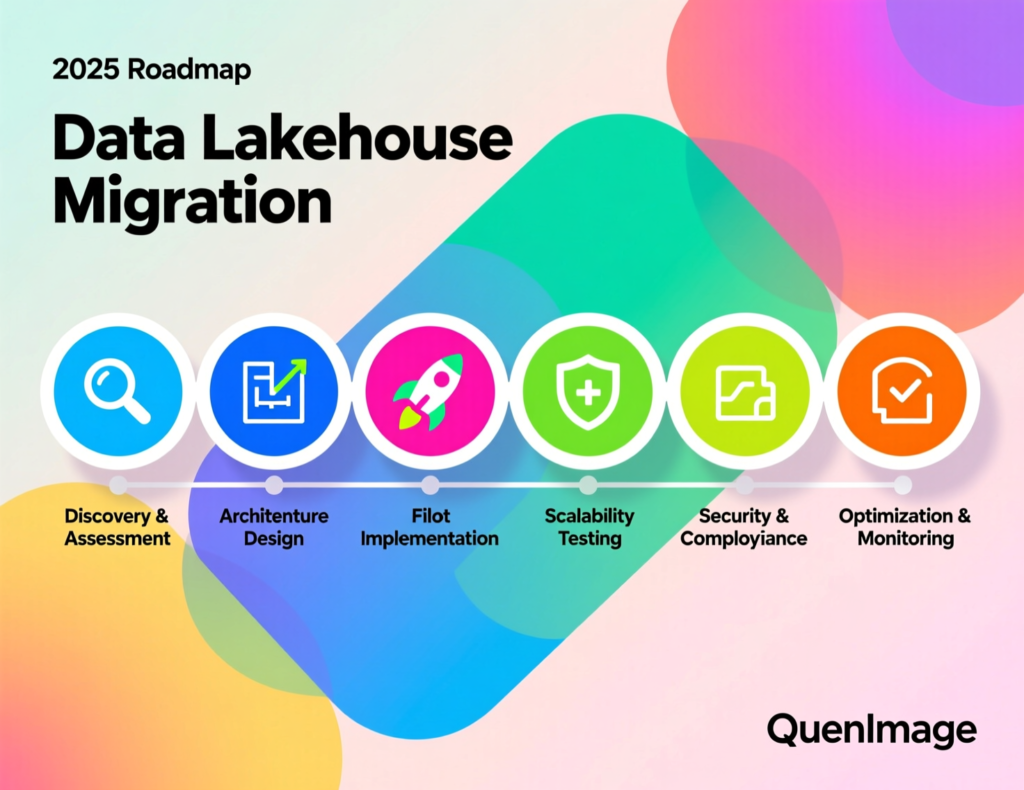

Migrating to a data lakehouse works best as an iterative programme. You start by profiling and prioritising your existing lakes and warehouses, then introduce open table formats and a central catalog on existing storage. From there, you refactor pipelines, migrate priority workloads and enforce governance controls aligned with GDPR/DSGVO, UK-GDPR, HIPAA and PCI DSS.

Each wave should be anchored to a small set of high-value AI or analytics use cases to prove ROI and de-risk change.

Assessment and Target Architecture Design

CDAOs and enterprise architects typically:

Inventory current platforms

Lakes, warehouses, marts, BI tools, regions and data volumes.

Classify data by sensitivity and geography

PII/PHI, card data, trade data, etc., mapped to US, UK, Germany, EU residency rules.

Design a target lakehouse

Choose cloud(s), regions, open formats, engines and governance model, aligning with broader multi-cloud strategy.
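The classification step above lends itself to an automated check during assessment. A minimal sketch, assuming a hypothetical inventory format and hypothetical residency rules (the data classes and the mapping in `ALLOWED_REGIONS` are illustrative, not legal guidance):

```python
# Hypothetical residency rules: data class -> regions where it may be stored.
ALLOWED_REGIONS = {
    "eu_pii": {"eu-central-1"},
    "uk_health": {"uksouth"},
    "us_phi": {"us-east-1"},
    "public": {"us-east-1", "uksouth", "eu-central-1"},
}

# Hypothetical inventory produced by the assessment.
INVENTORY = [
    {"name": "crm_contacts_de", "data_class": "eu_pii", "region": "eu-central-1"},
    {"name": "claims_archive", "data_class": "us_phi", "region": "eu-central-1"},
    {"name": "web_clicks", "data_class": "public", "region": "uksouth"},
    {"name": "legacy_dump", "data_class": "unclassified", "region": "us-east-1"},
]


def residency_violations(inventory):
    """Return names of datasets stored outside their permitted regions."""
    return [
        d["name"]
        for d in inventory
        # Unknown data classes have no permitted region, so they are flagged.
        if d["region"] not in ALLOWED_REGIONS.get(d["data_class"], set())
    ]


violations = residency_violations(INVENTORY)
```

Flagging unclassified datasets by default keeps the inventory honest: nothing moves into the target design until someone has assigned it a class.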

Step-by-Step Migration Pattern from Raw Lake to Lakehouse

A common pattern:

Enable table formats on existing S3/ADLS/GCS buckets (Iceberg/Delta/Hudi).

Introduce a central catalog and baseline governance policies.

Refactor ingestion into medallion-style or multi-zone layers (raw → cleaned → curated).

Migrate priority workloads – e.g., S3 → Databricks/Snowflake on AWS for a US retailer or ADLS → lakehouse on Azure for a UK public-sector dataset.

Retire legacy artefacts once workloads stabilise, freeing cost and operational complexity.
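The raw → cleaned → curated flow in the steps above can be sketched in plain Python. This is illustrative only: in practice these stages would be tables in Iceberg, Delta or Hudi processed by an engine, and the sample rows and function names are hypothetical.

```python
from collections import defaultdict

raw = [  # raw zone: events exactly as they arrived
    {"order_id": "1", "amount": "19.99", "country": "us"},
    {"order_id": "2", "amount": "n/a", "country": "DE"},
    {"order_id": "1", "amount": "19.99", "country": "us"},  # duplicate
    {"order_id": "3", "amount": "5.00", "country": "de"},
]


def to_cleaned(rows):
    """Cleaned zone: cast types, normalise values, drop bad rows and duplicates."""
    seen, out = set(), []
    for r in rows:
        try:
            amount = float(r["amount"])
        except ValueError:
            continue  # a real pipeline would quarantine these rows
        if r["order_id"] in seen:
            continue
        seen.add(r["order_id"])
        out.append({"order_id": int(r["order_id"]), "amount": amount,
                    "country": r["country"].upper()})
    return out


def to_curated(rows):
    """Curated zone: business-level aggregate, here revenue per country."""
    totals = defaultdict(float)
    for r in rows:
        totals[r["country"]] += r["amount"]
    return dict(totals)


cleaned = to_cleaned(raw)
curated = to_curated(cleaned)
```

Keeping the raw zone untouched means any cleaning rule can be changed later and the downstream zones rebuilt from source.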

This is where a partner like Mak It Solutions can align your lakehouse design with your existing cloud footprint and microservices or monolith strategies.

Risk Management, Change Management, and Success Metrics

Governed migrations treat risk and change as first-class concerns:

Data quality SLAs and lineage coverage targets for critical domains.

Compliance checkpoints

DPIAs under GDPR, BaFin reviews, HIPAA risk analyses, PCI DSS attestations.

Business KPIs

Time-to-dashboard, incident reduction, cost-per-query and AI project cycle time.

Stakeholder engagement

Regular comms across New York, London, Berlin and Dublin teams so platform changes don’t surprise downstream users.
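A lineage coverage target like the one listed above is easy to measure once the catalog exposes upstream links. A minimal sketch, assuming a hypothetical export of catalog entries with `critical` and `upstream` fields:

```python
def lineage_coverage(tables):
    """Share of critical tables that have documented upstream lineage."""
    critical = [t for t in tables if t["critical"]]
    if not critical:
        return 1.0  # nothing critical to cover
    return sum(1 for t in critical if t["upstream"]) / len(critical)


# Hypothetical catalog export.
CATALOG = [
    {"name": "gold.card_transactions", "critical": True, "upstream": ["clean.cards"]},
    {"name": "gold.patient_visits", "critical": True, "upstream": ["clean.visits"]},
    {"name": "gold.fraud_scores", "critical": True, "upstream": ["ml.features"]},
    {"name": "gold.adhoc_export", "critical": True, "upstream": []},
    {"name": "sandbox.scratch", "critical": False, "upstream": []},
]

coverage = lineage_coverage(CATALOG)  # 3 of 4 critical tables are covered
```

Tracking this number per migration wave gives the programme a simple, auditable signal alongside the business KPIs.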

Why Does Data Lakehouse Architecture Make Governance and Compliance Easier?

A data lakehouse centralises governance with strong schemas, catalogues and access controls applied directly to lake storage. Compared with loosely managed lakes, it makes it far easier to enforce data protection policies, limit access and respond to audits.

Fine-grained permissions, masking, retention policies and complete lineage are critical for GDPR/DSGVO, UK-GDPR, HIPAA, SOC 2 and PCI DSS compliance in financial services, healthcare and the public sector across the US, UK, Germany and EU.

Mapping Lakehouse Controls to GDPR, DSGVO, and UK-GDPR

Key GDPR principles translate cleanly into lakehouse capabilities:

Purpose limitation & minimisation → Tagging and policies that restrict who can query which fields.

Subject rights (access, rectification, erasure) → Lineage + deletion workflows that locate and remove a subject’s records.

Accountability & auditability → Detailed access logs, policy-as-code and change history in the catalog.
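The lineage-plus-deletion workflow for subject rights can be sketched as a graph walk followed by a delete per table. This is a simplified illustration: the lineage graph, table names and the `subject_id` column are hypothetical, and a real erasure would also need to handle older snapshots and backups.

```python
from collections import deque

# Hypothetical lineage graph: table -> tables derived from it.
LINEAGE = {
    "raw.customers": ["clean.customers"],
    "clean.customers": ["gold.customer_360", "ml.churn_features"],
    "gold.customer_360": [],
    "ml.churn_features": [],
}


def downstream(table, lineage):
    """Breadth-first walk returning the table and everything derived from it."""
    seen, queue = [table], deque([table])
    while queue:
        for child in lineage.get(queue.popleft(), []):
            if child not in seen:
                seen.append(child)
                queue.append(child)
    return seen


def erase_subject(tables, lineage, source, subject_id):
    """Delete a subject's rows from every table reachable from `source`."""
    removed = {}
    for name in downstream(source, lineage):
        rows = tables.get(name, [])
        tables[name] = [r for r in rows if r["subject_id"] != subject_id]
        removed[name] = len(rows) - len(tables[name])
    return removed  # doubles as an audit record of what was deleted where


tables = {name: [{"subject_id": "s1"}, {"subject_id": "s2"}] for name in LINEAGE}
audit = erase_subject(tables, LINEAGE, "raw.customers", "s1")
```

Without lineage, the second function would have no reliable way to know which derived tables still hold the subject's data.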

Sector-Specific Requirements: HIPAA, PCI DSS, BaFin, and Open Banking

HIPAA

Encryption, strong access controls and audit logs for PHI in US healthcare.

PCI DSS

Segmented cardholder data environments, encryption, key management and monitoring.

BaFin / ECB / open banking (PSD2)

Data segregation, access controls and end-to-end traceability for financial services.

A well-designed lakehouse gives CISOs and DPOs a single control surface instead of multiple partially governed stores.

Data Governance Operating Model on a Lakehouse

Technology isn’t enough. You’ll also need:

Data owners & stewards embedded in domains.

Platform teams managing the lakehouse, catalogs, CI/CD and FinOps.

Federated governance councils across New York, London, Berlin and Dublin to agree on common policies and exceptions.

How Does a Data Lakehouse Support AI, Machine Learning, and Real-Time Analytics?

A data lakehouse supports AI and ML by storing large volumes of curated, feature-ready data with consistent schemas, time travel and lineage. That makes model training, evaluation and monitoring more reliable than ad-hoc extracts.

By integrating streaming ingestion and incremental processing, lakehouses also keep features and dashboards fresh so models and analysts can react to events in near real time, while still respecting local governance and residency requirements across US and European regions.

Feature Stores, ML Pipelines, and MLOps on the Lakehouse

In ecosystems like Databricks, AWS, Azure or GCP, feature stores persist reusable features in lakehouse tables; experiment tracking and model registries sit beside them. That allows:

Consistent, versioned features for teams in San Francisco, London or Munich.

Reproducible experiments with a clear link back to underlying data.

Automated CI/CD pipelines that validate data quality before deploying new models.
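Versioned features are most useful when training code can ask what a feature looked like at a given moment. A minimal sketch of such a point-in-time lookup, assuming a hypothetical in-memory history keyed by entity (a real feature store would read this from lakehouse tables):

```python
from bisect import bisect_right

# Hypothetical feature history: (effective_timestamp, value), sorted by time.
FEATURE_HISTORY = {
    "cust-1": [(100, 0.10), (200, 0.35), (300, 0.80)],
}


def feature_as_of(entity_id, ts, history=FEATURE_HISTORY):
    """Return the latest feature value known at time `ts`, or None."""
    versions = history.get(entity_id, [])
    # Index of the first version that became effective after `ts`.
    idx = bisect_right([t for t, _ in versions], ts)
    return versions[idx - 1][1] if idx else None


# A label observed at t=250 must only see features known by then.
training_value = feature_as_of("cust-1", 250)
```

Looking up values as of the label timestamp avoids leaking future information into training data, which is one reason versioned tables make experiments reproducible.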

Real-Time and Streaming Patterns (Kafka, Kinesis, Pub/Sub)

Streaming into the lakehouse typically looks like:

Events from Kafka, Kinesis or Pub/Sub land in raw tables.

Streaming jobs transform them into cleaned and curated tables.

Near real-time dashboards and alerts power US retail, German manufacturing or EU logistics.

The same stream can feed real-time APIs for recommendations, fraud detection or anomaly detection.
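The incremental pattern behind these steps is that each run processes only events beyond the last committed checkpoint. A minimal sketch, assuming hypothetical events with a monotonically increasing `offset` and a toy threshold rule standing in for fraud detection:

```python
def process_increment(events, checkpoint):
    """Process only events with an offset beyond the last committed checkpoint."""
    new = [e for e in events if e["offset"] > checkpoint]
    flagged = [e["offset"] for e in new if e["amount"] > 1000]  # toy alert rule
    new_checkpoint = max((e["offset"] for e in new), default=checkpoint)
    return flagged, new_checkpoint


stream = [
    {"offset": 0, "amount": 40.0},
    {"offset": 1, "amount": 2500.0},
    {"offset": 2, "amount": 15.0},
]
flagged, checkpoint = process_increment(stream, checkpoint=-1)

stream.append({"offset": 3, "amount": 9000.0})  # a new event arrives
flagged_next, checkpoint = process_increment(stream, checkpoint)
```

Because the checkpoint advances only past processed events, rerunning a job after a failure does not raise the same alert twice.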

AI Use Cases Across US, UK, Germany, and EU

Typical AI use cases on a lakehouse include:

Fraud detection for EU banks operating under PSD2.

Patient flow optimisation and demand forecasting for NHS hospitals.

Predictive maintenance for German Industry 4.0 manufacturers in Berlin, Frankfurt or Munich.

E-commerce personalisation for US retailers running across multiple AWS or GCP regions.

Comparing Major Data Lakehouse Platforms and Ecosystems

Snowflake vs Databricks vs BigQuery for Lakehouse Use Cases

Snowflake

Strong for SQL analytics and governed sharing; has been moving into lakehouse territory with support for Iceberg/Delta-style external tables and ML integrations.

Databricks

Originator of the “lakehouse” term, strong open-source credentials (Delta Lake), deeply integrated ML and data engineering.

Google BigQuery

Serverless analytics with tight integration to GCS, Vertex AI and the broader Google Cloud ecosystem.

In US markets, Snowflake and Databricks both have strong enterprise traction; in Europe, we see BigQuery favoured for analytics-heavy workloads and Databricks for open-format and AI-heavy use cases.

AWS vs Azure vs Google Cloud Native Lakehouse Patterns

Cloud-native lakehouse patterns often look like:

AWS – S3 + Iceberg/Delta + Athena/Redshift/Databricks, with Glue catalog.

Azure – ADLS + Delta + Azure Synapse / Fabric + Azure Databricks.

Google Cloud – GCS + BigQuery + Dataproc / Dataflow, with Iceberg/Delta options.

Our separate comparison of AWS vs Azure vs Google Cloud digs deeper into which cloud tends to win for data and AI workloads.

Open Formats and Vendor Lock-In

With roughly 79–89% of enterprises already using multi-cloud, open table formats have become a strategic hedge against lock-in.

EU and DACH organisations in particular use Iceberg, Delta or Hudi to keep data portable between hyperscalers, sovereign clouds or even on-prem clusters. For US firms, the same strategy often underpins cloud repatriation or hybrid cloud moves.

Data Lakehouse Best Practices and Common Pitfalls

Designing a Modern Cloud Data Stack Around the Lakehouse

Good lakehouse designs usually include:

Reliable orchestration and observability tooling.

Strong data quality checks and SLAs.

Clear patterns for batch, micro-batch and streaming workloads.

FinOps governance for storage and compute, especially as AI workloads scale.

Governance, Lineage, and FinOps Best Practices

Use tagging and chargeback/showback to keep teams accountable for spend.

Define lineage coverage targets for critical domains (e.g., card data, PHI).

Align FinOps with SOC 2, GDPR and other standards so cost optimisation never undermines compliance.
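Tag-based showback, as recommended above, comes down to grouping spend by an ownership tag and making untagged spend visible. A minimal sketch, assuming a hypothetical usage export with `team` and `cost_usd` fields:

```python
from collections import defaultdict


def showback(usage, untagged="UNTAGGED"):
    """Total cost per team tag, largest first; untagged spend is kept visible."""
    totals = defaultdict(float)
    for item in usage:
        totals[item.get("team") or untagged] += item["cost_usd"]
    return dict(sorted(totals.items(), key=lambda kv: kv[1], reverse=True))


# Hypothetical usage records from a billing export.
USAGE = [
    {"resource": "ml-training-cluster", "team": "data-science", "cost_usd": 120.0},
    {"resource": "nightly-etl", "team": "platform", "cost_usd": 30.0},
    {"resource": "feature-backfill", "team": "data-science", "cost_usd": 50.0},
    {"resource": "orphaned-warehouse", "team": None, "cost_usd": 10.0},
]

report = showback(USAGE)
```

Surfacing the untagged bucket explicitly tends to be the quickest way to get teams to tag their resources.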

Anti-Patterns to Avoid When Implementing a Lakehouse

Watch out for:

Rebuilding a data swamp on new tech by skipping modelling and governance.

Ignoring stakeholder onboarding, leaving global teams confused or resistant.

Copy-pasting every warehouse pattern without revisiting what lakehouse tools can simplify or remove.

Concluding Remarks

Summary: When a Data Lakehouse Is the Right Move

Data lakehouse architecture is usually the right move when you:

Need to support both BI and AI on fast-growing, multi-structured datasets.

Operate under UK-GDPR, GDPR/DSGVO, HIPAA or PCI DSS and need stronger controls.

Want to reduce complexity and cost from running separate lakes and warehouses.

For smaller organisations or highly standardised reporting, a simpler warehouse or hybrid approach may still suffice.

(General note: This article is for educational purposes only and is not legal, financial or compliance advice. Always consult your internal and external advisors.)

Roadmap: From Vision to Pilot Use Cases

The most successful programmes start with an architecture assessment, then pilot a lakehouse for one or two high-value cross-region use cases such as an EU bank’s fraud platform or a UK healthcare analytics hub. From there, they iterate into more domains, tightening governance and FinOps along the way.

Mak It Solutions works with data and cloud leaders from San Francisco and New York to London, Berlin and beyond to design, build and optimise modern cloud data stacks, whether you’re planning your first AI-ready data lakehouse or untangling a legacy data swamp.

If you’re evaluating data lakehouse architecture for your US or European organisation, you don’t need a vendor pitch; you need a neutral, hands-on blueprint. Share your current lake, warehouse and cloud landscape with Mak It Solutions, and our Editorial Analytics Team can help you sketch a pragmatic migration roadmap aligned to your AI, governance and cost goals.

FAQs

Q : How long does a typical data lake to lakehouse migration take for a mid-sized US or European enterprise?

A : Most mid-sized enterprises see initial value from a lakehouse in 3–6 months, starting with one or two high-impact domains and a handful of dashboards or ML use cases. Full consolidation of legacy lakes and warehouses can take 12–24 months depending on complexity, regulatory reviews and how many BI tools and data products you migrate. The key is to sequence the work into waves that each deliver visible business value, rather than one massive “big bang” cutover.

Q : Do I need to rebuild all my ETL/ELT pipelines when adopting a data lakehouse architecture?

A : Not always. Many ingestion jobs can stay as they are, with outputs redirected into open table formats instead of flat files or warehouse tables. However, you will often refactor some legacy ETL into more modular ELT or medallion-style pipelines to take advantage of lakehouse features like time travel and incremental processing. A sensible approach is to triage pipelines by business criticality and technical debt and only re-engineer what truly needs it.

Q : How does a data lakehouse impact BI tools like Power BI, Tableau, or Looker already used in my organisation?

A : Most modern BI tools already connect to lakehouse engines via standard drivers, so your users can keep familiar tools while pointing reports at new curated lakehouse tables. You may need to rebuild some semantic models and optimise query patterns, but you don’t have to throw away existing dashboards. In many US, UK and EU cases the lakehouse simply becomes the new governed “source of truth” for those tools, improving performance and lineage. (makitsol.com)

Q : What are the cost implications of moving from a separate data lake and warehouse to a unified data lakehouse architecture?

A : In the medium term, a well-designed lakehouse can reduce duplicated storage, simplify data pipelines and lower overall TCO, especially when combined with strong cloud cost optimisation practices. In the short term, expect overlapping costs as you run old and new platforms in parallel, plus the investment required for migration and upskilling. FinOps disciplines, tagging and chargeback/showback are crucial to keeping compute and storage under control as AI workloads grow.

Q : Can a data lakehouse be deployed in a hybrid or on-premise model for strict data residency requirements in Germany or the EU?

A : Yes. While most lakehouses today run on public clouds, the same patterns (open table formats, catalogues, governance and engines) can be deployed on private clouds, sovereign clouds or on-prem clusters where required. German and EU organisations with stringent residency rules often adopt hybrid models, keeping sensitive data in in-country or sovereign environments while using public cloud for less sensitive analytics and AI workloads. The trick is to design your architecture and data contracts so they tolerate this split from day one.