OpenAI vs Open-Source AI: Cost, Risk and Control

OpenAI vs Open-Source AI: Cost, Risk and Control

OpenAI vs Open-Source AI: Cost, Risk and Control

For most US, UK and EU organizations, OpenAI’s proprietary stack wins when you care most about speed, product velocity and ecosystem. Open-source and self-hosted LLMs win when data sovereignty, regulatory control (GDPR/UK-GDPR/DSGVO, HIPAA) and deep customization matter more than convenience. In practice, a hybrid approach OpenAI for generic productivity, open-source for sensitive or regulated workloads is fast becoming the default.

Introduction

Choosing between OpenAI vs open-source AI isn’t really a “which model is smarter?” debate. It’s a strategy call about cost, risk and control for your business.

OpenAI gives you the fast lane: battle-tested models, managed infrastructure and a huge ecosystem of tools and integrations. Open-source AI gives you the steering wheel: more control over data, governance and where models run (Frankfurt, London, Zurich, on-prem in your own racks).

For most US, UK and German/EU organizations, the endpoint is the same: a hybrid stack. OpenAI (and similar proprietary APIs) cover speed, experimentation and generic productivity. Open-source and self-hosted models step in wherever regulation, sovereignty, auditability or very specific behavior matter more than convenience.

Defining OpenAI vs Open-Source AI in 2025

AEO micro-answer.

In 2025, “proprietary AI” usually means closed models like OpenAI’s GPT family accessed via API, while “open-source AI” covers models where weights and code are available under open licenses. In between sits a fast-growing middle category of open-weight models: you can download and run them, but under more restrictive licenses than fully open-source.

What counts as “proprietary AI” today?

Proprietary AI models are closed, vendor-hosted systems you access via API or managed services, including:

OpenAI’s GPT-class models (e.g., GPT-4o and successors).

Anthropic’s Claude family.

Google’s Gemini models.

Other closed services from AWS, Azure and Google Cloud.

You don’t see or control the weights, training data or low-level safety stack. You:

Pay per token or request.

Rely on the vendor’s uptime, SLAs and governance.

Inherit their roadmap, deprecation schedule and safety policies.

OpenAI and several peers now also release open-weight models, but these ship with use restrictions (e.g., no competitive use, no military use) and are not the same as truly open-source LLMs.

For a CIO in New York or London, “proprietary” essentially means high-quality models with minimal ops burden but limited transparency and real vendor lock-in.

Open-source, open-weight and self-hosted LLMs

On the “open” side, it helps to distinguish three buckets:

Fully open-source models

Code, weights and (sometimes) training recipes are public.

Licensed under OSI-style licenses (Apache-2.0, MIT, etc.).

You’re free to self-host, fine-tune and embed them in commercial products (subject to license)

Open-weight foundation models

Model weights are downloadable (often via Hugging Face), but with usage restrictions (non-commercial, field-of-use limits, competitive carve-outs).

Great for experimentation and internal tools, but your lawyers will care deeply about license terms if you’re a bank in Frankfurt or a healthtech startup in Austin. Self-hosted LLM infrastructure

You deploy Llama, Mistral, DeepSeek or regional models on:

AWS/GCP/Azure (us-east-1, eu-west-2 London, eu-central-1 Frankfurt, etc.).

Regional or sovereign clouds in Paris, Berlin, Zurich.

On-prem GPU clusters in German factories or UK hospitals.

You own the MLOps, scaling, logging and security story—both a burden and a powerful source of control.

When German teams search for “open source KI vs OpenAI für DSGVO konforme Unternehmen”, this is what they’re really asking: more sovereignty and compliance clarity vs more operational complexity.

Key models and ecosystems to know

On the proprietary side, OpenAI’s GPT-4o is still widely seen as a top general-purpose LLM, especially for multimodal and multilingual use cases, with strong rankings in independent 2025 comparisons.

On the open side, most evaluation shortlists include:

Meta Llama 3 as a versatile open(ish) baseline.

Mistral models for efficiency and European alignment.

DeepSeek and similar models for cost-efficient, high-throughput inference.

European projects such as OpenEuroLLM and other EU-backed initiatives aimed at sovereignty and DSGVO-friendly deployments.

Around these models sits a fast-maturing ecosystem:

Hugging Face and model hubs.

Vector DBs (for RAG) and inference stacks like vLLM, KServe and Kubernetes. )

Regional AI hubs in Paris, Berlin, Zurich and London pushing open and hybrid architectures.

If you’re already thinking about edge vs cloud for AI workloads, this ecosystem is where you’ll spend a lot of your design time.

Strategic Choice.

AEO micro-answer.

For most organizations, OpenAI wins on time-to-value, managed scaling and ecosystem; open-source and self-hosted LLMs win where control, sovereignty, or deep customization are non-negotiable. A hybrid approach OpenAI for generic tasks, open-source for regulated workloads is increasingly the default in US, UK and EU enterprises.

Framing the decision for CIOs, CDOs and product leaders

For CIOs in San Francisco or Munich, choosing between OpenAI vs open-source AI affects:

Innovation speed

OpenAI lets a product team ship a prototype in days; open-source stacks may need weeks of infra work.

Vendor lock-in & negotiation power

Proprietary APIs concentrate power with one vendor; open-source gives you credible multi-vendor options.

Exit options

If OpenAI or another vendor changes pricing or terms, do you have a viable migration path?

Risk governance

Boards and regulators increasingly expect a clear view on model sources, training data assumptions and incident response.

Personas differ.

US SaaS & startups

Optimise for speed, talent leverage and runway; often start OpenAI-first, then add open-source for cost control or specific features.

UK public sector & NHS-adjacent projects

Need UK-GDPR, NHS data sensitivity and ICO guidance aligned; more cautious about sending PII to global APIs.

German industrials & EU banks/insurers

Operate under DSGVO/GDPR and BaFin-style expectations; more likely to require EU-only hosting and strong documentation.

If you’re mapping generative AI security risks in the workplace, these trade-offs sit right at the centre of your risk register.

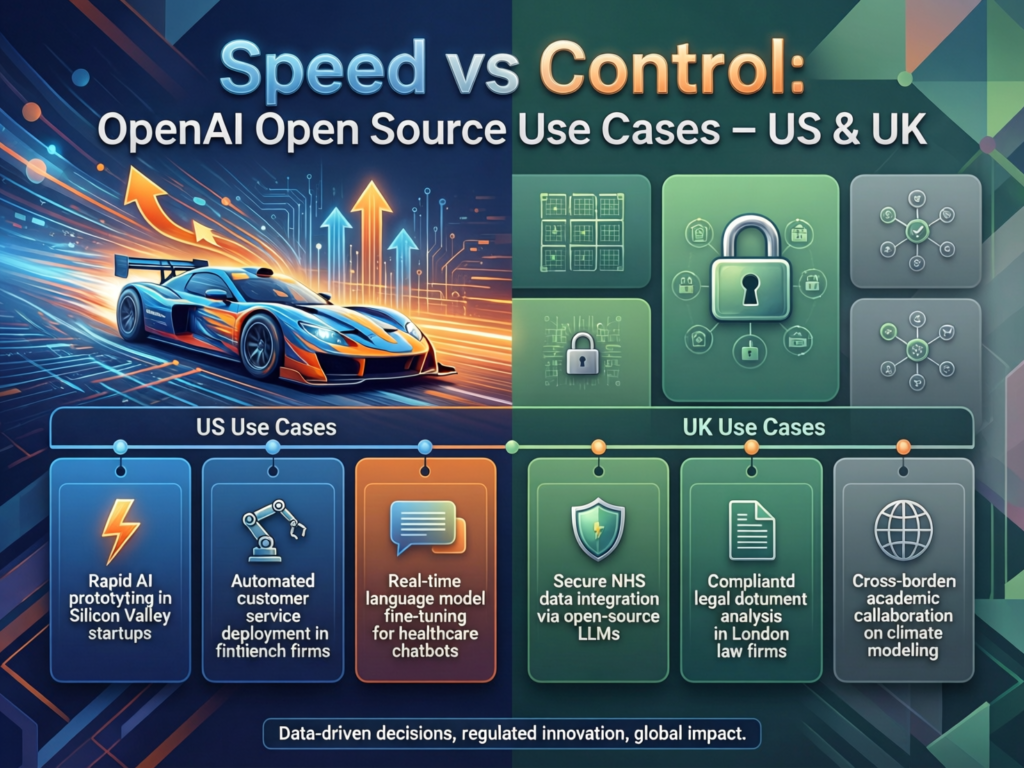

Use-case archetypes.

Speed-first

A fintech in New York or Austin wants to ship an AI co-pilot in 90 days.

OpenAI provides best-in-class models, Assistants APIs, function calling and evals with minimal infra.

Risk is acceptable because data is carefully anonymised or synthetic, and workloads are non-regulated.

Control-first

A BaFin-regulated bank in Frankfurt or a public-sector project in Paris must prove data never leaves the EU, keep detailed logs, and transparently document model behavior.

They lean toward Llama/Mistral on EU cloud regions or sovereign clouds, with tight access controls and in-house governance.

Hybrid

Use OpenAI for generic productivity (email drafting, meeting notes, agent copilots).

Use open-source/self-hosted models for PHI/PII workloads, model-risk-sensitive use cases or EU-resident datasets.

This is the pattern already visible across clients working on AI content with guardrails and gen-AI security. (Mak it Solutions)

Regional strategy patterns.

US

Heavy focus on productivity, talent leverage and using cloud credits wisely.

Healthcare and fintech workloads must align with HIPAA, PCI DSS and SOC 2.

Often OpenAI-first, with isolated open-source clusters for PHI or card data.

UK

Balances UK-GDPR, NHS data rules and London’s fintech scene.

Regulators (ICO, FCA) increasingly ask tough questions about AI explainability, vendor dependency and third-party risk.

Germany/EU

Political emphasis on data sovereignty and “digital strategic autonomy.”

The EU AI Act and national bodies like BaFin push institutions toward robust documentation and local hosting (Frankfurt, Berlin, Paris, Zurich).

Open-source and open-weight models, combined with EU-only hosting, fit the narrative—provided you still meet AI Act obligations.

Technical Performance, Benchmarks & Infrastructure

AEO micro-answer

OpenAI delivers strong out-of-the-box quality, especially for reasoning, coding and multilingual tasks, with managed latency and scaling. Well-chosen open-source LLMs (Llama, Mistral, DeepSeek) can match or beat OpenAI in specific domains if you invest in the right GPUs, inference stack and fine-tuning.

Model quality and benchmarks: OpenAI vs Llama, Mistral, DeepSeek

Independent 2025 round-ups still rank GPT-4o among the top general-purpose LLMs for reasoning and multimodal work. Open-source models are closing the gap:

Llama 3 often wins on price–performance and availability.

Mistral models excel at efficient inference on smaller GPU nodes, ideal for EU workloads and edge scenarios.

DeepSeek and similar models show competitive coding and analytics performance at lower cost, especially when heavily tuned.

For multilingual EU scenarios say a Berlin insurer serving German, French and Italian customers GPT-4o and leading multilingual open models both perform well. The deciding factors become cost, latency and governance, not raw accuracy

Latency, throughput and SLOs.

OpenAI API

Global infra, tuned networking and sophisticated batching: typically sub-second to low-seconds latency for GPT-4o under normal load.

You inherit OpenAI’s autoscaling and rate-limit management, but you’re also constrained by their quotas and roadmap.

Self-hosted on AWS/Azure/GCP

In us-east-1, eu-west-2 (London) or eu-central-1 (Frankfurt) you can optimise latency to users in the US, UK and Germany/EU. (Mak it Solutions)

With vLLM, tensor parallelism and request batching you can hit similar or better latency at scale but only if you have strong SRE/MLOps capability. (Hugging Face)

Data residency

EU privacy rules increasingly push sensitive workloads into EU-only regions or sovereign clouds. (IBM)

OpenAI and other vendors now offer region-bound processing, but some regulators and customers still prefer fully self-hosted paths.

Integration & operations.

With OpenAI you get

Mature SDKs, Assistants, RAG features, eval tooling and function calling patterns.

Trade-off: less observability into the model internals and fewer knobs to change the safety stack beyond prompt design, policies and retries.

With open-source you typically adopt:

Hugging Face + vLLM or KServe on Kubernetes, plus vector DBs (Pinecone, pgvector, etc.).

Full control over logging, PII redaction, custom filters and jailbreak mitigation but you must design and operate it all.

This is where Mak It Solutions often connects the dots: blending cloud cost optimization with AI observability and security patterns so you don’t overspend on GPUs or under-invest in monitoring. (Mak it Solutions)

Compliance, Governance & Data Protection

AEO micro-answer.

If you’re tightly bound by GDPR/UK-GDPR, HIPAA, PCI DSS or BaFin/NHS rules, self-hosted and open-source stacks can simplify data residency and logging. But major vendors like OpenAI are rapidly adding EU-hosted, enterprise-only, no-training options that can also satisfy regulators if you configure them correctly and document your controls.

GDPR, UK-GDPR, DSGVO and data residency in Europe

Under GDPR/DSGVO and UK-GDPR you must understand:

Who is data controller vs processor.

The awful basis for each processing activity.

How you minimise personal data and handle transfers to “third countries” like the US.

For AI, this translates to:

Mapping which prompts and outputs contain PII/PHI.

Ensuring EU-resident data stays in Frankfurt, Paris or Zurich where required.

Choosing between:

OpenAI’s regional offerings (EU-only processing, no training on your data).

Fully self-hosted models in EU data centres where you’re the controller of both data and infra.

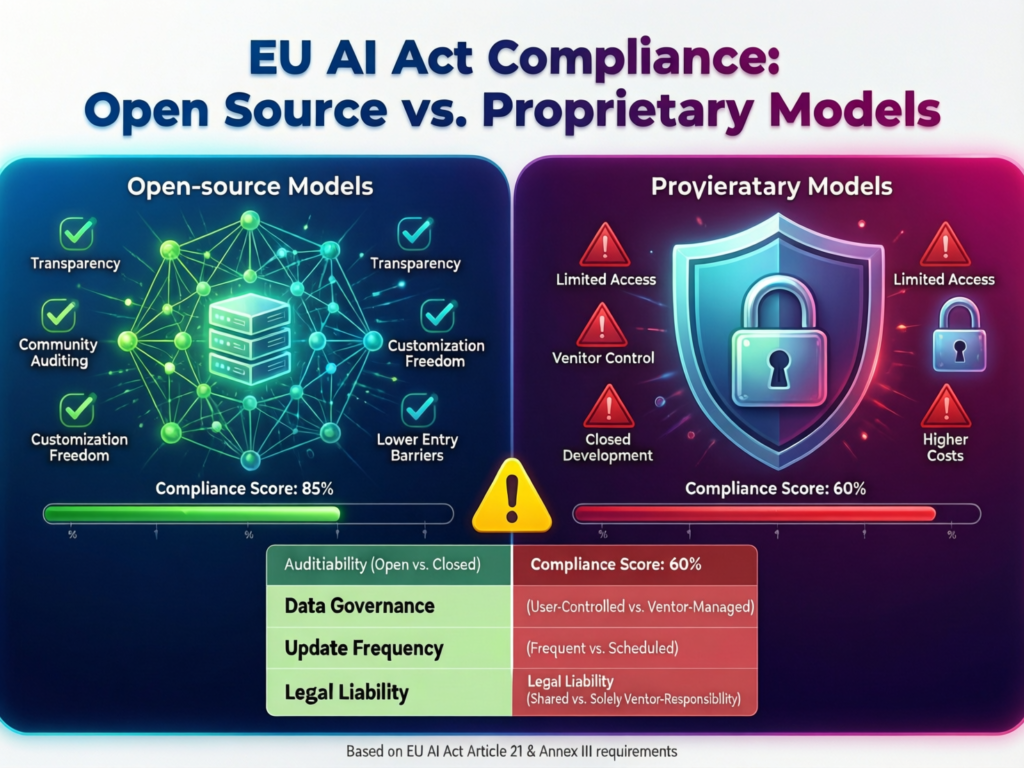

EU AI Act obligations for OpenAI vs open-source models

The EU AI Act introduces.

A risk-based regime with bans on some practices and strict requirements for high-risk AI.

Special rules for general-purpose AI (GPAI) models and additional obligations for models with “systemic risk,” including evaluation, incident reporting and cybersecurity controls.

Timeline (simplified):

2024 – AI Act entered into force.

Feb 2025 – Bans on prohibited practices apply.

Aug 2025 – Rules for GPAI models start to apply, supported by a GPAI Code of Practice.

2026–2027 – High-risk system rules and full enforcement ramp up.

Crucially, truly open-source GPAI models get some exemptions, but not a free pass especially if they cross the systemic-risk compute thresholds.

Sector-specific rules: HIPAA, PCI DSS, SOC 2, NHS & BaFin

US (HIPAA, PCI DSS, SOC 2)

For PHI or card data, many teams still prefer self-hosted LLMs or tightly scoped vendor integrations with BAAs, strict DLP and tokenisation.

SOC 2 and internal audit will scrutinise your AI vendors’ controls and incident response paths.

UK (NHS, FCA, ICO)

NHS data has its own sensitivity and retention rules; AI in diagnostics or triage will likely be high-risk under the AI Act and subject to UK-GDPR/ICO guidance.

London fintechs answer both to FCA (model risk, operational resilience) and emerging AI-specific expectations.

Germany/EU (BaFin, ECB, sector regulators)

BaFin already expects robust model risk management and documentation; plugging OpenAI into a loan underwriting pipeline without a clear control framework is unlikely to pass scrutiny.

Open-source/self-hosted stacks give more audit trails and local logs, but they also make you directly responsible for every patch and control.

If your AI is touching security tooling, don’t forget to cross-check decisions against your AI in cybersecurity strategy.

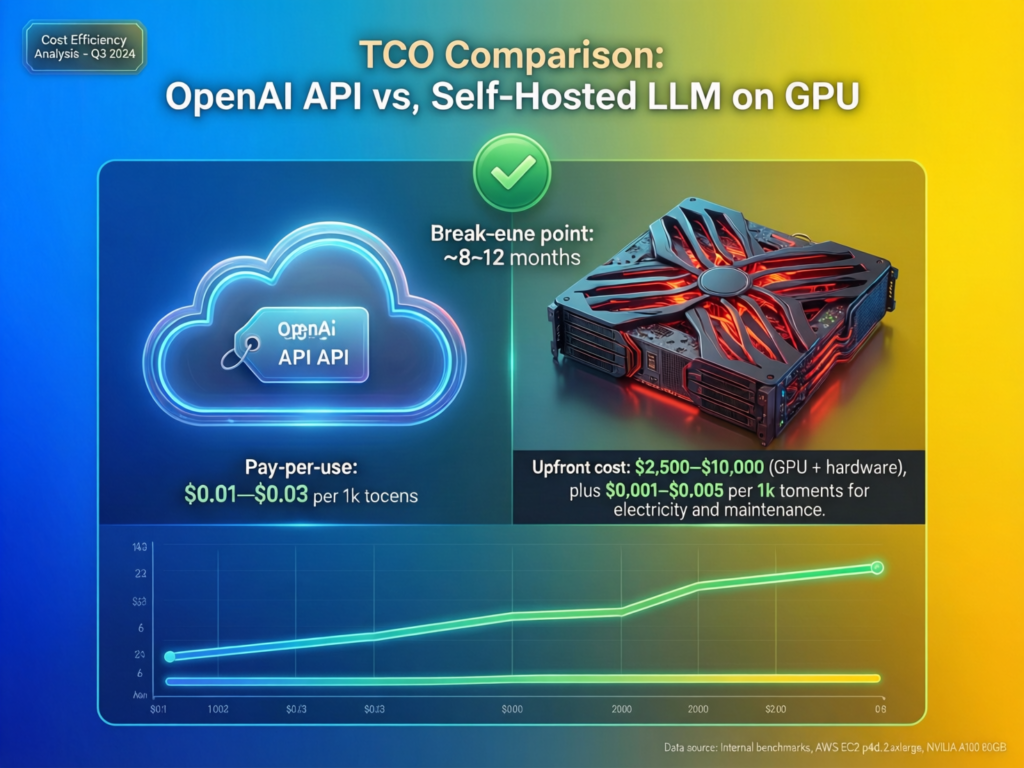

Cost, TCO and Operating Models

AEO micro-answer.

OpenAI usually provides low upfront cost and predictable pay-per-use pricing, which is ideal at low to medium scale. Open-source AI can be cheaper at sustained high volume, but only if you run GPUs efficiently and are ready to pay the people, governance and incident-response costs that come with owning the stack.

Direct costs.

OpenAI & similar APIs

You pay per input/output token, sometimes with cheaper “batch” or “distilled” tiers.

Great for US startups in San Francisco or Seattle validating product–market fit. You can keep infra lean and capital-light while you learn.

Self-hosted on hyperscalers (AWS/Azure/GCP)

GPU instances in us-east-1, eu-west-2 (London), eu-central-1 (Frankfurt) are expensive but flexible and benefit from autoscaling and spot pricing.

For 24/7 high-volume workloads, reserved instances and right-sizing can turn GPUs into a lower unit cost than per-token APIs. (Mak it Solutions)

EU sovereign clouds / on-prem in Germany

Often higher energy and operational costs, but potentially lower regulatory friction and better negotiation leverage with local providers.

Hidden costs.

Self-hosting open-source LLMs adds:

MLOps engineers and SREs to keep clusters healthy, update models and tune throughput.

Security, privacy and compliance staff to handle SOC 2, GDPR documentation, DPIAs and vendor reviews.

24/7 incident response for model abuse, jailbreaks and data leakage—especially in sectors like finance and healthcare. (Mak it Solutions)

APIs reduce infra overhead but still require.

Vendor due diligence (DPAs, BAAs, data-processing agreements).

Strong guardrails at the application layer (logging, PII redaction, prompt controls)

Scenario-based TCO comparison for US, UK and German buyers

US healthtech startup (US-East)

Early stage: OpenAI is usually cheaper no infra team, low request volume, strong HIPAA-ready tooling.

At high, predictable volume (millions of visits/month), a well-run Llama stack on AWS with PHI controls may undercut API spend if you can afford the MLOps team.

London fintech under UK-GDPR

Starts with OpenAI for internal agents and customer-service copilots.

As conversational volume grows and model decisions affect regulated products, it may be cheaper and safer to migrate critical flows to a self-hosted open-weight model in London or Frankfurt, while keeping OpenAI for experimentation.

German Industrie 4.0 manufacturer (Frankfurt)

Data is already centralised in German data centres; OT integration and BaFin/ECB expectations drive demand for local control.

After an initial PoC phase on OpenAI, long-term TCO often favours self-hosted EU models, especially if AI workloads run 24/7 in production lines.

If TCO is a recurring pain point, it’s worth pairing this analysis with a dedicated cloud cost optimization review. (Mak it Solutions)

Practical Decision Framework & Hybrid Strategies

AEO micro-answer:

To choose between OpenAI and open-source AI, start with data sensitivity and regulation, then consider volume/scale, latency needs, internal skills and your stance on vendor lock-in. In many US/UK/EU cases, the answer is a hybrid stack where you pair OpenAI with one or more self-hosted LLMs.

A 7-question decision checklist for your AI stack

Ask these seven questions.

What data will the model see?

Anonymous marketing copy vs PHI, payroll or trading data.

Which regulations apply?

GDPR/UK-GDPR/DSGVO, HIPAA, PCI DSS, BaFin, FCA, NHS, etc.

What’s our expected scale and concurrency?

Tens of users or tens of thousands?

How sensitive is latency?

Async batch vs real-time chat or trading decisions.

What’s our budget and forecasted AI spend over 24 months?

What skills do we have in-house?

Kubernetes, GPUs, MLOps, security, legal/compliance.

What is our vendor strategy?

Single strategic partner vs multi-cloud, multi-model posture.

Turn this into a simple matrix:

Mostly “low risk / low scale / low skills”? → OpenAI-first.

Mostly “high risk / high scale / strong infra skills”? → OSS/self-host-first.

Mixed answers? → Hybrid.

Common patterns: OpenAI core + OSS edge

Two patterns appear again and again in US and EU deployments:

OpenAI for generic assistants; OSS for sensitive workloads

GPT-class models power general chat, doc Q&A and coding assistants.

Llama/Mistral/DeepSeek power PHI-aware workflows, internal search, or industrial decision support inside EU-only clusters.

OpenAI for experimentation; OSS for stable production

Product and data teams prototype with OpenAI to find high-value use cases.

Once stable, “core” use cases migrate onto cost-optimised, self-hosted open-weight models—with guardrails and governance inherited from your AI content with guardrails, cloud cost optimization and edge vs cloud strategies. (Mak it Solutions)

Implementation roadmap: 0–3, 3–12, 12–24 months

0–3 months

Run small PoCs in US and EU regions.

Map data flows and regulations; perform DPIAs and initial risk assessments.

3–12 months

Stand up a hybrid stack: OpenAI + at least one open-source model on EU cloud.

Integrate monitoring, logging and incident response; align with SOC 2 and AI governance baselines.

12–24 months

Re-evaluate your stack as EU AI Act obligations fully bite and US/UK rules mature.

Optimise TCO with GPU scheduling, FinOps and workload placement between cloud, edge and on-prem. (Mak it Solutions)

The Future: EU AI Act, Open-Weight Models and AI Overviews

AEO micro-answer.

EU and UK regulators care so much about open-source vs proprietary AI because open models change who controls powerful capabilities and how easily safeguards can be removed or bypassed. The EU AI Act, GPAI Code of Practice and emerging national laws all aim to balance open innovation with systemic-risk mitigation.

How the EU AI Act reshapes OpenAI vs open-source trade-offs

Despite heavy lobbying from US Big Tech and European startups for delays, the European Commission is sticking to the AI Act timeline, with GPAI rules kicking in from August 2025 and full adoption by 2027.

Implications.

Providers of high-end models (OpenAI, Anthropic, big EU labs) must meet systemic-risk obligations, including evaluation, incident reporting and cybersecurity.

Open-source models get partial exemptions, but once they cross certain compute thresholds or are fine-tuned into new systemic-risk models, obligations re-attach

For buyers in Berlin, Brussels or London, this means:

You can’t avoid AI Act obligations simply by choosing open-source.

You can design architectures and documentation that make compliance easier often by using open-source plus clear governance instead of a black-box API everywhere.

OpenAI’s open-weight moves and the blurring line

As OpenAI, Meta and other vendors release open-weight and limited-open models, the old binary “proprietary vs open-source” framing gets blurry.

Vendors use open-weight models to answer sovereignty and pricing concerns.

European policymakers both encourage open ecosystems and worry about powerful capabilities with fewer guardrails.

Expect more hybrid licensing models, more options to run vendor models on your own GPUs, and tighter ties between AI providers and the EU AI Office’s Code of Practice.

Answer engines, AI Overviews and discoverability

Google’s AI Overviews, AI-powered SERPs and answer engines are already changing how CIOs and product leaders in the US, UK and EU discover guidance on questions like “OpenAI vs open-source LLM for HIPAA-compliant applications.”

Content that surfaces well tends to:

Provide clear, self-contained micro-answers to common questions.

Use structured comparisons, FAQs and clean headings.

Include schema and speakable snippets that answer engines can easily quote.

That’s the structure you’re reading now which also mirrors how Mak It Solutions designs AI content with guardrails to perform across both SEO and answer engines. (Mak it Solutions)

Summary & recommended next step

For a one-screen summary.

US readers

Start OpenAI-first unless you’re handling PHI/PCI at scale; layer in open-source where cost and governance demand it.

UK readers

Plan for hybrid from day one; balance UK-GDPR, NHS/FCA expectations and London’s innovation tempo.

German/EU readers

Assume EU AI Act + DSGVO/sector rules push you toward EU-hosted open or open-weight models, with OpenAI used selectively under strong contractual and technical controls.

If you’re unsure, your next move is not “pick a model” it’s to map your data, regulations and workloads, then design the simplest stack that satisfies all three.

Key Takeaways

OpenAI vs open-source AI is a strategy choice, not a popularity contest: decide whether you prioritise speed and ecosystem or sovereignty and deep control.

Hybrid stacks are becoming the default in US, UK and EU enterprises OpenAI for generic tasks, self-hosted LLMs for regulated, high-risk or cost-sensitive workloads.

The EU AI Act and GPAI Code of Practice tighten obligations on both proprietary and open-source models, especially those with systemic risk.

True TCO includes GPUs and people, governance, audits and incident response self-hosting only wins when you operate at scale and have the skills.

A simple decision checklist and phased roadmap (0–3, 3–12, 12–24 months) keeps your architecture adaptable as regulations and models evolve.

Partnering with a team that understands cloud, AI safety, compliance and cost optimisation together reduces the risk of expensive re-platforming later.

If you’re staring at a maze of options GPT-4o, Llama, Mistral, DeepSeek, Frankfurt vs London vs us-east-1—you don’t have to untangle it alone. Mak It Solutions works with US, UK and EU teams to design hybrid AI architectures that respect GDPR/UK-GDPR, HIPAA and sector rules while still shipping features fast.

Share your current stack, data locations and top 2–3 AI use cases, and our Editorial Analytics Team can help you turn them into a concrete OpenAI-vs-open-source decision and a 12-month roadmap.( Click Here’s )

FAQs

Q : Is OpenAI considered “open source” if it releases open-weight models and APIs for enterprises?

A : No. OpenAI remains a proprietary vendor, even if it releases open-weight models or enterprise APIs. Open-weight means you can download and run certain model weights under specific license terms, but you don’t get full open-source rights such as unrestricted modification and redistribution under an OSI-approved license. For compliance and procurement, you should still treat OpenAI as a closed, third-party SaaS/API provider rather than an open-source project.

Q : When does it make financial sense to migrate from OpenAI APIs to a self-hosted open-source LLM stack?

A : A migration usually makes sense when three things are true: your monthly API bill is substantial and predictable, your workloads run near-continuously, and you have (or can hire) a team to run GPUs efficiently. At low to medium volumes, OpenAI’s pay-per-use pricing and zero-ops model are hard to beat. At higher scale, especially for EU-resident workloads or specialised domains, a well-tuned Llama/Mistral/DeepSeek cluster can lower unit costs provided you include people, compliance and incident response in your TCO model.

Q : How can a UK or EU company prove GDPR/DSGVO compliance when using OpenAI instead of self-hosted open-source AI?

A : You need to treat OpenAI as a data processor and document everything: where data flows, which regions process it, how long it’s retained and how you respond to data-subject requests. Use OpenAI’s enterprise or EU-regional offerings with no-training guarantees, sign DPAs, and add layered controls such as PII redaction, access management and logging. Then link this setup to your GDPR/DSGVO records of processing, DPIAs and AI Act documentation, so regulators and auditors can see exactly how the service is governed.

Q : What skills and roles does a team need before committing to an open-source LLM infrastructure strategy?

A : At a minimum you’ll need: cloud or on-prem infrastructure engineers comfortable with GPUs and Kubernetes; MLOps engineers who can handle deployment, scaling and model updates; security and privacy specialists who understand GDPR/UK-GDPR/HIPAA; and product owners who can translate governance rules into user-facing guardrails. In highly regulated sectors, add legal/compliance partners and an internal AI governance committee to watch EU AI Act and sector-specific rules as they evolve.

Q : Can a hybrid approach (OpenAI + open-source) still satisfy HIPAA, PCI DSS and BaFin requirements in practice?

A : Yes if it’s designed intentionally. Many organisations keep PHI, card data and BaFin-regulated workloads on self-hosted, EU-resident or HIPAA-aligned open-source stacks, while using OpenAI for low-risk tasks like summarising internal docs or generating marketing copy. The key is segmentation: clear data-flow boundaries, strong access controls, separate logging domains and documentation that shows regulators which systems see which data and under what safeguards. Done right, hybrid architectures can actually improve your risk posture by avoiding any single point of failure.